Natural Language Processing: Definition and Technique Types

Whatever definition you find on the web, artificial intelligence is, in essence, about mimicking the abilities of the human mind. Among these abilities, language is fundamental: it is how humans communicate with each other. That is why large corporations are investing heavily in advancing AI for language, in the form of Natural Language Processing (NLP). The field sits at the intersection of computer science, AI, and linguistics. The ultimate goal is to make computers understand natural language well enough to perform our text-based digital tasks.

NLP has become a significant part of the information age and a crucial part of AI. It has simplified our lives through digital virtual assistants, voice interfaces, chatbots, and much more. Other NLP applications include spell checking, keyword search, synonym finding, information extraction, sentiment analysis, machine translation, dialog systems, and complex question answering. New applications are being developed every day as NLP advances.

In this article, we will go through the different types of NLP techniques. Before we do that, let’s quickly go over what NLP actually is.

NLP Definition

SAS, a leader in analytics, defines it as follows: “Natural language processing (NLP) is a branch of artificial intelligence that helps computers understand, interpret and manipulate human language. NLP draws from many disciplines, including computer science and computational linguistics, in its pursuit to fill the gap between human communication and computer understanding.”

Hence, we can say that NLP is the automated manipulation of natural language such as speech or text by software. The study of natural language processing has been around for more than 50 years and grew out of the field of linguistics with the rise of computers.

NLP draws on the structure and meaning of human language, analyzing aspects such as syntax, semantics, pragmatics, and morphology. Computer science then turns this linguistic knowledge into rule-based or machine learning algorithms that can solve specific problems and perform desired tasks. This is how Gmail sorts our email into tabs, how software corrects our grammar, how voice assistants understand us, and how systems filter or categorize content.

Now that we have covered the definition and working methodologies, let’s transition to NLP techniques.

Named Entity Recognition

Named Entity Recognition (NER) is often the first step in information extraction. It aims to locate ‘named entities’ in text and classify them into predefined categories such as person names, locations, organizations, expressions of time, percentages, and monetary values. NER answers the ‘who’, ‘what’, and ‘where’ questions about a text: for example, which people or businesses are mentioned in an article, which products are named, and where relevant entities are located. Typical applications include the following.

- Entity Categorization: NER can scan news articles automatically and extract the people, businesses, organizations, celebrities, and locations mentioned in each one. This makes it easy to sort news content into categories.

- Search Engine: Running NER over articles, data, and news items lets their entities be extracted and stored separately as tags. Queries can then be matched against these tags, which speeds up search and makes the search engine more efficient.

- Customer Support: Businesses receive massive amounts of feedback and reviews every day, which adds up to a colossal data set. An NER API can pick out the relevant tags, helping businesses make sense of this data.
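As a rough illustration of segregating entities into predefined categories, here is a minimal rule-based sketch in Python. It is not how production NER systems work (those usually rely on trained statistical models); the gazetteer entries, the patterns, and the `find_entities` helper are all hypothetical and exist only for this example.

```python
import re

# Hypothetical gazetteers: tiny hand-made lists of known names per category.
GAZETTEER = {
    "PERSON": ["Ada Lovelace", "Alan Turing"],
    "ORG": ["Acme Corp"],
    "LOCATION": ["London", "Paris"],
}

# Hypothetical patterns for categories that follow a regular shape.
PATTERNS = {
    "MONEY": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d+)?"),
    "PERCENT": re.compile(r"\d+(?:\.\d+)?%"),
}


def find_entities(text):
    """Return (entity_text, category) pairs in order of appearance."""
    found = []
    # Dictionary lookup for names we already know.
    for label, names in GAZETTEER.items():
        for name in names:
            for match in re.finditer(r"\b" + re.escape(name) + r"\b", text):
                found.append((match.start(), match.group(), label))
    # Pattern matching for monetary values and percentages.
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            found.append((match.start(), match.group(), label))
    found.sort()  # order by position in the text
    return [(entity, label) for _, entity, label in found]


text = "Alan Turing joined Acme Corp in London, where revenue rose 12% to $1,500."
entities = find_entities(text)
for entity, label in entities:
    print(f"{label:9} {entity}")
```

A lookup table like this only finds names it already knows, which is why real systems learn to recognize entities from context instead.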

Tokenization

Tokenization is one of the most common tasks when working with text. It divides a given text into a list of tokens, which can be sentences, phrases, words, characters, numbers, punctuation marks, and more. The process has two significant advantages: it greatly reduces search time, and it makes efficient use of storage space. Mapping a text from characters to strings and from strings to words is the basic first stage of almost any NLP problem, because to comprehend a text or document we need to interpret the words and sentences it contains.

Tokenization is an essential part of every Information Retrieval (IR) framework: it not only pre-processes the text but also creates the tokens used in indexing and ranking. Various tokenization techniques are available. Tokenization is often followed by stemming, for which the Porter algorithm is one of the most popular techniques.
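To make the idea concrete, the following Python sketch splits a text first into sentences and then into word-level tokens using only regular expressions. The pattern and the function names are assumptions chosen for this example; real tokenizers handle many more edge cases (abbreviations, URLs, hyphenation, and so on).

```python
import re

# Assumed token pattern: a number (optionally with decimals), a word
# (optionally with internal apostrophes), or a single punctuation mark.
TOKEN_PATTERN = re.compile(r"\d+(?:\.\d+)?|\w+(?:'\w+)*|[^\w\s]")


def sentence_tokenize(text):
    """Split text at whitespace that follows '.', '!' or '?'."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def word_tokenize(text):
    """Split text into words, numbers, and punctuation tokens."""
    return TOKEN_PATTERN.findall(text)


text = "NLP is fun. It costs $3.50, doesn't it?"
sentences = sentence_tokenize(text)
tokens = word_tokenize(text)
print(sentences)
print(tokens)
```

Keeping punctuation as separate tokens is a design choice: some pipelines drop it, while others need it for sentence boundaries or sentiment cues.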

Sentiment Analysis

Sentiment Analysis tells us whether our data expresses a positive or negative attitude. Although there are various ways to perform sentiment analysis, a typical use case is identifying the emotion conveyed in a statement or collection of sentences in order to gauge customers’ overall mood. In marketing, this helps in understanding how people respond to various types of communication.

Sentiment analysis can be conducted using both supervised and unsupervised methods. Naive Bayes is one of the most common supervised models used for sentiment analysis; other machine learning methods, such as random forest or gradient boosting, can also be used. Unsupervised approaches, also known as lexicon-based strategies, rely on a lexicon of words annotated with their associated sentiment and polarity. A sentence’s sentiment score is then computed from the polarity of the words and phrases it contains.
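The lexicon-based approach can be sketched in a few lines of Python. The lexicon, the polarity values, and the one-word negation rule below are made up for illustration; a real lexicon would contain thousands of scored entries.

```python
import re

# Hypothetical polarity lexicon: positive words score > 0, negative < 0.
LEXICON = {"good": 1, "great": 2, "love": 2, "bad": -1, "terrible": -2, "hate": -2}
# Assumed rule: a negation word flips the polarity of the next word.
NEGATIONS = {"not", "never", "no"}


def sentiment_score(text):
    """Sum the polarity of every lexicon word found in the text."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = LEXICON.get(token, 0)
        if i > 0 and tokens[i - 1] in NEGATIONS:
            polarity = -polarity
        score += polarity
    return score


def classify(text):
    """Map the numeric score to a coarse sentiment label."""
    score = sentiment_score(text)
    if score > 0:
        return "positive"
    if score < 0:
        return "negative"
    return "neutral"


for review in ["I love this great phone", "The battery is not good", "It arrived on Tuesday"]:
    print(review, "->", classify(review))
```

No training data is needed here, which is the appeal of lexicon-based methods, but the result is only as good as the word list behind it.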

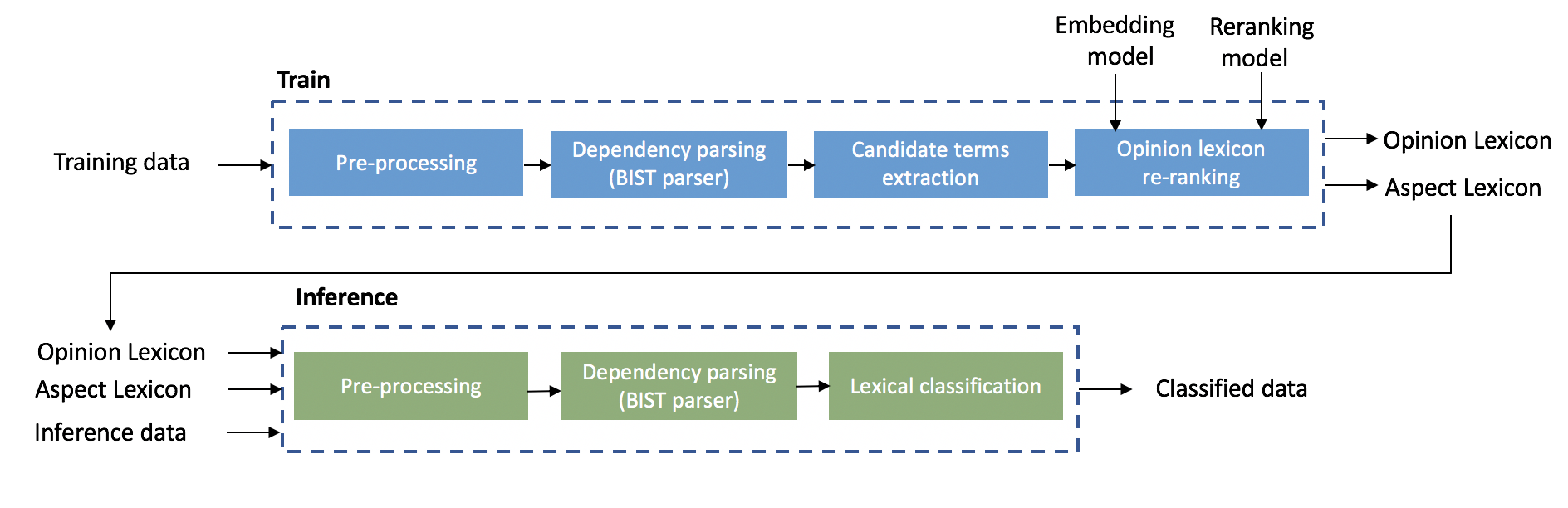

The Intel AI Lab has developed a lightly supervised aspect-based sentiment analysis (ABSA) solution, released as part of version 0.4 of the open-source NLP Architect library in April 2019. This solution enables a wide variety of users to generate a detailed sentiment report. The solution flow is divided into two phases: training and inference.

Automatic Text Summarization

Automatic text summarization is the process of producing a succinct and accurate summary from various text sources, ranging from books, news stories, blog posts, and academic papers to emails and tweets. Demand for automated text summarization systems is growing because of the vast volumes of textual data now available.

Text summarization can be broadly divided into two categories: extractive summarization and abstractive summarization.

- Extractive Summarization: These approaches select a subset of pieces, such as sentences and phrases, from the text and stack them together to create a summary. Identifying the relevant sentences is therefore of the utmost importance in an extractive system.

- Abstractive Summarization: These approaches use sophisticated NLP techniques to generate a brand-new summary. Some portions of this summary may not appear in the original text.
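The extractive approach is simple enough to sketch directly. The Python example below scores each sentence by the average frequency of its non-stop words and keeps the top-scoring sentences in their original order. The scoring rule, the stop-word list, and the `summarize` function are assumptions for illustration; many other ways of ranking sentences exist.

```python
import re
from collections import Counter

# Small, hypothetical stop-word list; real lists are much longer.
STOP_WORDS = {"a", "an", "and", "the", "is", "was", "are", "of", "to", "in", "on", "into"}


def content_words(text):
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOP_WORDS]


def summarize(text, num_sentences=1):
    """Pick the sentences whose words are most frequent in the whole text."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    freq = Counter(content_words(text))

    def score(sentence):
        words = content_words(sentence)
        return sum(freq[w] for w in words) / len(words) if words else 0.0

    ranked = sorted(range(len(sentences)), key=lambda i: score(sentences[i]), reverse=True)
    chosen = sorted(ranked[:num_sentences])  # restore original order
    return " ".join(sentences[i] for i in chosen)


article = (
    "Solar panels convert sunlight into electricity. "
    "Many homes now install solar panels on the roof. "
    "The weather was cloudy yesterday."
)
summary = summarize(article, num_sentences=1)
print(summary)
```

Every sentence in the output is copied verbatim from the input, which is exactly what distinguishes extractive from abstractive summarization.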

Keyword Detection for SEO Techniques

Keyword detection builds a short list of the most common words in your text data and compares it with your current SEO keyword list in order to develop or improve search engine optimization (SEO). Businesses scan their data to find the most relevant keywords, typically distinctive nouns. From these they can draw up shortlists of words, for example one based on features and another on price, that correspond closely to the questions customers ask about each product. They can also create a new SEO keyword list to help boost click rates and eventually gain more traffic.

For example, a business could analyze its customer support emails and find that one of its products draws more concerns about features than about price.
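A minimal Python sketch of this workflow might look as follows. The sample emails, the stop words, the existing SEO list, and the feature and price term sets are all invented for the example.

```python
import re
from collections import Counter

# Hypothetical inputs for illustration only.
STOP_WORDS = {"a", "an", "and", "but", "is", "of", "the", "too"}
FEATURE_TERMS = {"battery", "screen", "charger"}
PRICE_TERMS = {"price", "cost", "expensive"}


def words(doc):
    """Lowercase words with stop words removed."""
    return [w for w in re.findall(r"[a-z]+", doc.lower()) if w not in STOP_WORDS]


def top_keywords(docs, n=5):
    """Return the n most frequent non-stop words across all documents."""
    counts = Counter(w for doc in docs for w in words(doc))
    return [word for word, _ in counts.most_common(n)]


def compare_with_seo_list(keywords, seo_keywords):
    """Split detected keywords into those already targeted and new candidates."""
    seo = set(seo_keywords)
    return {
        "already_targeted": [k for k in keywords if k in seo],
        "new_candidates": [k for k in keywords if k not in seo],
    }


def concern_counts(docs):
    """Count how often feature terms and price terms are mentioned."""
    all_words = [w for doc in docs for w in words(doc)]
    return {
        "features": sum(w in FEATURE_TERMS for w in all_words),
        "price": sum(w in PRICE_TERMS for w in all_words),
    }


emails = [
    "The battery drains fast and the battery gets hot.",
    "Is the price of the charger too high?",
    "Battery life is short but the screen is fine.",
]
keywords = top_keywords(emails, n=3)
report = compare_with_seo_list(keywords, ["battery", "price"])
concerns = concern_counts(emails)
print(keywords, report, concerns)
```

In this made-up data set, feature terms are mentioned more often than price terms, mirroring the support-email example above.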

Topic Modelling

Topic modelling breaks a large body of text down into relevant keywords and ideas, from which you can then extract its main topics. This advanced technique is built on unsupervised machine learning, which does not depend on labeled data for training. Correlated Topic Model, Latent Dirichlet Allocation, and Latent Semantic Analysis are some of the algorithms that can be used to model the topics of a text body. Among these, Latent Dirichlet Allocation is the most popular: it examines the text body, isolates words and phrases, and then extracts different topics. The algorithm needs only a body of text to work.

Indium’s teX.ai, a SaaS-based accelerator, is one example: it uses topic modelling to identify the latent topics inside documents without anyone having to read them.
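For readers curious about what Latent Dirichlet Allocation does internally, here is a compact Python sketch that fits it with collapsed Gibbs sampling, one common inference method among several. The hyperparameters `alpha` and `beta`, the toy documents, and the function names are assumptions for illustration; in practice you would use a tested library rather than this hand-rolled version, and results on such a tiny corpus vary with the random seed.

```python
import random


def lda_gibbs(docs, num_topics=2, iterations=200, alpha=0.1, beta=0.01, seed=0):
    """Fit LDA on tokenized docs; returns (vocab, topic_word, doc_topic) counts."""
    rng = random.Random(seed)
    vocab = sorted({w for doc in docs for w in doc})
    word_id = {w: i for i, w in enumerate(vocab)}
    vocab_size = len(vocab)

    doc_topic = [[0] * num_topics for _ in docs]              # tokens per topic in each doc
    topic_word = [[0] * vocab_size for _ in range(num_topics)]  # word counts per topic
    topic_total = [0] * num_topics
    assignments = []

    # Start from a random topic assignment for every token.
    for d, doc in enumerate(docs):
        zs = []
        for w in doc:
            z = rng.randrange(num_topics)
            zs.append(z)
            doc_topic[d][z] += 1
            topic_word[z][word_id[w]] += 1
            topic_total[z] += 1
        assignments.append(zs)

    for _ in range(iterations):
        for d, doc in enumerate(docs):
            for i, w in enumerate(doc):
                v, z = word_id[w], assignments[d][i]
                # Remove the token's current assignment from the counts.
                doc_topic[d][z] -= 1
                topic_word[z][v] -= 1
                topic_total[z] -= 1
                # Resample its topic in proportion to doc-topic and topic-word fit.
                weights = [
                    (doc_topic[d][k] + alpha)
                    * (topic_word[k][v] + beta)
                    / (topic_total[k] + vocab_size * beta)
                    for k in range(num_topics)
                ]
                z = rng.choices(range(num_topics), weights=weights)[0]
                assignments[d][i] = z
                doc_topic[d][z] += 1
                topic_word[z][v] += 1
                topic_total[z] += 1
    return vocab, topic_word, doc_topic


def top_words(vocab, topic_word, n=3):
    """List the n highest-count words for each topic."""
    return [
        [vocab[v] for v in sorted(range(len(vocab)), key=lambda v: -row[v])[:n]]
        for row in topic_word
    ]


docs = [
    ["cat", "dog", "pet", "dog"],
    ["dog", "pet", "cat"],
    ["stock", "market", "trade"],
    ["market", "stock", "price", "trade"],
]
vocab, topic_word, doc_topic = lda_gibbs(docs, num_topics=2)
topics = top_words(vocab, topic_word)
print(topics)
```

Note that the input is just tokenized text with no labels, which is what makes the method unsupervised.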

Natural language processing is a branch of artificial intelligence and computational linguistics. Its main objective is to take human spoken or written language, process it, and convert it into a machine-understandable form. In other words, we are trying to extract meaning from natural language, be it English or any other language.

Businesses can use NLP in numerous areas, from medical informatics to marketing and advertising, and it is well suited to analyzing the content of huge datasets. NLP offers various techniques, such as sentiment analysis, named entity recognition, text mining, and information extraction. Once these techniques are applied, the extracted information can be fed into machine learning algorithms to produce accurate and relevant results.
