Emotional AI: How Cultural Factors Influence Gen Z Attitude Toward Technology

The unregulated nature of this Emotional AI technology has raised many ethical and privacy concerns. Will Gen Z hold back AI's rise if data privacy concerns are not addressed?

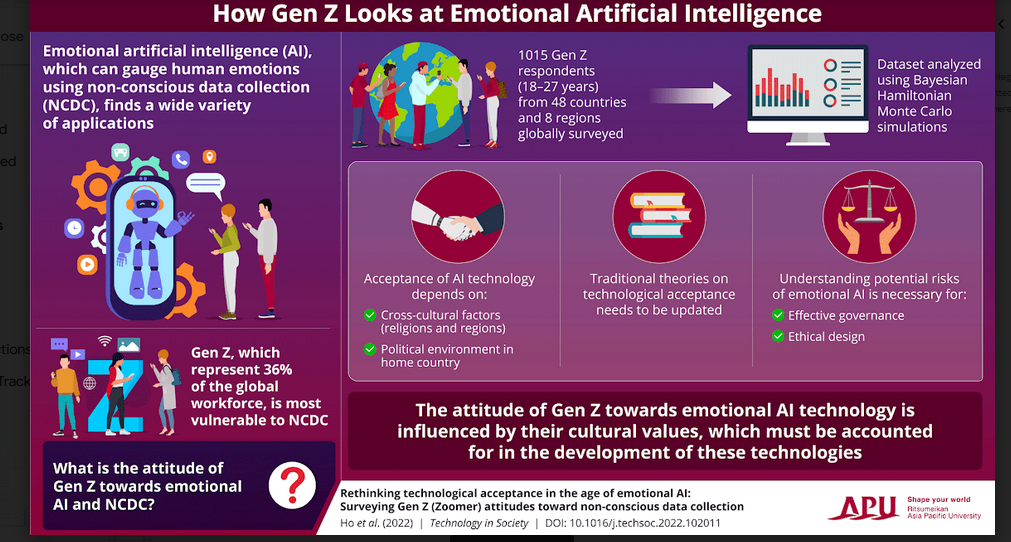

Emotional AI, also marketed as Affective Cognitive Intelligence and Artificial Emotional Intelligence, is a fast-growing domain in the data science world. It enables AI users to assess the emotional state and well-being of an individual or a group using sensor-based emotion detection systems built on computer vision, NLP, facial recognition, and other advanced machine learning algorithms. But are these individuals really happy that their emotional state is being measured by AI and not by real humans? As part of our latest analysis of top AI and ML research projects, we are evaluating the latest study from the “Emotional AI” project team. The project is led by Professor Peter Mantello, who published a new article in “Technology in Society”, a top-ranking international journal in the field of social sciences. The study investigated how people in a particular age group feel about emotional AI and what their attitude is toward technology acceptance in the AI era.

What is Emotional AI?

Emotional AI is defined as the subset of AI that “measures, understands, simulates, and reacts to human emotions.” MIT Media Lab professor Rosalind Picard first postulated “Affective Computing” and documented the use of emotional AI for research and commercial applications. In the last 25 years, Affective Computing, or Emotional AI, has emerged as a strong influence on the adoption of technology for digital transformation. New models are being developed for machine learning, human-computer interfaces, creative arts and Web 3.0, human health, mobile gaming, perceptual information retrieval, text analytics, document processing automation, and wearable computers or connected devices in the Internet of Things (IoT).

For this research, the team fitted its models to the data using a Bayesian Hamiltonian Monte Carlo approach with the R software package bayesvl.
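For readers unfamiliar with Hamiltonian Monte Carlo, the idea can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the study's code: the team worked in R with bayesvl, while the toy below samples from a standard normal target, and every function name and parameter value here is hypothetical.

```python
import math
import random

def potential(q):
    """Negative log density of the toy target (standard normal, an assumption)."""
    return 0.5 * q * q

def grad_potential(q):
    """Gradient of the potential above."""
    return q

def leapfrog(q, p, step_size, n_steps):
    """Simulate Hamiltonian dynamics with the leapfrog integrator."""
    p -= 0.5 * step_size * grad_potential(q)       # half step for momentum
    for i in range(n_steps):
        q += step_size * p                         # full step for position
        if i < n_steps - 1:
            p -= step_size * grad_potential(q)     # full step for momentum
    p -= 0.5 * step_size * grad_potential(q)       # final half step for momentum
    return q, p

def hmc_sample(n_samples, step_size=0.15, n_steps=10, seed=0):
    """Draw samples with basic HMC: propose via leapfrog, then accept or reject."""
    rng = random.Random(seed)
    q = 0.0
    samples = []
    for _ in range(n_samples):
        p = rng.gauss(0.0, 1.0)                    # fresh random momentum
        current_h = potential(q) + 0.5 * p * p
        q_new, p_new = leapfrog(q, p, step_size, n_steps)
        proposed_h = potential(q_new) + 0.5 * p_new * p_new
        # Metropolis correction for the integrator's small energy error.
        if rng.random() < math.exp(min(0.0, current_h - proposed_h)):
            q = q_new
        samples.append(q)
    return samples

draws = hmc_sample(2000)
```

In a real analysis the potential would be the negative log posterior of the regression model, and a package such as bayesvl handles the tuning of the step size and trajectory length.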

Data used to build Emotional AI algorithms

There are different techniques used in emotional AI algorithms. The techniques differ from each other based on the source and volume of data that is collected to train and execute ML algorithms for Affective Computing. Some of the popular techniques are:

- Sentiment analysis

- Facial expression recognition

- Voice analytics

- Eye tracking

- Internal physiology

- AR/VR data

- Wearables and connected devices

Today, AI-driven augmentative machine intelligence tools can analyze human emotions effectively from non-verbal cues. However, the technology's unregulated nature, the increasing risk of bias, and a lack of re-calibration for cultural differences make it important to understand its acceptability among Generation Z, the demographic most vulnerable to it. AI researchers from Japan and Vietnam have put forward the findings of their investigation into how socio-cultural factors influence attitudes toward new AI technology, providing guidelines for its future design and regulation.

Who are Gen Z?

The demographic cohort referred to as “Generation Z” (colloquially shortened to Gen Z in the media, and covering people born between 1995 and 2010) makes up one of the biggest markets for brands and influencers. Even from a non-commercial angle, the Gen Z population is looked upon as trend-changers in socio-economic and technology fields. They are also called the iGeneration and the Homeland Generation for their role in accepting technology as a necessity to sustain a high quality of life. However, there is concern about the way technology has entered our private lives, and AI’s role is under the scanner for reasons that require granular study. A new study looks at the socio-cultural factors that influence the acceptance of new AI technology among Generation Z.

Emotional AI and Gen Z: Can Technology be Trusted?

AI is the governing framework and foundation of the digital transformation journeys developed as part of the “connected” and “smart technology” economies. AI is ubiquitous, yet it lacks the ability to keep humans engaged in actions and conversations. The reason: AI doesn’t understand emotions at the level we had hoped for, given the research and investment put into the field. From self-driving cars to voice assistants on our smartphones, AI is there, but more is required to make these systems reliable and engaging.

That’s what brings Emotional AI into the picture.

Machine learning (ML) algorithms can now identify, sense, and calibrate different types of human emotions. This allows machines to interact with humans and engage them in personalized conversations. The process uses “non-conscious data collection” (NCDC). The ML algorithm is embedded in an NCDC platform that extracts information about a person’s heart and respiration rate, iris movement, gestures, voice tones, micro-facial expressions, locomotion, etc., to analyze their mood and personalize its response accordingly.

However, NCDC is still an unregulated space. The unregulated nature of this Emotional AI technology has raised many ethical and privacy concerns.

According to the latest online study, titled “Rethinking technological acceptance in the age of emotional AI: Surveying Gen Z (Zoomer) attitudes toward non-conscious data collection,” a regression analysis on a dataset of 1015 Generation Z student respondents revealed the implications of poor data governance in contemporary emotional AI systems. The researchers examined the strengths and weaknesses of traditional theories such as the “Technological Acceptance Model” by Davis in accounting for cross-cultural factors such as religion and region, given the transfer of new technologies across borders. Gen Z are clearly affected by data governance, or the lack of it, in emotional AI systems. This could hamper technology adoption globally in the coming years.

Let’s understand this using the research on Emotional AI.

Making up 36% of the global workforce, Gen Z is likely to be the most vulnerable to emotional AI. Moreover, AI algorithms are rarely calibrated for socio-cultural differences, making their implementation all the more concerning.

In a new study made available online on 9 June 2022 and published in Volume 70 of Technology in Society on 22 June 2022, a team of researchers strove to uncover the factors governing Gen Z’s response towards emotional AI. The team included Prof. Peter Mantello and Prof. Nader Ghotbi of Ritsumeikan Asia Pacific University, Japan; Manh-Tung Ho, Minh-Hoang Nguyen, and Hong-Kong T. Nguyen, doctoral students at Ritsumeikan Asia Pacific University; and Dr. Quan-Hoang Vuong of Phenikaa University, Vietnam.

“NCDC represents a new development in human-machine relations, and are far more invasive compared to previous AI technologies. In light of this, there is an urgent need to better understand their impact and acceptance among the Gen Z members,” says Prof. Mantello. The study was a part of the project “Emotional AI in Cities: Cross Cultural Lessons from UK and Japan on Designing for an Ethical Life” funded by JST-UKRI Joint Call on Artificial Intelligence and Society (2019), Grant No. JPMJRX19H6.

The team surveyed 1015 Gen Z respondents spanning 48 countries and 8 regions worldwide. The participants were asked about their attitudes towards NCDC as used by both commercial and state actors. The researchers then used a Bayesian multilevel analysis to control for variables and observe the effect of each variable one at a time.
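A multilevel analysis lets estimates for each group, such as a region, borrow strength from the whole sample, so that small groups are not over-interpreted. The Python sketch below illustrates that partial-pooling idea only. It is not the study's model: the regional scores, the two variance values, and the function name are hypothetical, and the grand mean is simply taken as the average of all responses.

```python
from statistics import mean

def partial_pool(group_scores, within_var, between_var):
    """Shrink each group's mean toward the grand mean (normal-normal model).

    within_var  : assumed variance of individual responses inside a group
    between_var : assumed variance of true group means around the grand mean
    """
    all_scores = [s for scores in group_scores.values() for s in scores]
    grand_mean = mean(all_scores)
    pooled = {}
    for group, scores in group_scores.items():
        n = len(scores)
        # The weight on a group's own mean grows with its sample size.
        weight = between_var / (between_var + within_var / n)
        pooled[group] = weight * mean(scores) + (1 - weight) * grand_mean
    return pooled

# Hypothetical concern scores (1 = unconcerned, 5 = very concerned); not study data.
survey = {
    "region_a": [4, 5, 4, 5, 4, 4, 5, 4],
    "region_b": [2, 3],
    "region_c": [3, 4, 3, 3, 4],
}
estimates = partial_pool(survey, within_var=1.0, between_var=0.5)
```

Here the two-respondent region is pulled further toward the overall average than the eight-respondent region, which is the behaviour that makes multilevel models useful for a survey spread unevenly across 48 countries.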

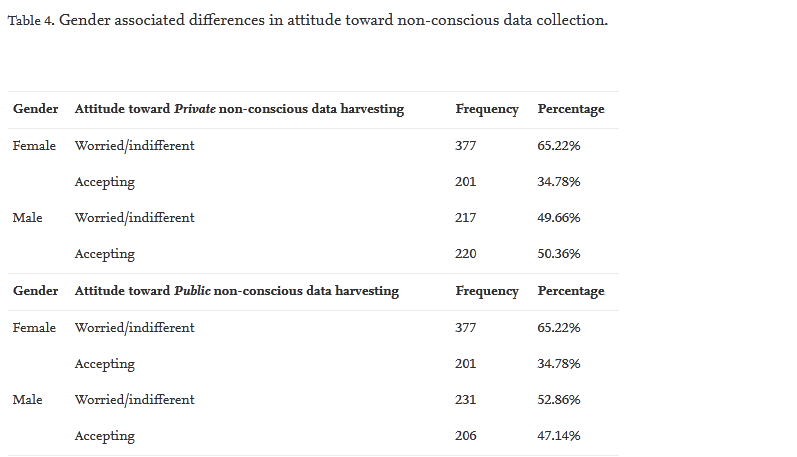

The team found that, overall, more than 50% of the respondents were concerned about the use of NCDC. However, the attitude varied based on gender, income, education level, and religion.

“We found that being male and having high income were both correlated with having positive attitudes towards accepting NCDC. In addition, business majors were more likely to be more tolerant towards NCDC,” highlights Prof. Ghotbi. Cultural factors, such as region and religion, were also found to have an impact, with people from Southeast Asia, Muslims, and Christians reporting concern over NCDC.

“Our study clearly demonstrates that socio-cultural factors deeply impact the acceptance of new technology. This means that theories based on the traditional technology acceptance model by Davis, which does not account for these factors, need to be modified,” explains Prof. Mantello.

Key Highlights: Gen Z’s View on Emotional AI and Other Data Science Technologies

Technology Acceptance based on social theories

The researchers examined several traditional models to understand how socio-cultural factors shape technology adoption among Gen Z. These models are:

- Theory of Reasoned Action

- Social Cognitive Model

- Technology Acceptance Model

None of these were able to quantify or analyze the influence of cross-cultural factors on technology acceptance and rejection.

Cultural perceptions in the modern digital era

Newer approaches are gaining popularity in the digital era. We are witnessing hype around technology adoption in areas such as net zero carbon and greenwashing. Cultural perceptions differ in these areas as far as the use of AI is concerned. People of different faiths and religions see the use of technology for social media, carbon capture, connectivity, and banking differently.

Workplace use

Geographical differences and cultural ethos also influence the way AI users are likely to perceive emotional AI and other technologies in the workplace. With remote working in full force in most parts of the world, the gap in adoption will only widen if these issues are not addressed today.

Gender bias!

AI systems that run on personal data are bound to be biased. Female users are likely to be more restrictive about sharing their app or device data with AI training models, which can prevent the ML algorithms from delivering their results. Compared to female users, male AI users are more tolerant of this bias and are more likely to share personal health and behavioral data with the training models to improve results and experiences.

Biases could also arise from differences in financial health and income. Gen Z members from affluent families with higher per capita income are likely to place more trust in AI systems than those from less affluent families.

Data harvesting and data surveillance

More research is required to understand what the Gen Z population truly feels about data harvesting and data surveillance techniques. Currently, these pervasive and persuasive AI models affect the mental health of Gen Z members, who feel that they are being supervised and monitored all the time. Total dependence on technology also has a serious impact on health, resulting in loneliness, aloofness, emotional and social distancing, anxiety, depression, low self-esteem, and rage.

To give AI systems more legitimacy, trust, and accountability, researchers should start developing ML models that account for cultural differences and other factors more accurately. Data governance is required, but it needs to be integrated with all the other factors that influence the way the Gen Z population uses AI for various needs.

The authors would like to thank all the APU faculty members who helped distribute the survey. Author Manh-Tung Ho would like to express his sincere gratitude for the support of the SGH Scholarship Foundation.

Reference: Mantello et al., “Rethinking technological acceptance in the age of emotional AI: Surveying Gen Z (Zoomer) attitudes toward non-conscious data collection,” Technology in Society, Volume 70, 2022, 102011, ISSN 0160-791X, https://doi.org/10.1016/j.techsoc.2022.102011.
