How to Get Started with Prompt Engineering in Generative AI Projects

If you are familiar with ChatGPT-led conversations, you have very likely already heard about “prompt engineering.” Prompt engineering is changing the way programmers bring powerful AI capabilities into their work, and judging by search trends, it is one of the most searched topics for AI-related jobs and courses. It is a must-have skill for anyone who wants to get started with tools that generate AI content. In this article, we will explore the world of prompt engineering in gen AI projects and unravel how to use intelligent prompts for real-world applications.

Is Prompt Engineering a Real Science?

YES. Prompt engineering is a real science, and learning its concepts can help you understand Generative AI more easily. You would work closely with the most advanced Large Language Models (LLMs), which can generate new information using deep learning. With prompt engineering, you can build new contextual knowledge graphs by combining relevant inputs with existing data points. In short, once you have guided these models with well-designed prompts, you can let them do much of the talking.

Let’s get started with the idea.

What is Prompt Engineering?

Telling an LLM what it should do: that’s prompt engineering in one line. Depending on the results you want from the LLM, a prompt can use zero-shot techniques (the instruction alone) or few-shot techniques (the instruction plus a few worked examples).

Anyone can start with prompt engineering. The journey begins with many simple prompts, each tested for efficacy against the information you already have and the instructions you type into the query box.
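To make the zero-shot/few-shot difference concrete, here is a minimal Python sketch that only assembles prompt text and does not call any model. The function names and the `Input:`/`Output:` layout are illustrative assumptions rather than a standard format.

```python
def zero_shot_prompt(instruction: str, query: str) -> str:
    """Zero-shot: the model sees only the instruction and the new input."""
    return f"{instruction}\n\nInput: {query}\nOutput:"


def few_shot_prompt(instruction: str, examples: list[tuple[str, str]], query: str) -> str:
    """Few-shot: worked input/output pairs come before the new input."""
    shots = "\n\n".join(f"Input: {inp}\nOutput: {out}" for inp, out in examples)
    return f"{instruction}\n\n{shots}\n\nInput: {query}\nOutput:"


task = "Classify the sentiment of the review as positive or negative."
print(zero_shot_prompt(task, "The battery died in a day."))
print(few_shot_prompt(
    task,
    [("Great screen, fast delivery.", "positive"),
     ("Stopped working after a week.", "negative")],
    "The battery died in a day.",
))
```

The few-shot version costs more tokens, but the examples show the model the exact answer format you expect.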

What are the components of a prompt?

The prompt engineering approach requires an engineer to design an optimal workflow or conversation flow that instructs the model to perform a task. Access to world-class LLMs enables engineers to build prompt sequences for a wide range of advanced operations, including conversational chats with virtual assistants, text-to-voice synthesis, mathematical probability problems, logical reasoning, and code generation.

Now, to manage the prompt workflow, you have to understand the components involved in the operation.

There are four components in a prompt workflow:

- Question / Instruction

- External stimuli / context

- Input data

- Output format

A standard prompt sequence includes a <question> or <instruction> directed at the machine learning model chosen to perform the task. The context adds enriching information that steers the conversation. The input data is the specific text or question for which the LLM should find a response. Finally, the output format is the structure in which the result should be displayed to the viewer.
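The four components can be stitched together mechanically. The sketch below uses a hypothetical `PromptParts` dataclass and `build_prompt` helper (not part of any library); the section labels are an assumed convention, and sending the finished prompt to an actual LLM is left out.

```python
from dataclasses import dataclass


@dataclass
class PromptParts:
    instruction: str         # what the model should do
    context: str = ""        # background information that steers the answer
    input_data: str = ""     # the text or question to work on
    output_format: str = ""  # how the result should be structured


def build_prompt(parts: PromptParts) -> str:
    """Join the non-empty components in a fixed order, each under a label."""
    sections = [
        ("Instruction", parts.instruction),
        ("Context", parts.context),
        ("Input", parts.input_data),
        ("Output format", parts.output_format),
    ]
    return "\n\n".join(f"{label}:\n{text}" for label, text in sections if text)


print(build_prompt(PromptParts(
    instruction="Answer the customer's question.",
    context="Store policy: returns are accepted within 30 days of purchase.",
    input_data="Can I return shoes I bought 45 days ago?",
    output_format="One sentence, starting with Yes or No.",
)))
```

Empty components are skipped, so the same helper covers a bare instruction as well as a fully specified prompt.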

A widely used prompt engineering technique is chain-of-thought (CoT) prompting, which asks the model to reason through intermediate steps before giving its answer. Combined with zero-shot or few-shot prompting, CoT can produce human-like responses to a specific set of instructions or queries on a fine-tuned topic, and as your expertise grows, you can scale NLP conversations quickly. The ultimate aim of prompt engineering is to enhance the power, reach, and capabilities of generative AI tools with little or no human supervision, giving Gen AI platforms room to work across modalities such as text, voice, images, video, 3D models, and software code.
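Here is a minimal sketch of two common ways to add chain-of-thought to a prompt: a zero-shot trigger phrase such as “Let’s think step by step,” which appears in published CoT research, and a few-shot example whose answer spells out its steps. The worked example and function names are illustrative, and only the prompt text is built.

```python
COT_TRIGGER = "Let's think step by step."

# Hand-written demonstration whose answer spells out each reasoning step.
COT_EXAMPLE = (
    "Q: A shop sells pens at 3 for $2. How much do 12 pens cost?\n"
    "A: 12 pens make 12 / 3 = 4 groups of 3. Each group costs $2, "
    "so the total is 4 * 2 = $8. The answer is $8."
)


def zero_shot_cot(question: str) -> str:
    """Zero-shot CoT: a trigger phrase nudges the model to show its steps."""
    return f"Q: {question}\nA: {COT_TRIGGER}"


def few_shot_cot(question: str, examples: tuple[str, ...] = (COT_EXAMPLE,)) -> str:
    """Few-shot CoT: worked examples with visible reasoning precede the question."""
    return "\n\n".join([*examples, f"Q: {question}\nA:"])


print(few_shot_cot("A shop sells notebooks at 4 for $6. How much do 8 notebooks cost?"))
```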

How to Build Prompts: Some Examples

Q&A

The question-answering format is one of the most popular prompt formats for engineers experimenting with Generative AI. It could consist of:

- Multi-choice questions

- Likert scale

- Open-ended questions

- Yes/No or True/False questions

- Probing questions, and so on

With practice, prompt engineers can refine these Q&A sequences with better context and clearer input instructions, giving question answering a more organized structure.
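A multiple-choice prompt works best when the answer format is pinned down and the reply is checked. This Python sketch labels the options with letters and parses the reply; the parsing rule (the reply must start with a valid letter) is an assumption chosen for simplicity, and anything else returns None so the question can be retried or sent to a human.

```python
import re
import string
from typing import Optional


def multiple_choice_prompt(question: str, options: list[str]) -> str:
    """Label the options A, B, C, ... and ask for a single-letter answer."""
    letters = string.ascii_uppercase[: len(options)]
    lines = "\n".join(f"{letter}) {text}" for letter, text in zip(letters, options))
    return (
        f"Question: {question}\n{lines}\n"
        f"Answer with one letter ({', '.join(letters)}) and nothing else."
    )


def parse_choice(reply: str, n_options: int) -> Optional[str]:
    """Accept replies like 'B', 'b)', or '(B) Paris'; return None otherwise."""
    valid = string.ascii_uppercase[:n_options]
    match = re.match(rf"\s*\(?([{valid}])\b", reply.upper())
    return match.group(1) if match else None


print(multiple_choice_prompt("What is the capital of France?", ["Berlin", "Paris", "Rome"]))
print(parse_choice("(B) Paris", 3))  # "(B) Paris" stands in for a real model reply
```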

Text Summarization using NLP/ Deep Learning

Another popular application of prompt engineering is text summarization using NLP. It is applied to long-form articles, research papers, emails, and news articles to generate a concise summary of the content. Summarization prompts can provide an easy-to-read gist of a complex topic within seconds, which is very useful for breaking down subjects in domains such as healthcare, life sciences, engineering, law, education, and media and entertainment.
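A summarization prompt usually states the length, the audience, and what the model must not do. In this sketch, the `<text>` delimiters and the word-count check are assumptions, not requirements of any particular model; the check exists because models do not always honor length limits.

```python
def summarization_prompt(text: str, max_words: int, audience: str) -> str:
    """Ask for a summary with an explicit length limit and target audience."""
    return (
        f"Summarize the text between the <text> tags in at most {max_words} words "
        f"for {audience}. Use plain language and do not add facts that are not "
        "in the text.\n\n"
        f"<text>\n{text.strip()}\n</text>"
    )


def within_limit(summary: str, max_words: int) -> bool:
    """Models do not always respect length limits, so check the reply."""
    return len(summary.split()) <= max_words


print(summarization_prompt("(paste a long research abstract here)", 60, "non-specialist readers"))
```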

Information Extraction

Content analysts use Information Extraction (IE) to pull structured facts from diverse sources, including websites and social media. Automated IE and retrieval work by semantically annotating data from various textual references. In prompt engineering, IE helps build a structured data pipeline for knowledge graphs, which are used in text analytics, semantic search, and content classification. Typically, IE in prompt engineering applications supports knowledge discovery in medical research, business intelligence, financial data management, media monitoring, and enterprise content search. With AI, it is possible to classify unstructured data points and interlink them with known datasets for highly refined, customized prompt processing.
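For prompt-based extraction, a common pattern is to ask for machine-readable output and validate it before it enters a data pipeline. The sketch assumes the model replies with a JSON object; the `company`/`person`/`date` fields are a hypothetical schema, and the model call itself is omitted.

```python
import json

FIELDS = ("company", "person", "date")  # hypothetical schema for illustration


def extraction_prompt(text: str, fields: tuple[str, ...] = FIELDS) -> str:
    """Ask for one JSON object with exactly the requested keys."""
    keys = ", ".join(f'"{name}"' for name in fields)
    return (
        f"Extract the following fields from the text: {keys}.\n"
        "Return only a JSON object with exactly these keys. "
        "Use null for any field the text does not mention.\n\n"
        f"Text: {text}"
    )


def parse_extraction(reply: str, fields: tuple[str, ...] = FIELDS) -> dict:
    """Parse the JSON reply, reject non-objects, and keep only expected keys."""
    data = json.loads(reply)  # raises json.JSONDecodeError on malformed output
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    return {name: data.get(name) for name in fields}


reply = '{"company": "Acme Corp", "person": "Jane Doe", "date": null}'  # stand-in reply
print(parse_extraction(reply))
```

Missing keys come back as None and unexpected keys are dropped, so downstream code always sees the same shape.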

Text Classification

By commonly cited estimates, more than 80% of available text information is unstructured. Collecting, analyzing, and re-processing textual data by hand is both time-consuming and costly. That’s why machine learning is used to handle large volumes and varieties of text through a technique called “text classification.” Technically, text classification is an NLP approach that takes open-ended text data sets of any size and sorts them into predefined categories based on industry, age, publication, and other labeled groups.

Justin McCarthy, CTO and co-founder of StrongDM, says, “We are entering a magical moment in the world of technology where AI can be used to offload initial tasks that take five hours for humans to slug through, but it might take an AI five minutes. Artificial Intelligence has the potential to ease the burden on IT teams by automating access policy development and accelerating product innovations. It can also improve the effectiveness of Security Operations Centers (SOCs) by filtering out irrelevant activities and focusing on genuine threats thanks to a secondary layer of reasoning helping to filter out the thousands of activity notifications. Security teams may soon begin seeing lightened workloads and more productivity thanks to AI, and that’s something to look forward to as we celebrate World Productivity Day.”

Text classification using prompt techniques enables big data teams to analyze large volumes of unstructured text. It is widely used in customer service, where a manager may have to analyze millions of data points from calls, emails, chats, and tickets to understand customer sentiment on high-priority interactions. Automated text categorization, sentiment analytics, customer feedback management, web content moderation, and ML-based feature extraction are prominent examples of prompt engineering applications in this domain.
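For sentiment or ticket classification, it helps to give the model a closed label set and to normalize its reply. The labels below and the `needs_review` fallback are hypothetical choices for illustration; the model call is omitted.

```python
LABELS = ("positive", "neutral", "negative")


def classification_prompt(message: str, labels: tuple[str, ...] = LABELS) -> str:
    """Constrain the model to a closed set of category names."""
    return (
        "Classify the customer message into exactly one category: "
        + ", ".join(labels)
        + ".\nReply with the category name only.\n\n"
        + f"Message: {message}"
    )


def normalize_label(reply: str, labels: tuple[str, ...] = LABELS) -> str:
    """Map a free-text reply onto the label set; flag anything else for review."""
    cleaned = reply.strip().strip(".").lower()
    return cleaned if cleaned in labels else "needs_review"


print(classification_prompt("My refund still hasn't arrived after three weeks."))
print(normalize_label(" Negative.\n"))  # stand-in for a real model reply
```

Routing unrecognized replies to `needs_review` keeps a human in the loop instead of silently mislabeling a ticket.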

Reasoning

“Reasoning” is the toughest application in the AI-powered prompt engineering domain. It requires close collaboration between human agents, software trainers, and machine learning models. Prompt techniques work well for deductive and inductive reasoning, while a lot of work remains to be done on abductive and common-sense reasoning. Prompt-driven reasoning helps machine learning models solve complex problems, communicate better with human agents, and make informed decisions by drawing inferences from metadata and knowledge.
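The difference between deductive and inductive prompts shows up in how they are framed. In this sketch, which only builds prompt text, a deductive prompt supplies premises and asks what follows from them, while an inductive prompt supplies observations and asks for a general rule; the wording is an assumption, not a tested recipe.

```python
def deductive_prompt(premises: list[str], question: str) -> str:
    """Deduction: state the premises and ask what must follow from them."""
    numbered = "\n".join(f"{i}. {p}" for i, p in enumerate(premises, start=1))
    return (
        f"Premises:\n{numbered}\n\n"
        f"Using only these premises, answer: {question}\n"
        "Cite the premise numbers you relied on."
    )


def inductive_prompt(observations: list[str]) -> str:
    """Induction: show observations and ask for the general pattern behind them."""
    listed = "\n".join(f"- {o}" for o in observations)
    return (
        f"Observations:\n{listed}\n\n"
        "Propose the most likely general rule that explains these observations, "
        "and name one observation that would contradict it."
    )


print(deductive_prompt(
    ["All invoices over $10,000 need CFO approval.", "Invoice 17 is $12,500."],
    "Does invoice 17 need CFO approval?",
))
```

Asking for cited premises (deduction) or a counterexample (induction) makes the model's reasoning easier for a human to check.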

Virtual Conversation

When ChatGPT launched in late 2022 and was widely described as capable of passing the Turing Test, it became clear that the sky would be the limit for prompt engineers. Almost anything now seems possible with generative tools built on massive LLMs.

Chatbot conversation building is one of the most fascinating areas of innovation in the generative AI era. By combining text classification, categorization, reasoning, and prompt mechanics, engineers can now build chatbots that simulate near-human interactions. AI engineers have developed generative chatbot conversation builders for fields such as art and science, music, sales and marketing, automation, and immersive gaming.

Today, prompt-based virtual conversations can take the shape of poems, novels, and highly informative scientific papers.

Some examples:

I have spinach, honey, zucchini, and cucumber. What can I cook using these ingredients for breakfast?

How can I optimize my website landing page using automation tools?

I would like to win a game of chess in fewer than ten moves. Can you help me achieve this feat?

Which spell does Harry Potter use most often in the novels?

What are the top 10 destination wedding locales in North America?

How can I create an e-commerce website for healthcare products, targeting users over the age of 50?

Please help me write a romantic 500-word poem for my Valentine.

Please create a 500-word introductory speech for my graduation ceremony from engineering college. It should be professional and humorous.

Can you create a résumé for me? I want to apply to NASA as a space researcher.
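Behind prompts like these, a chatbot usually sends the model the whole conversation as a list of role-tagged messages. The sketch assumes the common `system`/`user`/`assistant` message convention used by several chat APIs; the `Conversation` class and its `max_turns` trimming rule are hypothetical, and no model is called.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    system: str                 # persona and ground rules for the assistant
    max_turns: int = 6          # how many recent messages to resend (assumed > 0)
    history: list[dict] = field(default_factory=list)

    def add(self, role: str, content: str) -> None:
        """Record one user or assistant message."""
        if role not in ("user", "assistant"):
            raise ValueError(f"unexpected role: {role}")
        self.history.append({"role": role, "content": content})

    def messages(self) -> list[dict]:
        """System message first, then only the most recent turns."""
        return [{"role": "system", "content": self.system}, *self.history[-self.max_turns:]]


chat = Conversation(system="You are a friendly cooking assistant. Suggest simple recipes.")
chat.add("user", "I have spinach, honey, zucchini, and cucumber. "
                 "What can I cook using these ingredients for breakfast?")
print(chat.messages())
```

Trimming old turns keeps the prompt within the model's context window, at the cost of forgetting early parts of long chats.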

Copilot/ Code Generation

The new generation of prompts is powering copilot-led software development projects. A copilot is essentially a virtual co-worker that harnesses LLMs to carry out a series of AI-optimized tasks and workflows. For example, Microsoft Copilot is an embedded Generative AI capability that can be used alongside Word, Excel, PowerPoint, Outlook, Teams, and Business Chat. It is not alone: open-source AI copilots have emerged in recent months that could fundamentally change the way programmers automate their coding projects. Beyond unlocking creativity and productivity, prompt-driven code generation helps users build the skills needed to handle LLMs in a complex, volatile ecosystem, one where you are constantly tested and audited for compliance with data privacy, governance, and security requirements. Used carefully, copilots can help you become a more successful programmer, with fewer errors and fewer incident reports.
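A code-generation prompt typically fixes the language, the signature, and the requirements, then asks for code only. The helpers below are a hypothetical sketch; in particular, pulling the first fenced block out of the reply assumes the model follows the formatting instruction, which it may not always do.

```python
import re
from typing import Optional

FENCE = "`" * 3  # three backticks, built indirectly so this snippet nests cleanly in Markdown


def codegen_prompt(language: str, signature: str, requirements: list[str]) -> str:
    """Pin down language, signature, and requirements; ask for code only."""
    reqs = "\n".join(f"- {r}" for r in requirements)
    return (
        f"Write a {language} function with this signature:\n{signature}\n\n"
        f"Requirements:\n{reqs}\n\n"
        f"Return only the code in a single {FENCE}{language.lower()} block."
    )


def extract_code(reply: str) -> Optional[str]:
    """Return the body of the first fenced code block in a reply, or None."""
    pattern = re.escape(FENCE) + r"[\w+-]*\n(.*?)" + re.escape(FENCE)
    match = re.search(pattern, reply, re.DOTALL)
    return match.group(1).rstrip() if match else None


print(codegen_prompt("Python", "def slugify(title: str) -> str",
                     ["Lowercase the text", "Replace spaces with hyphens"]))
```

Generated code extracted this way should still be reviewed and tested before it is merged.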

Getting Started with Prompt Engineering in Easy Steps

Step 1: Deciding if you really need to engineer a prompt sequence

Deciding whether a task needs prompt engineering at all can take time. AI developers and engineers often collaborate for days or weeks before settling on the prompt sequences that make an AI system tick. Because prompt engineering is not restricted to technical professionals, a business benefits from defining its prompt approach before the work starts and from limiting that work to concrete business use cases.

Step 2: Apply E-A-T to Prompt Engineering

If you are skilled in the E-A-T concept from SEO and content writing, you are more likely to succeed with your prompts. E-A-T stands for Expertise, Authoritativeness, and Trustworthiness. When you begin writing prompts for AI development, keep them precise and simple. There are many LLMs and domains to experiment with, and starting with simple instructions in an interactive way helps you break a complex problem into smaller, simpler tasks whose responses are both specific and coherent. As you get more comfortable with the AI side of prompt writing, your responses will improve with each iteration.
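Breaking a complex problem into smaller prompts is often implemented as a chain, where each step's output feeds the next step. The sketch assumes a `model` function that takes a prompt string and returns a reply string; the `{previous}` placeholder, the step templates, and the stand-in `fake_model` are all hypothetical.

```python
from typing import Callable

Model = Callable[[str], str]  # any function that maps a prompt to a reply


def run_chain(model: Model, steps: list[str], initial_input: str) -> list[str]:
    """Run prompt templates in order, feeding each output into the next step.

    '{previous}' is substituted with str.replace rather than str.format,
    so braces inside user text or model output cannot break the template.
    """
    outputs = []
    previous = initial_input
    for template in steps:
        previous = model(template.replace("{previous}", previous))
        outputs.append(previous)
    return outputs


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM call: echoes the first line of the prompt."""
    return f"(reply to: {prompt.splitlines()[0]})"


steps = [
    "List the sub-tasks needed for this request:\n{previous}",
    "Answer each sub-task briefly:\n{previous}",
    "Combine these answers into one coherent plan:\n{previous}",
]
print(run_chain(fake_model, steps, "Plan a product launch for a budgeting app")[-1])
```

Keeping every intermediate output makes it easy to see which step of the chain went wrong.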

Step 3: Structure your instructions for simplicity and directness

Experts recommend that prompts be simple to understand yet specific enough for the model to grasp the true intent of your input. For example, instead of generic words such as “question” or “input,” try action verbs such as “Book,” “Order,” “Play,” “Write,” “Generate,” “Add/Deduct/Multiply,” “Build,” and “Compute,” along with other relevant cues.

The objective of this step is to make your prompts work reliably for a wider group of users, such as engineers, analysts, content writers, artists, and amateur AI professionals, so that the model understands and responds well to all of them.

Step 4: Apply precise wording and formatting

The anatomy of a powerful prompt lies in its domain specificity, clarity of topic, and precise wording. Vague or ambiguous prompts deliver wayward responses. Similarly, a clean, well-structured format helps the model interpret the instructions and produce better outcomes. Readability, bullets and punctuation, and explicit conditional instructions are key to building an effective prompt sequence for generative AI projects.
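Here is one way to apply these formatting ideas: labeled sections, bulleted rules, an explicit conditional instruction, and a clearly delimited input. The section headings, the triple-quote delimiters, and the fallback phrase are assumptions for illustration.

```python
def formatted_prompt(task: str, rules: list[str], document: str, fallback: str) -> str:
    """Clear sections, bulleted rules, an explicit 'if' branch, and a delimited input."""
    bullets = "\n".join(f"- {rule}" for rule in rules)
    return (
        f"### Task\n{task}\n\n"
        f"### Rules\n{bullets}\n"
        f"- If the document does not contain the answer, reply exactly: {fallback}\n\n"
        f'### Document\n"""\n{document}\n"""'
    )


print(formatted_prompt(
    task="What is the warranty period for the X200 blender?",
    rules=["Answer in one sentence.", "Quote the relevant line from the document."],
    document="(paste the product manual here)",
    fallback="NOT FOUND",
))
```

A fixed fallback phrase gives downstream code something exact to check for when the answer is missing.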

Step 5: Always Opt for Customization

Whether you are generating software instructions for IT engineers, a sales email for SDRs, or a blog post for an e-commerce marketing team, prompts should be specific enough to allow customization at every stage. By providing words and phrases tailored to the audience group you are targeting, you can help your Generative AI tool deliver faster and more coherent responses. You could customize prompts by age, gender, region, occupation, and other classifiers, provided they are well represented in the LLM's training data.
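Customization can be handled with a template whose audience fields are filled in per segment. This sketch uses Python's standard `string.Template`; the field names and the sample sales-email task are hypothetical.

```python
from string import Template

EMAIL_TEMPLATE = Template(
    "Write a $length-word sales email for $role professionals in $region.\n"
    "Tone: $tone. Mention this product benefit: $benefit.\n"
    "Avoid these words: $banned."
)


def personalize(audience: dict[str, str]) -> str:
    """Fill the template; substitute() raises KeyError if a field is missing."""
    return EMAIL_TEMPLATE.substitute(audience)


print(personalize({
    "length": "150",
    "role": "IT operations",
    "region": "Europe",
    "tone": "concise and friendly",
    "benefit": "faster onboarding",
    "banned": "revolutionary, synergy",
}))
```

Using `substitute()` rather than `safe_substitute()` means a segment with a missing field fails loudly instead of sending a half-filled prompt.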

Step 6: Test, test, and more tests

Thankfully, many prompt generators streamline the process of writing prompts for you. This means your prompt engineering cycle can start from a sequential set of presets or templated query builders and get off to a flying start. The time saved in writing prompts can go into testing the prompt sequences, and each iteration can be refined for better-quality responses in the industry use cases you are targeting with your new Generative AI investments. These tests also help you reduce AI bias and improve the overall quality of prompt responses.
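Testing prompts can start as simply as scoring each prompt variant on the same small set of cases. In this sketch, `model` is any function from prompt to reply (a stand-in in the example), and the scoring rule, a case-insensitive check that the expected answer appears in the reply, is a deliberately crude assumption that real evaluations usually replace with stricter checks.

```python
from typing import Callable

Model = Callable[[str], str]  # any function that maps a prompt to a reply


def pass_rate(model: Model, template: str, cases: list[tuple[str, str]]) -> float:
    """Share of cases whose reply contains the expected answer (case-insensitive)."""
    if not cases:
        raise ValueError("need at least one test case")
    hits = sum(
        expected.lower() in model(template.replace("{input}", text)).lower()
        for text, expected in cases
    )
    return hits / len(cases)


def best_variant(model: Model, templates: list[str], cases: list[tuple[str, str]]) -> str:
    """Return the template with the highest pass rate on the same cases."""
    return max(templates, key=lambda t: pass_rate(model, t, cases))


def fake_model(prompt: str) -> str:
    """Stand-in for a real LLM: only answers when the prompt starts with 'Classify'."""
    return "positive" if prompt.startswith("Classify") else "not sure"


cases = [("Love it!", "positive"), ("Works great.", "positive")]
variants = ["What do you think of this? {input}", "Classify the sentiment: {input}"]
print(best_variant(fake_model, variants, cases))
```

Running every variant against the same fixed cases is what makes the scores comparable from one iteration to the next.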

Why Promote Jobs Linked to Prompt Engineering?

AI prompt engineering is the number one hack for productivity. Yes, AI is a hot topic today, but can businesses benefit from having skilled prompt engineers?

Raghu Ravinutala, CEO and co-founder of Yellow.ai, says, “It’s not a surprise that AI is a hot topic for World Productivity Day, and we can only expect to see this continue to rise over the next few years, particularly in the area of customer experience. AI will always serve as a powerful enabler for humans, enhancing productivity and augmenting capabilities. It assists in streamlining processes, improving decision-making, and accelerating innovation across various industries. More tactically, AI can also empower customer support agents with generative AI-powered summarizations where agents can get the entire context and current status of a query without the customer explaining the issue multiple times. This can make human agents 50 percent more productive and reduce operational costs by 60 percent, as we have recorded at Yellow.ai. From an employee experience perspective, incorporating automation helps take the load off of them by eliminating redundant tasks and focusing on strategic and high-value ones. It can help achieve 80% of employee queries being self-served and boost employee productivity by 30 percent. As companies consider employee productivity, they should actively look for ways to leverage AI to support their employees.”

Considered part of the ever-growing family of natural language processing (NLP) concepts, prompt engineering is essential knowledge for accomplishing tasks with Gen AI. Today, it is changing the way programmers build conversational AI models using large language models (LLMs).

The rise of generative Artificial Intelligence (Gen AI) has completely disrupted the way AI programming teams use LLMs to write software and create new apps. Curious analysts have already begun researching the role of prompt engineers in AI programming projects and whether showcasing prompt techniques can actually land you a job in the AI market. With everything changing so quickly in the Gen AI world, it is important to grasp what lies ahead in the field of AI-generated content.

So, keeping a tab on prompt engineering is the first thing you should do to get started with AI-based applications for your business.

Benefits of Learning Prompt Engineering in 2023

The biggest advantage of learning prompt engineering is the ability to scale content generation in real time. Teams may save up to 90 percent of their content generation time while maintaining quality and authoritativeness. That matters to businesses that want to scale their AI game and let gen AI platforms take ownership of the responses they need to stay competitive.

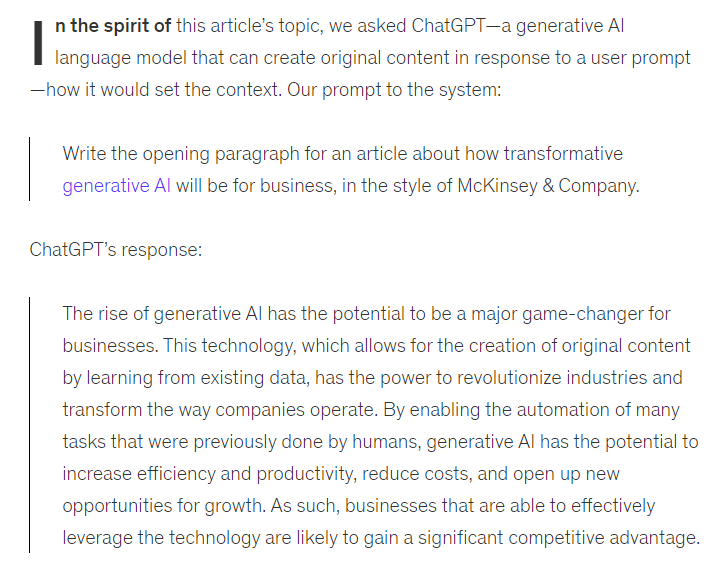

The second advantage is the ability to generate enriching chat conversations with superior control and responsiveness. Engineers can steer LLMs through complex chat conversations without slipping on fact-checking and accuracy audits. For instance, here is the response ChatGPT gave when McKinsey’s prompt engineering team asked the generative AI tool to write an article based on its business style guide.

This response was generated in December 2022, within weeks of ChatGPT’s launch. Since then, ChatGPT has received numerous upgrades, including the introduction of GPT-4, and AI engineers are already eyeing GPT-5 and GPT-6.

Customization of generative AI responses, backed by human supervision, holds the key to succeeding with ChatGPT and other precision-based AI models. If done right, with accurate context setting and expert prompters in the lead, your investments in generative AI could pay off quickly and for a long time.

Another advantage is the speed of prompt integrations. Many software companies are testing copilot connections with Gen AI models and tools such as ChatGPT, DALL-E, Midjourney, and GitHub Copilot. With AI-assisted coding modules, prompt engineers can quickly integrate their existing code with Gen AI tools for faster outcomes. Auto-generated code, bug identification, and removal of redundant lines of code are useful in software and application development projects. Copilot-enabled prompt engineering can save programmers time and resources by automating repetitive tasks.

So, if you want your business teams to align with the new trends in generative AI modeling, and give yourself a competitive edge in the market, starting with a prompt engineering team is the first thing you could do right now. With faster integrations and training on LLMs, prompt engineering would provide you enough scope to align your AI goals with your current and upcoming business use case scenarios.

Getting Started with Prompt Engineering: Skills to Master in the First 6 Months

Having a sound knowledge of how AI and machine learning techniques work behind the scenes is a great asset when you start with prompt engineering. However, many non-technical prompt writers, sometimes called prompt artists, are also becoming quite resourceful at generating creative responses from ChatGPT, DALL-E, and other tools. We have compiled a list of the top skills you would need in your prompt engineering team members.

- Tools and techniques

- AI models

- Communication

- Subject matter expertise

- Logical reasoning

- Critical thinking

- Data-driven approach

- Multi-lingual writing and reading skills

- Creativity

- Editing

Watching Prompt Engineering in Action

AI prompt engineering solutions are flooding the market. Almost every industry now has a fairly clear idea about the role of AI prompts and how these could transform their operations.

We chose these real-world scenarios where the practical use of generative AI using prompts is most widespread in 2023:

Personalized conversations: AI models use customer data from CRMs and CDPs to provide highly contextual, personalized recommendations, chats, and messages across the web, social media, email, and the metaverse. Guided by well-designed prompts, these solutions can engage audiences of all age groups and locations, creating new kinds of interactions based on behavior and preferences.

Content audit: AI content moderation tools can identify spurious information that can harm society. They can label adult content, fake news, and deepfake videos shared on social media or news channels, and provide captions and audit reports on the original status and source of such information.

We will cover more real-world applications of AI prompt engineering solutions in our upcoming articles.

For today, that’s a wrap.

Explore the largest gallery of ChatGPT and Generative AI-related guest posts written by business leaders. To participate, please write to us at news@martechseries.com
