LLM-powered EUREKA Co-pilots Zero Shot Reward Generation

NVIDIA

AI-assisted robots can now perform complex hand movements, such as the legendary pen-spinning tricks. AI researchers, guided by NVIDIA and UPenn, developed EUREKA, a universal reward design and recognition algorithm built using coding Large Language Models (LLMs).

What is EUREKA?

EUREKA stands for “Evolution-driven Universal REward Kit for Agent.”

EUREKA taps the power of LLMs and in-context evolutionary search functions to achieve high-grade human-level reward design to control a wide range of robotic tasks and simulations. The AI development team behind EUREKA AI created the algorithm for 10 robotic iterations capable of performing 29 different tasks. These tasks span across two specific open-sourced environments. These are:

- NVIDIA Isaac Gym (Isaac)

- Bidexterous Manipulation (Dexterity)

Why is EUREKA AI Coding LLM Important in Modern Robotics?

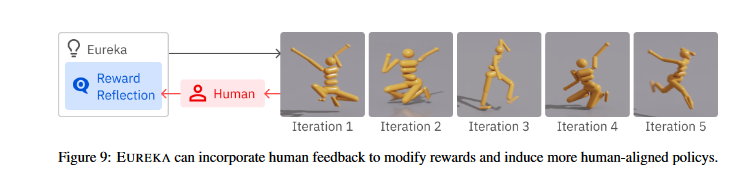

EUREKA is built with minimal intervention from human programming or prompt engineering. In recent months, prompt engineering-based AI simulations have gained massive coverage in generative AI innovations, particularly those involving GPTs. EUREKA acquires unmodified open-source code (unmodified RL without pre-defined reward templates) and language task descriptions to create a zero-shot reward design and recognition model. The coding LLM iterates between the different AI ML techniques such as GPU-accelerated reward evaluation, evolutionary reward search, and reward reflection. All these techniques come together to improve reward outputs from activities that require the dexterity of the robotic arms. For example, the EUREKA AI developers managed to reinforce curriculum learning to solve the dexterous pen-spinning challenge for the first time in the robotic space. Further improvement was possible through human feedback incorporated with human reward initialization and textual feedback approach.

EUREKA AI’s coding LLM powers can be tapped to learn and perform complex low-level manipulation tasks such as spinning a pen using fingers.

The AI model uses zero-shot generation and code writing performance of OpenAI’s GPT-4, to achieve high-grade reward outputs. According to the research paper, EUREKA can outperform human artists in 83% of tasks. This level of precision is possible by leveraging a diverse suite of RL structuring that includes 29 open-source environments and 10 robotic morphologies.

The importance of running EUREKA AI for complex low-level manipulation tasks can be understood from the fact that fewer RL-based reward designs deliver sub-optimal results with unintended behaviors. In contrast, EUREKA’s universal reward programming algorithm can quickly scale to perform a wide spectrum of robotic tasks without human intervention and prompt engineering templates.

Despite minimal human supervision, the reward model can accomplish tasks with complete adherence to safety and alignment policies.

Benefits of using EUREKA AI LLM

- EUREKA outperforms human rewards; and, either matches or exceeds human-led results on most Isaac tasks.

- EUREKA learns consistently and eventually surpasses human performance with novel evolutionary optimization.

- EUREKA can discover novel reward design principles that may run counter to human intuition.

- EUREKA utilizes the reward reflection to perform targeted reward editing.

- EUREKA demonstrates high levels of AI applicability to advanced policy learning approaches.

Top AI ML News: Advancing AI Security Together The Mission of OWASP AI Exchange

[To share your insights with us, please write to sghosh@martechseries.com]

Eureka: Human-Level Reward Design via Coding Large Language Models

Authors: Yecheng Jason Ma, William Liang, Guanzhi Wang, De-An Huang, Osbert Bastani, Dinesh Jayaraman, Yuke Zhu, Linxi Fan, Anima Anandkumar

Comments are closed.