Policymakers Show Deep Interest in Artificial Intelligence; AI Mentions in Legislative Proceedings Have Jumped 6.5 Times since 2016

AI policies are finally taking shape in the legislative proceedings of many countries, aiming to curb AI malfunctions and the militarization of AI against nation states. According to a report, mentions of the phrase “Artificial Intelligence” or AI in legislative proceedings have jumped nearly 6.5 times since 2016. It was reported that European Union tech regulation chief Margrethe Vestager has agreed to a political deal on AI legislation, which is speculated to be the world’s first major artificial intelligence (AI) legislation. This legislation may closely follow the passing of the EU’s Artificial Intelligence Act. Even OpenAI’s CEO has raised concerns about AI taking a Terminator-style posture in which AI systems override human supervision.

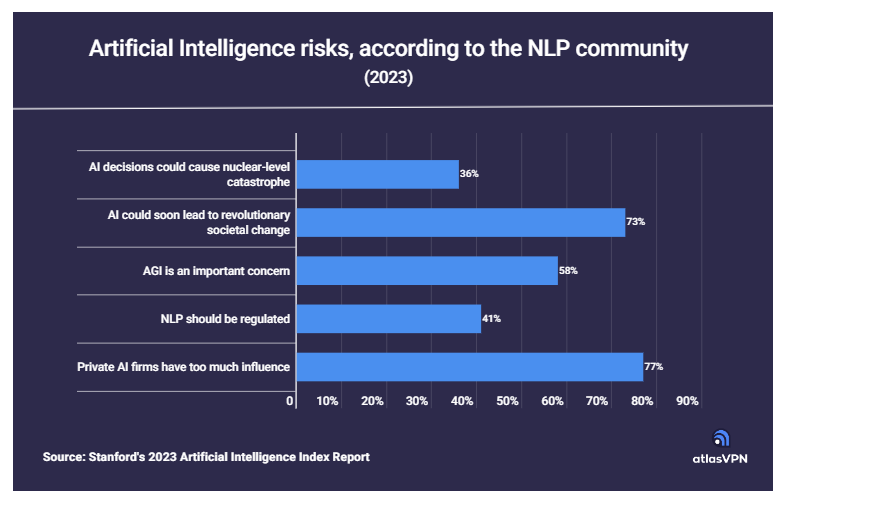

One possible trigger for the rise of AI policies across the world is the fear of AI taking control of nuclear weapons held by rogue states.

As AI investments continue to surge past old records, the number of organizations that have actually benefitted from deploying AI for cost-cutting and workforce optimization is also growing. However, it is the way leading countries are taking AI bills and charters to their legislatures that makes the industry more dynamic than ever before. The US has, by far, passed or proposed the most AI-related federal bills in Congress. The country passed 10% of all federal AI bills into law in 2022, compared to a meagre 2% in 2021. It is clear that the US wants a closer view of, and a tighter grip on, AI-related policy, and this has prompted regulators to craft consistent AI policies for all players.

Stanford’s report on AI policy highlights the steps taken by Australia, Brazil, Japan, the UK and Zambia. Even Australia, a nation that lags in its pace of adoption of AI and related technologies, has emerged as a top lawmaker in this domain. The Human Technology Institute (HTI) at the University of Technology Sydney (UTS) would work collaboratively with civil society, industry and government to address AI regulation in Australia.

In another independent analysis of the top AI technologies, the OECD used Google Trends to aggregate AI-related developments across nations and identify the top topics of interest that are shaping AI policies. For example, significant AI developments and policy-related activities are happening in the US, Italy, France, the Netherlands and Korea.

According to the OECD’s Google Trends analysis, these are the top AI technologies discussed across nations:

- Quantum machine learning in the United States

- Intelligent transportation systems in Italy, France, and the Netherlands

- Natural language processing in Korea

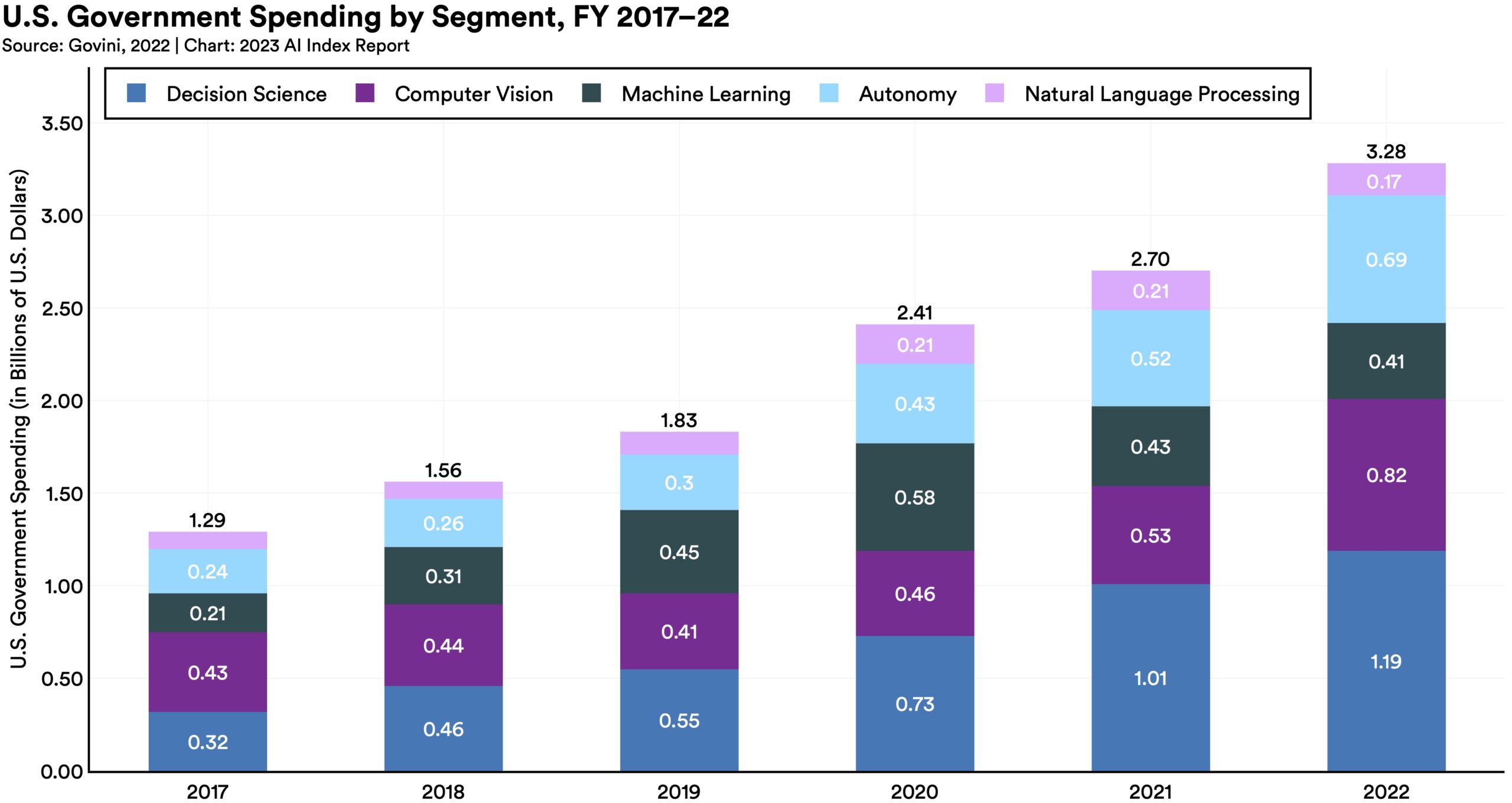

In terms of direct investments into AI and related fields, the US government emerged as the top investor.

In 2023, the US government spent 2.5 times more on AI-related contracts and solutions than in 2017. With AI fast becoming a legal matter as well, it is clear that US lawmakers are finally taking active steps to regulate AI and ML development and deployment across industries. According to Stanford, 110 AI-related legal cases were reported across the US in 2022, most of them arising from intellectual property, contractual and civil rights disputes.

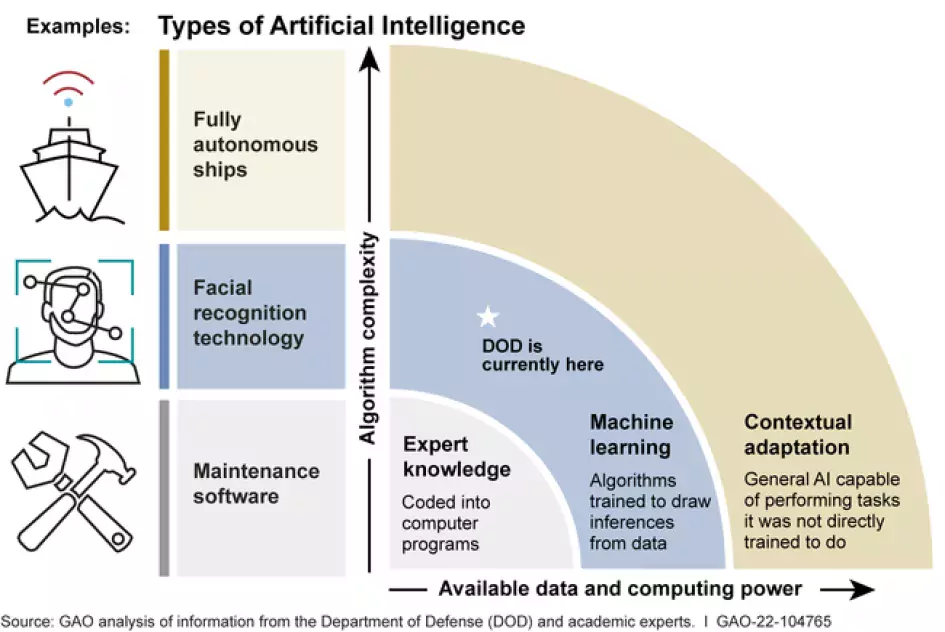

This article was published a few months before ChatGPT was released in 2022. It clearly shows that defense analysts were already able to highlight the role of generative AI tools in transforming US national security.

With so much happening every day, it is very hard to predict what will come up next for national security analysts. The raw power of AI could prove too much to handle if a full-fledged war breaks out between nations. Both the underuse and the overuse of AI are problems, and assigning liability for AI outcomes could undermine the innovation and incentives associated with developing and working with such a fine technology.

It is worth mentioning that Italy, a G7 member, was the first country to block ChatGPT, taking it offline last month to investigate a potential breach of personal data rules. Italy lifted the ban last Friday, but the move has inspired fellow European privacy regulators to take a closer look at what ChatGPT really does with IP and personal data and to launch independent probes.

The world’s largest countries by population, China and India, are also fast catching up with AI developments. While China has shown a keen interest in regulating AI with a bit of flexibility, India is still playing a wait-and-watch game.

It has been reported that Chinese policymakers are enacting a new bill that would require all AI companies to submit a complete security assessment of their products and services before public release. The Cyberspace Administration of China has reportedly said that AI-generated content should “reflect the country’s ‘core socialist values’ and not encourage subversion of state power.”

It is still unclear whether the Chinese policy on AI regulation will arrive this year or the next. However, one thing is clear: Chinese AI companies are on par with global machine learning developers, and big organizations such as Alibaba, JD.com and Baidu have already released a flurry of new so-called generative AI products that could soon compete with Microsoft-backed ChatGPT and Google’s Bard.

By all means, drafting a powerful and universally accepted AI policy would have a far more drastic effect on AI and machine learning technology than the self-induced AI Winter that 1,100+ business leaders proposed earlier this year. If global leaders can come together on one platform and commit to regulating AI through national and state-level policies, it could save AI users from getting into legal tangles with artists, generative AI tools and third-party promoters.
