Big Data: Size Matters

In simple terms, we explain what Big Data is, where it is used, and who works with it and how. The term Big Data is more than ten years old, but there is still a lot of confusion around it.

Let's look at what “Big Data” is, where it comes from and where it is used, who data analysts are, and what they do.

Three Signs of Big Data

Traditionally, Big Data is characterized by three attributes (the so-called “three Vs”):

Volume – The term Big Data implies a large amount of information (terabytes and petabytes). It is important to understand that, for a particular business case, usually only a small part of the total volume is valuable, not all of it. However, that valuable part cannot be identified in advance without analysis.
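Because the valuable part cannot be identified without looking at the data, a common pattern is to scan the full volume in manageable chunks and keep only the rows that matter. Below is a minimal standard-library sketch, assuming a CSV source; the column names, the sample rows, and the relevance rule (customers from one region) are hypothetical.

```python
import csv
import io
from itertools import islice
from typing import Callable, Iterable, Iterator, TextIO


def read_in_chunks(rows: Iterable[dict], chunk_size: int) -> Iterator[list]:
    """Yield lists of at most chunk_size rows, so only one chunk is held at a time."""
    iterator = iter(rows)
    while True:
        chunk = list(islice(iterator, chunk_size))
        if not chunk:
            return
        yield chunk


def filter_relevant(source: TextIO, is_relevant: Callable[[dict], bool],
                    chunk_size: int = 10_000) -> list:
    """Scan the whole CSV source chunk by chunk, keeping only rows the predicate accepts."""
    kept = []
    for chunk in read_in_chunks(csv.DictReader(source), chunk_size):
        kept.extend(row for row in chunk if is_relevant(row))
    return kept


# Hypothetical data and rule: only customers from one region matter for this business case.
data = io.StringIO("id,region,spend\n1,north,120\n2,south,80\n3,north,45\n4,east,300\n")
subset = filter_relevant(data, lambda row: row["region"] == "north", chunk_size=2)
print(f"kept {len(subset)} rows")
```

With a real file, the same function would be called on `open("customers.csv", newline="", encoding="utf-8")` (a hypothetical file name). At truly large scale this scanning is usually delegated to a distributed engine rather than a single Python process.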

Velocity – Data is updated regularly and requires continuous processing. Updates usually also mean that the volume grows.
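Continuous processing often means updating results incrementally instead of recomputing them from scratch each time new data arrives. As one illustration, Welford's online algorithm keeps a running mean and variance in constant memory. The purchase amounts below are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class RunningStats:
    """Mean and variance updated one value at a time (Welford's online algorithm)."""
    count: int = 0
    mean: float = 0.0
    _m2: float = 0.0  # running sum of squared deviations from the current mean

    def update(self, value: float) -> None:
        self.count += 1
        delta = value - self.mean
        self.mean += delta / self.count
        self._m2 += delta * (value - self.mean)

    @property
    def variance(self) -> float:
        # Sample variance; undefined for fewer than two values, reported as 0.0 here.
        return self._m2 / (self.count - 1) if self.count > 1 else 0.0

    @property
    def std(self) -> float:
        return math.sqrt(self.variance)


stats = RunningStats()
for amount in [120.0, 80.0, 45.0, 300.0]:  # e.g. purchase amounts arriving over time
    stats.update(amount)
print(f"n={stats.count}, mean={stats.mean:.2f}, std={stats.std:.2f}")
```

Each new value costs O(1) work, so the statistics stay current however fast the data grows.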

Variety – Data can come in various formats and may be only partially structured, or completely raw and heterogeneous. Keep in mind that some of the data is almost always unreliable or irrelevant at the time of the study.
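In practice, handling variety means bringing records of different shapes into one common schema and setting aside the ones that cannot be trusted. Below is a minimal sketch, assuming three hypothetical input formats (a JSON object, a "name,email" CSV line, and free text such as "Name <email>"). The email check is a rough heuristic, not full validation.

```python
import csv
import json
import re
from typing import Optional

# Rough sanity check for an email address, not a full RFC 5322 validator.
EMAIL_RE = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")
NAME_EMAIL_RE = re.compile(r"^(.*?)\s*<([^>]+)>$")  # e.g. "Jane Doe <jane@example.com>"


def parse_record(raw: str) -> Optional[dict]:
    """Normalize one raw record in an assumed format to {'name', 'email'}, or None if unusable."""
    raw = raw.strip()
    if raw.startswith("{"):  # JSON object
        try:
            obj = json.loads(raw)
        except json.JSONDecodeError:
            return None
        name, email = obj.get("name"), obj.get("email")
    elif "," in raw:  # CSV line "name,email"
        fields = next(csv.reader([raw]))
        if len(fields) != 2:
            return None
        name, email = fields
    else:  # free text "Name <email>"
        match = NAME_EMAIL_RE.match(raw)
        if match is None:
            return None
        name, email = match.groups()
    # Unreliable record: missing, non-text, or malformed fields.
    if not (isinstance(name, str) and isinstance(email, str)):
        return None
    if not name.strip() or not EMAIL_RE.match(email.strip()):
        return None
    return {"name": name.strip(), "email": email.strip().lower()}


raw_records = [
    '{"name": "Ann Lee", "email": "Ann@Example.com"}',
    "Bob Stone,bob@example.org",
    "Carol King <carol@example.net>",
    "Dan,not-an-email",
    '{"name": "Eve"',  # truncated JSON
]
parsed = [parse_record(r) for r in raw_records]
clean = [r for r in parsed if r is not None]
print(f"{len(clean)} of {len(raw_records)} records usable")
```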

As a simple example, imagine a table of a large company's customers with millions of rows. The columns are customer attributes (full name, gender, date, address, phone number, etc.), with one row per customer. The information is constantly updated: customers come and go, and records are corrected.
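The constant updates in this example amount to applying a stream of change events to the table. Below is a minimal in-memory sketch. The column names and the `add`/`update`/`remove` event format are hypothetical, and a real system would apply such changes inside a database rather than a Python dict.

```python
from typing import Any, Dict, Optional

# Hypothetical customer table keyed by customer id: one client, one row.
customers: Dict[int, Dict[str, Any]] = {
    1: {"full_name": "Ann Lee", "city": "Boston", "phone": "555-0101"},
    2: {"full_name": "Bob Stone", "city": "Denver", "phone": "555-0102"},
}


def apply_event(table: Dict[int, Dict[str, Any]], kind: str, key: int,
                fields: Optional[Dict[str, Any]] = None) -> None:
    """Apply one change event: a new customer, a corrected record, or a departure."""
    if kind == "add":
        table[key] = dict(fields or {})
    elif kind == "update":
        table[key].update(fields or {})  # raises KeyError for an unknown customer
    elif kind == "remove":
        table.pop(key, None)  # removing an absent customer is a no-op
    else:
        raise ValueError(f"unknown event type: {kind!r}")


# Hypothetical stream of changes arriving over time.
events = [
    ("add", 3, {"full_name": "Carol King", "city": "Austin", "phone": "555-0103"}),
    ("update", 1, {"city": "Chicago"}),
    ("remove", 2, None),
]
for kind, key, fields in events:
    apply_event(customers, kind, key, fields)

print(sorted(customers))
```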

But tables are just one of the simplest ways to represent information. In practice, Big Data usually comes in far more varied and less structured forms.

A large volume requires special storage infrastructure, such as distributed file systems. Database management systems (relational ones as well as NoSQL stores) and distributed processing engines are used to work with such data. This requires the analyst to be able to write the appropriate database queries.
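Such queries are typically written in SQL. Below is a minimal sketch that uses Python's built-in `sqlite3` module with an in-memory SQLite database standing in for a production DBMS. The `customers`/`orders` schema and the rows are hypothetical.

```python
import sqlite3

# In-memory SQLite database as a stand-in for a real DBMS (an assumption for illustration).
conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (id INTEGER PRIMARY KEY, full_name TEXT, city TEXT);
    CREATE TABLE orders (id INTEGER PRIMARY KEY,
                         customer_id INTEGER REFERENCES customers(id),
                         amount REAL);
    INSERT INTO customers VALUES (1, 'Ann Lee', 'Boston'), (2, 'Bob Stone', 'Denver'),
                                 (3, 'Carol King', 'Boston');
    INSERT INTO orders (customer_id, amount) VALUES (1, 120.0), (1, 80.0), (2, 45.0), (3, 300.0);
""")

# Extract only what the business question needs: customers and total spend per city.
query = """
    SELECT c.city, COUNT(DISTINCT c.id) AS customers, SUM(o.amount) AS total_spend
    FROM customers AS c
    JOIN orders AS o ON o.customer_id = c.id
    GROUP BY c.city
    ORDER BY total_spend DESC
"""
rows = conn.execute(query).fetchall()
for city, n_customers, total in rows:
    print(city, n_customers, total)
conn.close()
```

The aggregation runs inside the database, so only a small summary reaches the analyst's machine. This matters more as the tables grow.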


Where Is Big Data Used?

Big Data tools are used in many areas of modern life. Here are some of the most popular, with examples of business tasks:

- Search results (optimizing the displayed links based on information about the user, their location, and previous search queries).

- Online shopping (increasing conversion).

- Recommender systems (genre classification of films and music).

- Voice assistants (speech recognition, responding to requests).

- Digital services (spam filtering in email, personalized news feeds).

- Social networks (personalized advertising).

- Games (in-game purchases, game training).

- Finance (lending decisions at banks, trading).

- Sales (forecasting inventory levels to reduce costs).

- Security systems (recognizing objects in video camera footage).

- Self-driving vehicles (machine vision).

- Medicine (early-stage disease diagnosis).

- Urban infrastructure (preventing traffic jams, predicting passenger flows in public transport).

- Meteorology (weather forecasting).

- Manufacturing (assembly line optimization, risk reduction).

- Scientific research (genome decoding, processing astronomical data and satellite images).

- Processing data from cash register fiscal drives (forecasting the value of goods).

For each of these tasks, you can find example solutions built with Data Science and Machine Learning technologies. The amount of data available shapes both the solution strategy and its accuracy.

What Do People Do in Big Data?

Big Data analysis lies at the intersection of three fields:

- Computer Science/IT

- Mathematics and Statistics

- Domain knowledge of the area being analyzed

A data analyst is therefore an interdisciplinary specialist with knowledge of Mathematics, Programming, and Databases. The example tasks above also show that an analyst must be able to get up to speed quickly in a new subject area and must have good communication skills. It is especially important to be able to extract analytically sound results that are useful to the business, and to visualize and present these findings correctly.

A study typically follows roughly this sequence of steps:

- Working with databases (structure, logic).

- Extracting the necessary information (writing SQL queries).

- Data preprocessing.

- Data transformation.

- Applying statistical methods.

- Searching for patterns.

- Data visualization (identifying anomalies, visual presentation for the business).

- Searching for an answer: formulating and testing hypotheses.

- Implementing the solution in the business process.
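The middle steps above (preprocessing, transformation, statistics, and anomaly search) can be sketched end to end on a toy dataset. This is a minimal standard-library illustration. The column names and values are made up for the example, and the anomaly rule is the median/MAD "modified z-score" with the commonly used 3.5 cut-off, chosen here as one simple option among many.

```python
import statistics
from datetime import date

# Hypothetical raw extract: daily sales as returned by a query, with typical defects.
raw_rows = [
    {"day": "2024-03-01", "sales": "102"},
    {"day": "2024-03-02", "sales": " 98 "},
    {"day": "2024-03-03", "sales": ""},  # missing value
    {"day": "2024-03-04", "sales": "105"},
    {"day": "2024-03-05", "sales": "97"},
    {"day": "2024-03-06", "sales": "400"},  # suspicious spike
    {"day": "2024-03-07", "sales": "101"},
]


def preprocess(rows):
    """Drop rows with a missing sales value and trim whitespace."""
    return [{k: v.strip() for k, v in r.items()} for r in rows if r["sales"].strip()]


def convert(rows):
    """Convert text fields to proper types: (date, float) pairs."""
    return [(date.fromisoformat(r["day"]), float(r["sales"])) for r in rows]


def find_anomalies(series, threshold=3.5):
    """Flag points whose modified z-score (based on median and MAD) exceeds threshold."""
    values = [v for _, v in series]
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # no spread to measure against; this sketch flags nothing
    return [(d, v) for d, v in series if 0.6745 * abs(v - median) / mad > threshold]


series = convert(preprocess(raw_rows))
anomalies = find_anomalies(series)
print(f"{len(series)} clean rows, median={statistics.median(v for _, v in series)}")
print("anomalies:", anomalies)
```

A median-based rule is used here because a single large spike inflates the mean and standard deviation, which can hide the very outlier a plain z-score is looking for.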

The outcome is a concise report that visualizes the results, often in the form of an interactive panel (dashboard). On such a dashboard, newly processed data is displayed in a form that is easy to take in.


Key Analytics Skills and Tools

The key skills analysts use, and the tools that go with them, are usually the following:

- Extracting data from data sources (MS SQL, MySQL, NoSQL, Hadoop, Spark).

- Data Processing (Python, R, Scala, Java).

- Visualization (Plotly, Tableau, Qlik).

- Studying the criteria of the business task.

- Formulating hypotheses.

The choice of programming language is dictated by existing code and the required speed of the final solution. The language, in turn, determines the development environment and the data analysis tools available.

Many analysts use Python as their programming language. In that case, the pandas library is usually used for data analysis. When working in a team, the de facto standard format for storing and sharing hypotheses is the .ipynb notebook, which is usually opened and run in Jupyter. This format lets you combine cells with program code, text descriptions, formulas, and images.
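Under the hood, an .ipynb file is a JSON document (the Jupyter nbformat, version 4 here) that holds a list of cells. Below is a minimal sketch that builds one by hand with the standard library to show how code, text, and formulas sit side by side. In practice, notebooks are created in Jupyter itself or with the `nbformat` package, and the cell contents here are hypothetical.

```python
import json

# A minimal notebook in the nbformat 4 JSON layout: a markdown cell stating a hypothesis
# (text plus a LaTeX formula) followed by a code cell that checks it.
notebook = {
    "cells": [
        {
            "cell_type": "markdown",
            "metadata": {},
            "source": "## Hypothesis\nAverage order value grew: $\\bar{x}_{new} > \\bar{x}_{old}$",
        },
        {
            "cell_type": "code",
            "execution_count": None,
            "metadata": {},
            "outputs": [],
            "source": "old, new = [40, 55, 47], [52, 61, 58]\n"
                      "print(sum(new) / len(new) > sum(old) / len(old))",
        },
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 4,
}

# Saving this text to a file with an .ipynb extension yields a notebook Jupyter can open.
text = json.dumps(notebook, indent=1)
print(text[:60])
```

Because the format is plain JSON, notebooks can be versioned, diffed, and generated programmatically, which is part of why they work well for sharing hypotheses in a team.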

The choice of tools depends on the case and on the customer's requirements for the accuracy, reliability, and execution speed of the algorithm. It is also important to be able to explain every component of the algorithm, from data input to the final output.

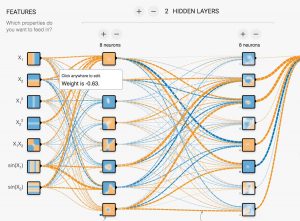

For example, image processing tasks more often rely on neural network tools such as TensorFlow or one of a dozen other deep learning frameworks. When developing financial instruments, however, neural network solutions may look “dangerous”, because it is difficult to trace how the model arrived at its result.
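A model whose output is a sum of per-feature terms, by contrast, can be traced step by step. Below is a minimal sketch of such an additive (linear) score. The feature names, weights, and bias are hypothetical and were not fitted on any data. The point is only that every contribution to the result can be listed and checked, which is much harder with a deep neural network.

```python
from typing import Dict, Tuple

# Hypothetical credit-scoring weights; a real model would be fitted on historical data.
WEIGHTS = {"income_k": 0.8, "debt_ratio": -50.0, "late_payments": -15.0}
BIAS = 20.0


def explain_score(applicant: Dict[str, float]) -> Tuple[float, Dict[str, float]]:
    """Return the score and each feature's additive contribution to it."""
    contributions = {name: w * applicant[name] for name, w in WEIGHTS.items()}
    return BIAS + sum(contributions.values()), contributions


score, parts = explain_score({"income_k": 60.0, "debt_ratio": 0.4, "late_payments": 1.0})
# List contributions from most to least influential.
for name, value in sorted(parts.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{name:>14}: {value:+.1f}")
print(f"{'bias':>14}: {BIAS:+.1f}")
print(f"{'score':>14}: {score:.1f}")
```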

Choosing the analysis model and its architecture is no less challenging than the computation itself. This is why automated machine learning (AutoML) has been developing recently. This approach is unlikely to reduce the need for data analysts, but it will reduce the number of routine operations.
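At its core, automated model selection tries candidate models and keeps the one that performs best on held-out data. Below is a deliberately tiny sketch of that selection loop in plain Python. It assumes two hypothetical candidates (a mean baseline and one-feature least-squares regression), uses mean squared error as the metric, and runs on made-up data. Real AutoML tools also search hyperparameters, feature processing, and architectures.

```python
from statistics import mean


def fit_mean(xs, ys):
    """Baseline: always predict the training mean."""
    m = mean(ys)
    return lambda x: m


def fit_linear(xs, ys):
    """Ordinary least squares for one feature: y = a + b * x."""
    mx, my = mean(xs), mean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    a = my - b * mx
    return lambda x: a + b * x


def mse(model, xs, ys):
    """Mean squared error of a fitted model on (xs, ys)."""
    return mean((model(x) - y) ** 2 for x, y in zip(xs, ys))


def select_model(candidates, train, valid):
    """Fit every candidate on train and keep the one with the lowest validation error."""
    scores = {name: mse(fit(*train), *valid) for name, fit in candidates.items()}
    best = min(scores, key=scores.get)
    return best, scores


# Made-up data with a roughly linear trend, split into training and validation parts.
xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [2.1, 3.9, 6.2, 8.1, 9.8, 12.2, 13.9, 16.1]
train, valid = (xs[:6], ys[:6]), (xs[6:], ys[6:])
best, scores = select_model({"mean": fit_mean, "linear": fit_linear}, train, valid)
print(best, scores)
```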

How to Understand Big Data?

As the overview above shows, Big Data requires an analyst to have a large amount of knowledge from various fields. If you want to go deeper and cover all aspects of the subject systematically, study a Data Science roadmap.

Where to Start If You Want to Try Right Now but Have No Data?

Experienced analysts advise getting to know Kaggle early. It is a popular platform that hosts competitions in analyzing large volumes of data. Besides competitions with cash prizes for the winners, it offers .ipynb notebooks with ideas and solutions, as well as interesting datasets of various sizes.
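Once you have downloaded a dataset (many come as CSV files), a sensible first step is to check its size and how many values are missing. Below is a minimal standard-library sketch. The sample data is made up, and the file name in the comment is hypothetical.

```python
import csv
import io
from collections import Counter
from typing import TextIO


def profile_csv(f: TextIO) -> dict:
    """Count rows and empty cells per column: a quick first look at an unfamiliar dataset."""
    reader = csv.DictReader(f)
    missing = Counter()
    n_rows = 0
    for row in reader:
        n_rows += 1
        for column, value in row.items():
            if column is None:
                continue  # extra values beyond the header row; ignored in this sketch
            if value is None or value.strip() == "":
                missing[column] += 1
    return {"columns": reader.fieldnames, "rows": n_rows, "missing": dict(missing)}


# With a downloaded file (hypothetical name):
#   with open("train.csv", newline="", encoding="utf-8") as f:
#       print(profile_csv(f))
sample = io.StringIO("name,age,city\nAnn,34,Boston\nBob,,Denver\nCarol,29,\n")
report = profile_csv(sample)
print(report)
```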

