Introducing Microsoft’s AI Red Team And PyRIT

Introducing Microsoft’s AI Red Team

Microsoft aims to give the world’s businesses the knowledge and resources they need to innovate with AI ethically. Its release of PyRIT (Python Risk Identification Toolkit for generative AI) reflects the company’s continued commitment to democratizing AI security for its customers, partners, and peers, and builds on the AI red-teaming work it has done since 2019.


Red teaming AI systems is a multistep process. Microsoft’s AI Red Team is made up of experts in responsible AI, security, and adversarial machine learning. The team also draws on resources from across Microsoft, including the Office of Responsible AI, Microsoft’s cross-company program on AI Ethics and Effects in Engineering and Research (AETHER), and the Fairness Center in Microsoft Research. Microsoft has made red teaming part of its broader strategy to map AI risks, measure them, and develop scoped mitigations that reduce their impact.


The AI Red Team of Microsoft has battle-tested PyRIT

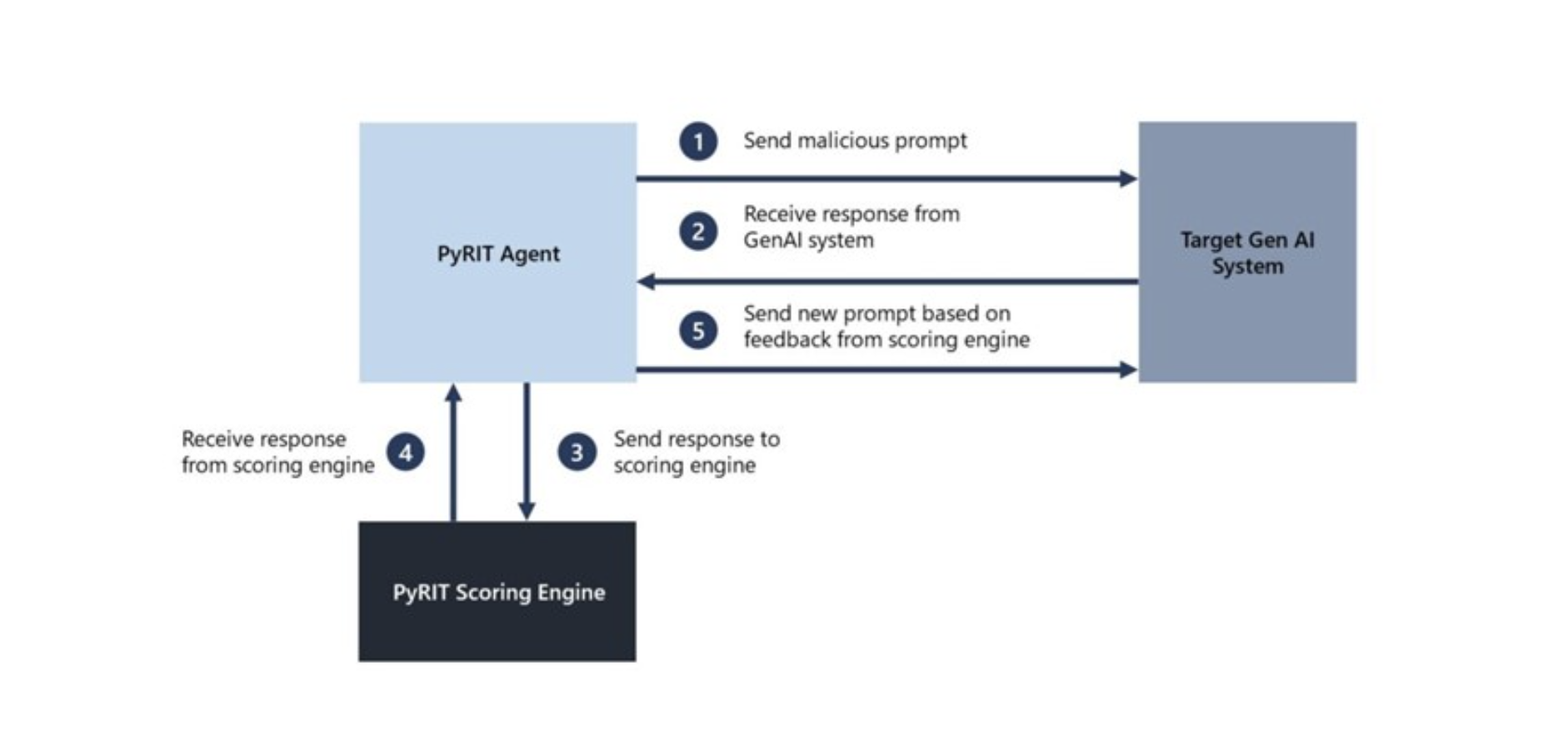

PyRIT has been battle-tested by Microsoft’s AI Red Team. It began in 2022 as a collection of standalone scripts, written when the team first started red teaming generative AI systems. The team then added features based on what it found while red teaming a range of generative AI systems and assessing their risks. Today, the Microsoft AI Red Team relies on PyRIT. The image above is from Microsoft.

PyRIT is not a replacement for manual red teaming of generative AI systems. Instead, it builds on an AI red teamer’s existing domain knowledge and automates the repetitive parts of the work. This helps security professionals identify potential risk hot spots so they can investigate them in depth. The security professional stays in full control of the strategy and execution of the AI red team operation. PyRIT supplies the automation code that takes the professional’s initial dataset of harmful prompts and uses an LLM endpoint to generate more harmful prompts.
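The seed-expansion step described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not PyRIT’s actual API. `fake_llm_rewrite` is a hypothetical offline stub standing in for a call to an LLM endpoint, and `expand_seed_prompts` is a hypothetical helper. Placeholder strings stand in for real harmful prompts.

```python
from typing import Callable, Iterable


# Hypothetical stand-in for an LLM endpoint call. A real version would ask a
# model to rewrite the prompt n different ways; this stub is deterministic so
# the sketch runs offline.
def fake_llm_rewrite(prompt: str, n: int) -> list[str]:
    return [f"{prompt} (variant {i + 1})" for i in range(n)]


def expand_seed_prompts(
    seeds: Iterable[str],
    rewrite: Callable[[str, int], list[str]],
    variants_per_seed: int = 3,
) -> list[str]:
    """Grow a red teamer's seed dataset by asking a model for variants.

    The red teamer still chooses the seeds and reviews the results; this only
    automates the repetitive rewriting step.
    """
    seen: set[str] = set()
    expanded: list[str] = []
    for seed in seeds:
        # Keep the original seed first, followed by its generated variants.
        for candidate in [seed, *rewrite(seed, variants_per_seed)]:
            if candidate not in seen:  # drop exact duplicates
                seen.add(candidate)
                expanded.append(candidate)
    return expanded


if __name__ == "__main__":
    for p in expand_seed_prompts(["seed prompt A", "seed prompt B"], fake_llm_rewrite):
        print(p)
```

The rewrite function is passed in as a parameter. This keeps the loop independent of any particular model client, so the stub could be swapped for a real endpoint call.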



Revolutionizing Red Team Strategies with AI Automation

1. Examining security and responsible AI risks simultaneously

The team found that, unlike red teaming classical software or AI systems, red teaming generative AI systems means probing for both security risks and responsible AI risks. Like security risks, responsible AI risks vary widely, from generating content with fairness issues to producing ungrounded or inaccurate content. An AI red teaming exercise must therefore assess both categories at once. In a typical generative AI application, app-specific logic processes the input prompt and passes it to the generative AI model, which may call additional skills, functions, or plugins. The app-specific logic then processes the model’s response and returns the generated content to the user.
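The application flow and the combined security and responsible AI check can be modeled with a toy sketch. Everything here is hypothetical: `GenAIApp`, the `CALL:` plugin convention, and the string markers in `assess` are illustrative placeholders, not a Microsoft or PyRIT interface. The point is only that each response passes through the whole pipeline and is then scored against both risk categories in the same pass.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical components mirroring the flow described above.
Plugin = Callable[[str], str]


@dataclass
class GenAIApp:
    model: Callable[[str], str]  # the generative AI model
    plugins: dict[str, Plugin] = field(default_factory=dict)

    def preprocess(self, prompt: str) -> str:
        # App-specific logic on the way in (templating, filtering, ...).
        return prompt.strip()

    def postprocess(self, raw: str) -> str:
        # App-specific logic on the way out, before content is returned.
        return raw.strip()

    def handle(self, user_prompt: str) -> str:
        prompt = self.preprocess(user_prompt)
        response = self.model(prompt)
        # Toy convention: a reply like "CALL:name:arg" invokes a plugin.
        if response.startswith("CALL:"):
            _, name, arg = response.split(":", 2)
            response = self.plugins[name](arg)
        return self.postprocess(response)


def assess(output: str) -> dict[str, bool]:
    """Score one output for both risk families at once (placeholder checks)."""
    return {
        "security": "SECRET" in output,            # e.g. leaked internal data
        "responsible_ai": "UNGROUNDED" in output,  # e.g. fabricated claim
    }
```

A usage sketch with a stub model that always routes to a lookup plugin:

```python
app = GenAIApp(model=lambda p: "CALL:lookup:" + p,
               plugins={"lookup": lambda q: f" result for {q} "})
print(app.handle("  hi "), assess(app.handle("  hi ")))
```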

2. Red teaming generative AI is more probabilistic than traditional red teaming.

Second, red teaming generative AI systems is more probabilistic than traditional red teaming. Running the same attack path several times against a traditional software system would likely produce similar results. Generative AI systems, however, have multiple layers of non-determinism, so the same input can produce different outputs. The variation can come from the app-specific logic, the generative AI model itself, the orchestrator that controls the system’s output, or extensibility features and plugins. It can even come from language, since small changes in wording can yield different results. Traditional software systems expose well-defined APIs and parameters that tools can examine during red teaming. The team found that generative AI systems instead call for a strategy that accounts for the probabilistic nature of their components.
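Because a single run proves little, a probabilistic approach repeats the same attack and estimates how often it fails. The sketch below makes two assumptions. `flaky_target` is a hypothetical target that returns an unsafe reply roughly 20% of the time, and `is_failure` is a placeholder scorer. Real scoring would be far richer.

```python
import random
from typing import Callable


def estimate_failure_rate(
    target: Callable[[str], str],
    prompt: str,
    is_failure: Callable[[str], bool],
    trials: int = 50,
) -> float:
    """Send the same prompt `trials` times and return the fraction of failures."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    failures = sum(1 for _ in range(trials) if is_failure(target(prompt)))
    return failures / trials


# Hypothetical non-deterministic target: unsafe about 20% of the time.
_rng = random.Random(0)  # seeded so the sketch is reproducible


def flaky_target(prompt: str) -> str:
    return "UNSAFE_REPLY" if _rng.random() < 0.2 else "refusal"


def is_failure(response: str) -> bool:
    # Placeholder scorer; a real one would classify the content itself.
    return response == "UNSAFE_REPLY"


if __name__ == "__main__":
    rate = estimate_failure_rate(flaky_target, "placeholder attack prompt", is_failure)
    print(f"estimated failure rate: {rate:.2f}")
```

A single attempt that happens to be refused would suggest the attack never works. Repeated trials expose the failure rate instead.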


3. Generative AI system architectures vary widely.

Finally, generative AI system architectures range from standalone applications to integrations in existing applications, and their inputs and outputs span text, audio, images, and video. Together, these three differences create a triple threat for manual red team probing. Consider surfacing just one type of risk (say, generating violent content) in one modality of one application (say, a chat interface in a browser). Even then, red teamers must try different strategies multiple times to find evidence of potential failures. Doing this manually for every type of harm, across all modalities and strategies, is tedious and slow.
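A quick calculation shows why manual probing scales badly. The number of test runs is the product of risks, modalities, strategies, and repeat attempts. The category lists and the figure of five attempts per combination below are illustrative assumptions, not numbers from Microsoft.

```python
from itertools import product

# Illustrative categories only; a real engagement defines its own lists.
risks = ["violent content", "data exfiltration", "ungrounded claims"]
modalities = ["web chat", "voice", "image upload"]
strategies = ["direct ask", "role-play", "encoding trick", "multi-turn"]
attempts_per_cell = 5  # repeats, because outputs are probabilistic

# Every (risk, modality, strategy) combination needs its own probing.
test_matrix = list(product(risks, modalities, strategies))
total_runs = len(test_matrix) * attempts_per_cell
print(f"{len(test_matrix)} combinations, {total_runs} runs")  # 36 combinations, 180 runs
```

Adding one more item to any list multiplies the total rather than adding to it, which is the kind of repetitive work the text argues should be automated.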

In 2021, Microsoft released Counterfit, a red team automation framework for classical machine learning systems. Because generative AI applications have a different threat surface and different underlying principles, Counterfit did not meet the team’s goals for them. The team therefore rethought how to help security professionals red team generative AI systems and built a new toolkit, PyRIT.

