Deci Delivers Breakthrough Inference Performance on Intel’s 4th Gen Sapphire Rapids CPU

The Intel-Deci breakthrough enables AI developers to achieve GPU-like AI inference performance on CPUs in production for both computer vision and NLP tasks

Deci, the deep learning company building the next generation of AI, announced breakthrough performance on Intel’s newly released 4th Gen Intel Xeon Scalable processors, code-named Sapphire Rapids. By optimizing the AI models that run on Intel’s new hardware, Deci enables AI developers to achieve GPU-like inference performance on CPUs in production for both Computer Vision and Natural Language Processing (NLP) tasks.

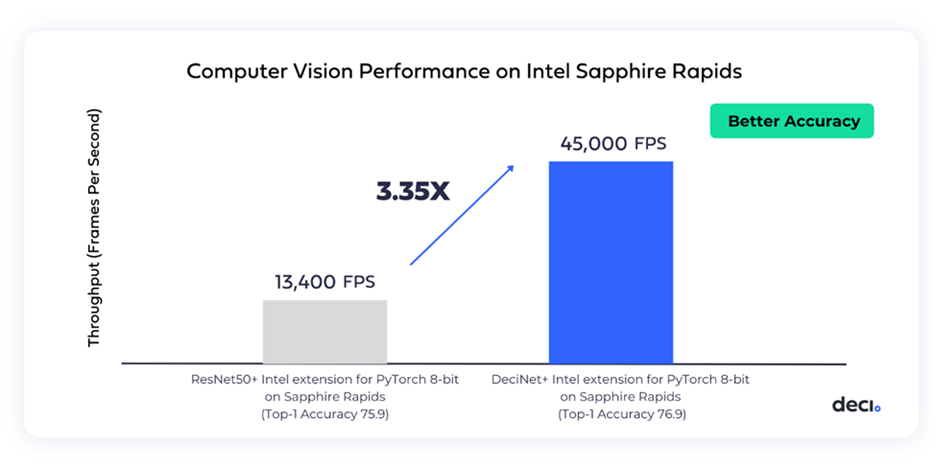

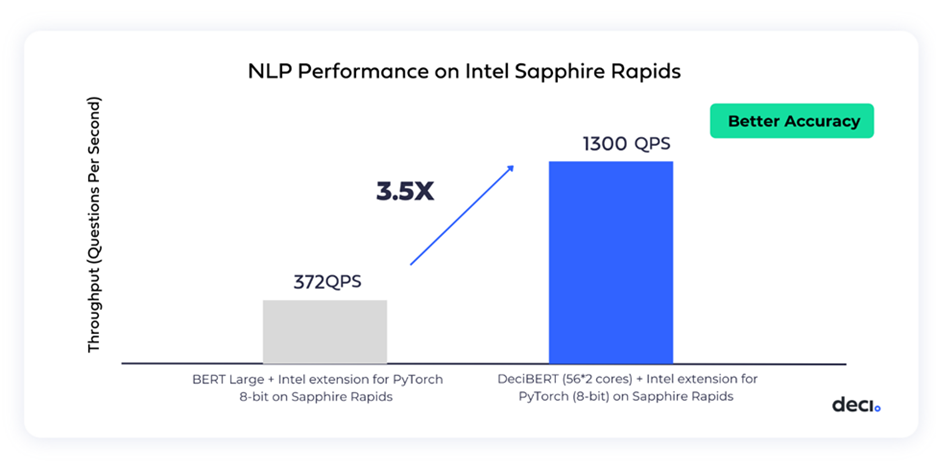

Deci used its proprietary AutoNAC (Automated Neural Architecture Construction) technology to generate custom, hardware-aware model architectures that deliver unparalleled accuracy and inference speed on the Intel Sapphire Rapids CPU. For computer vision, Deci delivered a 3.35x throughput increase and a 1% accuracy boost compared with an INT8 version of ResNet50 running on Intel Sapphire Rapids. For NLP, Deci delivered a 3.5x acceleration compared with the INT8 version of the BERT model on Intel Sapphire Rapids, along with a +0.1 increase in accuracy. All models were compiled and quantized to INT8 with Intel® Advanced Matrix Extensions (AMX) and Intel Extension for PyTorch.
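
To make "quantized to INT8" concrete, here is a minimal, generic sketch of symmetric per-tensor INT8 quantization in plain Python. It is not Deci's or Intel's pipeline: Intel Extension for PyTorch handles calibration and AMX kernel dispatch internally. The helper names (`quantize_int8`, `dequantize_int8`, `int8_dot`) are hypothetical and exist only for illustration. The dot product mirrors the general idea behind INT8 matrix hardware such as AMX. Values are multiplied as 8-bit integers, accumulated in a wider integer, and only rescaled to floating point at the end.

```python
from typing import List, Tuple


def quantize_int8(values: List[float]) -> Tuple[List[int], float]:
    """Hypothetical helper: symmetric per-tensor quantization to int8 in [-127, 127]."""
    max_abs = max((abs(v) for v in values), default=0.0)
    # Choose the scale so the largest magnitude maps to 127; guard against all-zero input.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize_int8(q: List[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float values."""
    return [x * scale for x in q]


def int8_dot(a: List[float], b: List[float]) -> float:
    """Approximate a float dot product using int8 operands and integer accumulation."""
    qa, sa = quantize_int8(a)
    qb, sb = quantize_int8(b)
    # Integer multiply-accumulate (hardware would accumulate in int32).
    acc = sum(x * y for x, y in zip(qa, qb))
    # A single float rescale at the end recovers the approximate result.
    return acc * sa * sb


if __name__ == "__main__":
    weights = [0.5, -1.27, 0.0, 1.0]
    activations = [1.0, 0.5, 2.0, -0.25]
    q, scale = quantize_int8(weights)
    print("int8 codes:", q, "scale:", scale)
    print("int8 dot:", int8_dot(weights, activations))
    print("float dot:", sum(x * y for x, y in zip(weights, activations)))
```

The int8 result closely tracks the float result, with a small rounding error. Production toolchains reduce that error with calibration data and per-channel scales, which this sketch deliberately omits.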

“This performance breakthrough marks another chapter in the Deci-Intel partnership, which empowers AI developers to achieve unparalleled accuracy and inference performance with hardware-aware model architectures powered by NAS,” said Yonatan Geifman, CEO and Co-Founder of Deci. “We are thrilled to enable our joint customers to achieve scalable, production-grade performance within days.”

Deci and Intel have maintained broad strategic business and technology collaborations since 2019, most recently announcing the acceleration of deep learning models on Intel chips with Deci’s AutoNAC technology. Deci is a member of the Intel Disruptor program and has collaborated with Intel on multiple MLPerf submissions. Together, the two companies are enabling new deep learning-based applications to run at scale on Intel CPUs while reducing development costs and time to market.

If you are using CPUs for deep learning inference or planning to do so, talk with Deci’s experts to learn how you can quickly obtain better performance and ensure maximum hardware utilization.