Explainable AI (XAI) in Security Applications

Explainable AI (XAI) is a transformative approach to artificial intelligence that moves away from the idea that sophisticated AI systems must function as “black boxes.” XAI focuses on making AI’s complex decision-making processes transparent and understandable to humans, thereby fostering greater trust and collaboration.

At its core, XAI acts as a “cognitive translator” between machine intelligence and human understanding. Just as language translation bridges cultural gaps, XAI translates the intricate workings of AI models into formats that align with human reasoning. This two-way communication lets humans grasp AI’s decisions and lets AI systems frame their explanations in terms that match human logic. That alignment paves the way for closer human-AI collaboration and for decision-making systems that combine the strengths of human and artificial intelligence.

In essence, XAI builds trust and transparency in AI systems by making their operations more interpretable and accessible to non-experts, offering significant potential for future applications in hybrid decision-making environments.

Why XAI Matters in Security Applications

Explainability in AI-powered cybersecurity systems is essential for ensuring transparency and trust. While AI detects and responds to rapidly evolving threats, XAI enables security professionals to understand how these decisions are made. By revealing the reasoning behind AI predictions, XAI allows analysts to make informed decisions, quickly adapt strategies, and fine-tune systems in response to advanced threats.

XAI enhances collaboration between humans and AI, combining human intuition with AI’s computational power. This transparency leads to improved decision-making, faster threat response, and increased trust in AI-driven security systems.

Key Differences Between AI and XAI

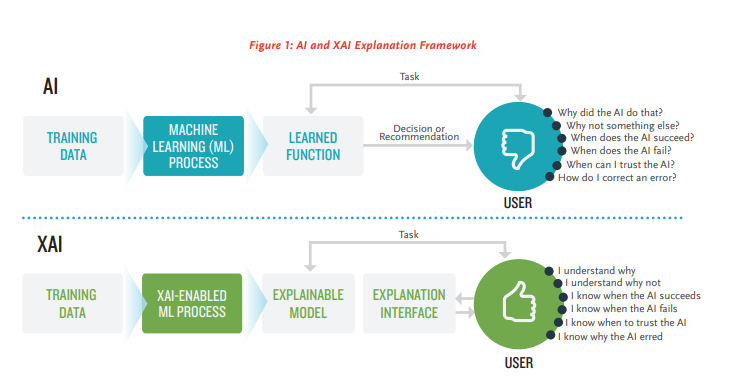

The primary distinction between AI and XAI lies in transparency. XAI employs methods that allow each decision in the machine-learning process to be traced and explained. In contrast, traditional AI systems often produce results without clear insights into how those outcomes were reached. This lack of explainability can compromise accuracy, control, accountability, and auditability in AI systems.

Implementing XAI in Cybersecurity: Key Challenges to Know

Despite the benefits of XAI in optimizing cybersecurity protocols, several challenges persist:

Adversarial Attacks: Threat actors can exploit XAI, for example by studying explanations to learn which features a model relies on and then manipulating inputs to evade it. As XAI adoption grows, this remains a significant concern.

Complex AI Models: Deep learning algorithms are often difficult to explain, even with XAI techniques, which makes their decisions harder to interpret.

Resource Constraints: XAI requires additional processing power to explain decisions, straining organizations with limited computational resources.

Balancing Transparency and Cost: XAI’s transparency must align with budget constraints. Factors like infrastructure scalability, system integration, and model maintenance can increase financial pressure. Decisions regarding cloud vs. on-premise deployment also affect costs and control.

Data Privacy Risks: XAI techniques may inadvertently expose sensitive data used to train AI models, creating a conflict between transparency and privacy.

Understanding XAI Explanations: XAI explanations can be too technical for some security professionals, requiring customization for effective communication.

The Role of XAI in Cybersecurity

Explainable AI (XAI) is becoming increasingly vital in cybersecurity due to its role in enhancing transparency, trust, and accountability. Here’s why XAI is essential:

Transparency and Trust

XAI fosters transparency by helping security professionals understand the rationale behind AI decisions. It clarifies why an AI model flags certain activities as malicious or benign, allowing for continuous improvement of security measures.
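For a linear model, the “why” behind a flag can be read off directly: each feature contributes its weight times its value to the score. The sketch below assumes a hypothetical logistic-regression detector with hand-picked weights and made-up feature names (`failed_logins`, `bytes_out_mb`, `off_hours`); it illustrates the idea rather than any specific product.

```python
import math

# Hypothetical weights for a toy logistic-regression detector (assumed, not trained here).
WEIGHTS = {"failed_logins": 0.8, "bytes_out_mb": 0.05, "off_hours": 1.2}
BIAS = -4.0

def explain_linear(event):
    """Return P(malicious) and per-feature contributions (weight * value), largest first."""
    contributions = {name: w * event.get(name, 0.0) for name, w in WEIGHTS.items()}
    logit = BIAS + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-logit))
    # Features that pushed the score toward "malicious" the most come first.
    ranked = sorted(contributions.items(), key=lambda kv: kv[1], reverse=True)
    return probability, ranked

event = {"failed_logins": 5, "bytes_out_mb": 20, "off_hours": 1}
prob, ranked = explain_linear(event)
print(f"P(malicious) = {prob:.2f}")
for name, contribution in ranked:
    print(f"  {name}: {contribution:+.2f}")
```

Here the analyst sees that repeated failed logins, not the data volume, drove the alert. Non-linear models need approximation methods (such as LIME or SHAP) to produce a comparable breakdown.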

Bias Identification

XAI provides insights into AI decision-making processes, enabling the identification and correction of biases. This helps keep cybersecurity measures fair and upholds the integrity of security protocols.

Rising Cyber Threats

The increasing frequency of global attacks, which surged by 28% in the third quarter of 2022 compared to the previous year, highlights the urgent need for XAI. XAI supports stakeholders, namely designers, model users, and adversaries, in exploring both traditional and security-specific explanation methods.

Applications Across Industries

Research by Gautam Srivastava highlights applications of XAI across technology sectors, including smart healthcare, smart banking, and Industry 4.0. A survey of XAI tools and libraries also helps practitioners implement explainability in cybersecurity.

Comprehensive Reviews

Literature reviews encompassing 244 references detail the use of deep learning techniques in cybersecurity applications, such as intrusion detection and digital forensics. These reviews underline the need for formal evaluations and human-in-the-loop assessments.

Innovative Approaches

The X_SPAM method combines Random Forest with LSTM for spam detection and uses LIME to make its predictions explainable. Surveys categorize XAI applications into defenses against cyber-attacks, potential industry applications, and adversarial threats, stressing the need for standardized evaluation metrics.
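LIME explains a single prediction by perturbing the input, querying the black-box model on the perturbed samples, and fitting a proximity-weighted linear surrogate whose coefficients serve as the local explanation. The pure-Python sketch below illustrates that idea on tabular features. The spam scorer, its three features (`links`, `caps_ratio`, `known_sender`), the Gaussian perturbation, and the kernel and ridge parameters are all assumptions; this is neither the X_SPAM implementation nor the `lime` library's API.

```python
import math
import random

def black_box(x):
    """Hypothetical opaque spam scorer (stands in for, e.g., an RF/LSTM ensemble)."""
    links, caps_ratio, known_sender = x
    z = 1.5 * links + 4.0 * caps_ratio ** 2 - 3.0 * known_sender - 2.0
    return 1.0 / (1.0 + math.exp(-z))

def solve(a, b):
    """Solve the small linear system a @ x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

def lime_explain(f, instance, num_samples=500, scale=0.5, kernel_width=0.75, ridge=1e-3, seed=0):
    """Fit a proximity-weighted ridge surrogate around `instance`; return per-feature slopes."""
    rng = random.Random(seed)
    d = len(instance)
    # Accumulate normal equations (A^T W A) beta = A^T W y, with an intercept column.
    ata = [[0.0] * (d + 1) for _ in range(d + 1)]
    aty = [0.0] * (d + 1)
    for _ in range(num_samples):
        sample = [v + rng.gauss(0.0, scale) for v in instance]
        dist2 = sum((s - v) ** 2 for s, v in zip(sample, instance))
        weight = math.exp(-dist2 / kernel_width ** 2)  # nearby samples count more
        row = [1.0] + sample
        y = f(sample)
        for i in range(d + 1):
            aty[i] += weight * row[i] * y
            for j in range(d + 1):
                ata[i][j] += weight * row[i] * row[j]
    for i in range(1, d + 1):  # regularize slopes, not the intercept
        ata[i][i] += ridge
    return solve(ata, aty)[1:]  # drop the intercept

names = ["links", "caps_ratio", "known_sender"]
email = [2.0, 0.6, 0.0]
weights = lime_explain(black_box, email)
for name, w in zip(names, weights):
    print(f"{name}: {w:+.3f}")
```

Positive slopes push this particular email toward “spam” and negative ones away from it; the explanation is only valid near the explained instance.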

Focus on Cyber Threat Intelligence (CTI)

XAI is integral to Cyber Threat Intelligence, supporting phishing analytics, attack vector analysis, and cyber-defense development. Research in this area highlights the strengths and shortcomings of existing methods and proposes interpretable, privacy-preserving tools.

Ensuring Accuracy and Performance

Beyond explainability, ensuring accuracy and performance in AI models is crucial. XAI can help reveal imbalances in training datasets so they can be corrected, improving system robustness.

Security of XAI Systems

Work on Explainable Security (XSec) examines how to secure XAI systems against vulnerabilities. In Side Channel Analysis (SCA), AI is used to extract secret information from cryptographic devices by analyzing their physical emissions, and XAI helps identify the critical features these models rely on.
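Occlusion is one simple, model-agnostic way to find which points in a side-channel trace a trained model depends on: mask a window of samples and measure how much the model’s score drops. In the sketch below, `toy_model` is a hypothetical stand-in for a trained SCA network and the trace is synthetic; published SCA work may rely on other attribution methods, such as gradient-based saliency.

```python
def toy_model(trace):
    """Hypothetical classifier score that (unknown to the analyst) uses only samples 40-44."""
    return sum(trace[40:45]) / 5.0

def occlusion_map(model, trace, window=5, fill=0.0):
    """For each window of samples, report the score drop when that window is set to `fill`."""
    base = model(trace)
    drops = []
    for start in range(0, len(trace), window):
        occluded = list(trace)
        for i in range(start, min(start + window, len(trace))):
            occluded[i] = fill
        drops.append((start, base - model(occluded)))  # larger drop = more important window
    return drops

trace = [1.0] * 100  # synthetic 100-sample power trace
ranked = sorted(occlusion_map(toy_model, trace), key=lambda s: s[1], reverse=True)
print(ranked[0])  # window start and score drop of the most influential region
```

On real traces, the highlighted windows would be compared against the points in time where the targeted cryptographic operation leaks.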

Interpretable Neural Networks

Interpretable neural networks, such as the Truth Table Deep Convolutional Neural Network (TT-DCNN), clarify what models learn in SCA. Because AI-based attacks challenge countermeasures such as masking, methodologies like ExDL-SCA have been proposed to evaluate how effective those countermeasures are. XAI also plays a crucial role in detecting hardware trojans.

XAI Use Cases in Cybersecurity

Threat Detection

XAI empowers cybersecurity analysts by providing insights into why specific activities or anomalies are flagged as potential threats, clarifying the decision-making processes of detection systems.
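A minimal way to attach reasons to an anomaly alert is to score each feature against its historical baseline and report the features that deviate most. The sketch below assumes made-up feature names, a tiny baseline, and a 3-sigma threshold; production detectors are usually more sophisticated, but the principle of surfacing per-feature reasons is the same.

```python
import statistics

def explain_anomaly(baseline, observation, threshold=3.0):
    """Flag `observation` and list features whose z-score against `baseline` meets `threshold`."""
    reasons = []
    for feature, value in observation.items():
        history = [row[feature] for row in baseline]
        mean = statistics.mean(history)
        stdev = statistics.pstdev(history) or 1e-9  # guard against a zero-variance feature
        z = (value - mean) / stdev
        if abs(z) >= threshold:
            reasons.append((feature, round(z, 1)))
    # Most anomalous feature first, so the strongest reason is at the top of the alert.
    reasons.sort(key=lambda r: abs(r[1]), reverse=True)
    return bool(reasons), reasons

baseline = [
    {"logins_per_hour": 4, "mb_uploaded": 10, "distinct_hosts": 3},
    {"logins_per_hour": 5, "mb_uploaded": 12, "distinct_hosts": 2},
    {"logins_per_hour": 6, "mb_uploaded": 11, "distinct_hosts": 3},
    {"logins_per_hour": 5, "mb_uploaded": 9, "distinct_hosts": 4},
]
observation = {"logins_per_hour": 5, "mb_uploaded": 250, "distinct_hosts": 3}
flagged, reasons = explain_anomaly(baseline, observation)
print(flagged, reasons)
```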

Incident Response

XAI aids cybersecurity investigators in identifying the root causes of security incidents and efficiently recognizing potential indicators of compromise.

Vulnerability and Risk Assessment

XAI techniques enhance transparency in vulnerability and risk assessments, allowing organizations to understand the rationale behind prioritizing certain vulnerabilities. This enables clearer prioritization of security measures and resource allocation.
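One way to make prioritization explainable is to keep each factor’s share of the final score alongside the ranking. The factors, weights, and vulnerability IDs below are hypothetical placeholders, not a standard scoring scheme.

```python
# Hypothetical factor weights; a real program would calibrate these to its own risk model.
FACTOR_WEIGHTS = {"cvss": 0.5, "exploit_available": 0.3, "internet_facing": 0.2}

def prioritize(vulns):
    """Rank vulnerabilities by weighted score, keeping each factor's contribution as the explanation."""
    ranked = []
    for v in vulns:
        factors = {
            "cvss": v["cvss"] / 10.0,  # normalize a 0-10 base score to 0-1
            "exploit_available": 1.0 if v["exploit_available"] else 0.0,
            "internet_facing": 1.0 if v["internet_facing"] else 0.0,
        }
        parts = {k: FACTOR_WEIGHTS[k] * x for k, x in factors.items()}
        ranked.append((v["id"], round(sum(parts.values()), 3), parts))
    return sorted(ranked, key=lambda r: r[1], reverse=True)

vulns = [
    {"id": "VULN-A", "cvss": 9.8, "exploit_available": False, "internet_facing": False},
    {"id": "VULN-B", "cvss": 7.5, "exploit_available": True, "internet_facing": True},
]
for vid, score, parts in prioritize(vulns):
    print(vid, score, {k: round(p, 2) for k, p in parts.items()})
```

The breakdown shows why the lower-severity but exposed, actively exploitable issue is ranked first.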

Compliance and Regulation

XAI helps organizations comply with regulations such as GDPR and HIPAA by offering clear explanations for AI-driven data protection and privacy decisions. Given the need for transparency, black-box AI poses legal risks for regulated entities.

Security Automation

XAI increases the transparency of automated security processes, such as firewall rule generation and access control decisions, by elucidating the actions taken by AI systems.
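Automated decisions become easier to audit when every check records why it fired. The sketch below is a hypothetical access-control policy (the MFA requirement, the risk cutoff of 70, and the country allow-list are assumptions) that returns its verdict together with the reasons behind it.

```python
from dataclasses import dataclass, field

@dataclass
class Decision:
    allow: bool
    reasons: list = field(default_factory=list)

def decide_access(request):
    """Hypothetical automated access policy that records each check that affected the outcome."""
    decision = Decision(allow=True)
    if not request.get("mfa_passed"):
        decision.allow = False
        decision.reasons.append("MFA not completed")
    if request.get("risk_score", 0) > 70:
        decision.allow = False
        decision.reasons.append(f"risk score {request['risk_score']} exceeds 70")
    if request.get("country") not in {"US", "CA"}:
        decision.allow = False
        decision.reasons.append(f"login country {request.get('country')} not in allow-list")
    if decision.allow:
        decision.reasons.append("all policy checks passed")
    return decision

print(decide_access({"mfa_passed": True, "risk_score": 85, "country": "US"}))
```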

Model Verification and Validation

XAI supports the verification of the accuracy and fairness of AI models used in cybersecurity, ensuring they function as intended and do not exhibit biases or unintended behaviors.
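A concrete validation check is to compare error rates across segments such as offices or user groups. A large gap in false-positive rates is a signal to dig in with explanation tools. The groups and records below are hypothetical.

```python
from collections import defaultdict

def false_positive_rates(records):
    """Per-group false-positive rate: benign events wrongly flagged / all benign events."""
    flagged = defaultdict(int)
    benign = defaultdict(int)
    for group, predicted_malicious, actually_malicious in records:
        if not actually_malicious:
            benign[group] += 1
            if predicted_malicious:
                flagged[group] += 1
    return {g: flagged[g] / benign[g] for g in benign}

# (group, model flagged it, ground truth malicious)
records = [
    ("office_a", True, False), ("office_a", False, False),
    ("office_a", False, False), ("office_a", False, False),
    ("office_b", True, False), ("office_b", True, False),
    ("office_b", False, False), ("office_b", True, True),
]
rates = false_positive_rates(records)
print(rates)
```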

Adopting XAI with Ethical AI Practices: Key Implementation Considerations

Balancing AI’s Potential for Good and Adversarial Uses

AI has the power to drive positive change, but it also presents adversarial risks. In adopting XAI, organizations must consider its impact on areas like data fusion, theory-guided data science, and adversarial machine learning (ML). Adversarial ML, where small alterations in data lead to incorrect predictions, highlights the need for vigilance in safeguarding AI algorithms and ensuring their confidentiality.
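To make “small alterations in data lead to incorrect predictions” concrete, the sketch below applies the fast gradient sign method (FGSM) to a hypothetical linear detector. For a linear logit the input gradient is simply the weight vector, so shifting each feature by `eps` against the sign of its weight lowers the score as fast as possible within that per-feature budget. The weights, features, and `eps` are assumptions, and in real domains a perturbed input must also remain a valid file or packet.

```python
import math

# Hypothetical linear malware detector: logit = w . x + b (weights assumed, not trained here).
w = [2.0, -1.5, 3.0]
b = -1.0

def predict(x):
    """Probability that sample x is malicious."""
    z = sum(wi * xi for wi, xi in zip(w, x)) + b
    return 1.0 / (1.0 + math.exp(-z))

def sign(v):
    return (v > 0) - (v < 0)

def fgsm_evade(x, eps):
    """One FGSM step that lowers the malicious score: move each feature by -eps * sign(w_i)."""
    return [xi - eps * sign(wi) for xi, wi in zip(x, w)]

x = [0.6, 0.2, 0.3]
x_adv = fgsm_evade(x, eps=0.25)
print(f"original: {predict(x):.2f}, perturbed: {predict(x_adv):.2f}")
```

No feature moves by more than 0.25, yet the verdict flips from malicious to benign, which is why safeguarding models against such inputs matters.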

The Growing XAI Market

Although the XAI startup market remains small, with fewer than 50 companies, it is rapidly evolving. Many companies integrate XAI as a feature within existing platforms, rather than offering standalone XAI solutions. As this field grows, leaders should keep a close eye on XAI developments to stay competitive and make informed decisions on its adoption.

Key Recommendations for XAI Implementation

- High-Impact Industries: XAI raises ethical standards in industries where fairness, transparency, and accountability are critical. Organizations should adopt XAI to ensure data is ethically used, especially in sectors where public trust is paramount. As regulations around AI ethics evolve, integrating explainability into AI frameworks is essential.

- Partnership Prioritization: Partnering with AI-driven firms can help organizations lighten their compliance load. XAI provides deeper insights into data and automates workflows, making it a strategic asset in industries reliant on AI-driven investment decisions and regulatory compliance.

- Technical Investments: Investing in XAI can enhance trust between organizations and the public by making AI systems more transparent. By automating compliance outreach and embedding XAI into business processes, organizations can improve efficiency and reduce reliance on opaque AI models.

- Workforce Transformation: XAI will require ongoing human involvement to ensure AI systems perform accurately. Organizations should foster the development of flexible, interpretable AI models that collaborate with human experts. This approach will support the integration of XAI into existing business workflows and enhance the trustworthiness of AI-driven decisions.
