NEC Develops Two High-speed Generative AI LLMs to Improve User Experience

"NEC cotomi Pro" and "NEC cotomi Light" achieve the same quality as global models at more than 10 times the speed

NEC Corporation (NEC; TSE: 6701) has expanded its “NEC cotomi” generative AI services with the development of “NEC cotomi Pro” and “NEC cotomi Light,” two new high-speed generative AI large language models (LLMs) featuring updated training data and architectures.

With the rapid development of generative AI in recent years, a wide range of organizations have been considering and verifying business transformation using LLMs. As specific application scenarios emerge, there is a need to provide models and formats that meet customer needs in terms of response time, business data coordination, information protection, and other security aspects during implementation and operation.

NEC’s newly developed NEC cotomi Pro and NEC cotomi Light are high-speed models that deliver performance on par with global LLMs at more than ten times the speed.

Generally, to improve the performance of an LLM, a model needs to be made larger, but this slows down the operating speed. However, NEC has succeeded in improving both speed and performance with the development of an advanced new training method and architecture.

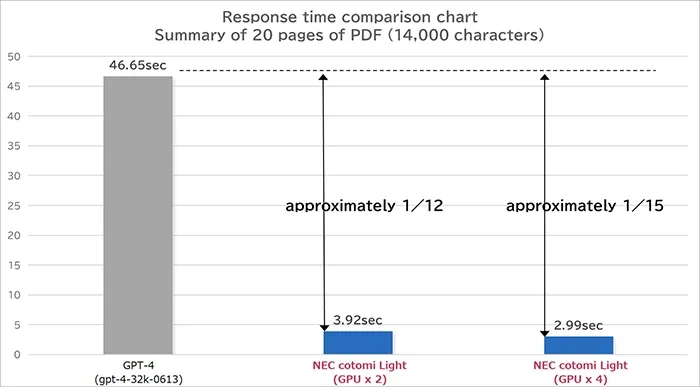

“NEC cotomi Pro” achieves performance comparable to top-level global models such as “GPT-4” and “Claude 2,” with a response time that is approximately 87% faster than GPT-4 using an infrastructure of two graphics processing units (GPUs). In addition, the even faster “NEC cotomi Light” has the same level of performance as global models such as “GPT-3.5-Turbo,” but can process a large number of requests at high speed with an infrastructure of about one to two GPUs, providing sufficient performance for many tasks.

Specifically, in an in-house document retrieval system using a technique called RAG, the system achieved a correct response rate higher than GPT-3.5 without fine-tuning and a correct response rate higher than GPT-4 after fine-tuning, with a response time that is approximately 93% faster.
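For readers unfamiliar with RAG (retrieval-augmented generation), the following is a minimal sketch of the general idea, not NEC's implementation: relevant passages are retrieved for a question and placed in the prompt that is sent to the LLM. The sample documents, the word-overlap scoring, and the function names `retrieve` and `build_prompt` are all illustrative assumptions; a production system would typically use embedding-based search and a real model call.

```python
import math
import re
from collections import Counter

# Hypothetical in-house documents, used for illustration only.
DOCS = [
    "Expense reports must be submitted by the fifth business day of each month.",
    "The cafeteria is open from 11:30 to 14:00 on weekdays.",
    "Remote work requests require approval from a direct manager.",
]


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two bag-of-words count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = Counter(tokenize(query))
    ranked = sorted(
        documents,
        key=lambda doc: cosine(q, Counter(tokenize(doc))),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Place the retrieved passages ahead of the question in the LLM prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


if __name__ == "__main__":
    question = "When must expense reports be submitted?"
    print(build_prompt(question, retrieve(question, DOCS, k=1)))
```

The resulting prompt would then be passed to whichever LLM is in use; that call is omitted here because the source does not describe the interface.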

Features of NEC cotomi Pro and NEC cotomi Light

High throughput for various tasks

Both “NEC cotomi Pro” and “NEC cotomi Light” rank at the top level globally in terms of knowledge and the ability to handle various tasks, such as document summarization, logical reasoning, and question answering.

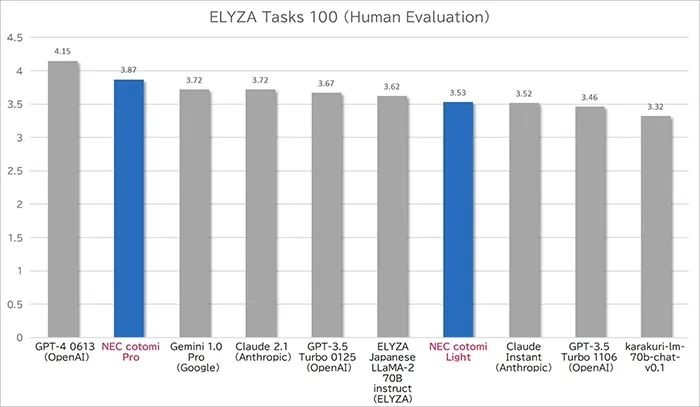

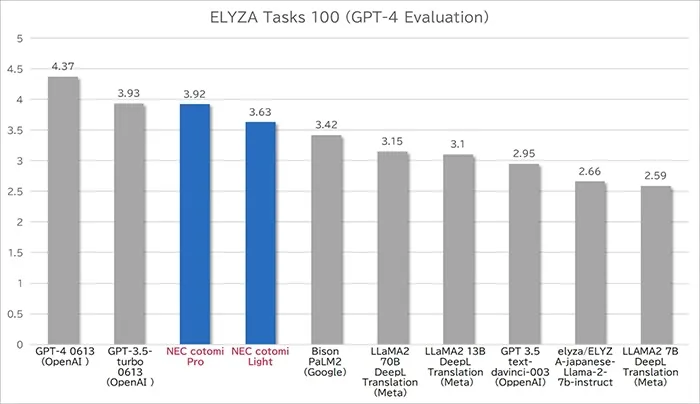

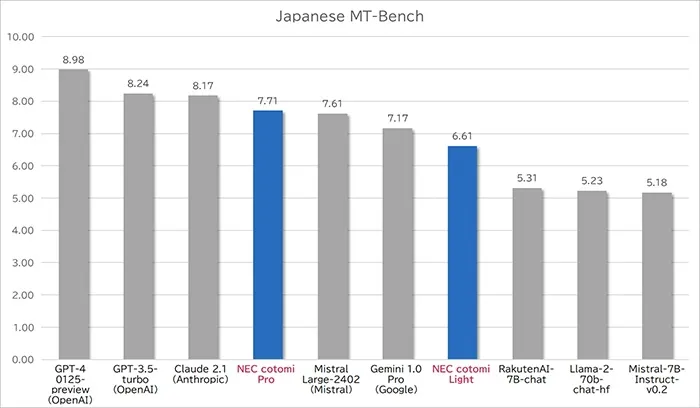

Specifically, NEC confirmed top-level performance, not only in Japan but also globally, in two benchmarks commonly used to measure overall LLM performance: “ELYZA Tasks 100” and “Japanese MT-Bench.” NEC cotomi Pro provided results more than five times faster than GPT-4 (*) on a standard server with two GPUs. Moreover, it was faster than models such as “Gemini 1.0 Pro” and showed performance that is comparable to Claude 2 and GPT-4. At the same time, “NEC cotomi Light” provided results that are more than 15 times faster than GPT-4 while outperforming large models such as “LLaMA2-70B” and demonstrating performance that is comparable to GPT-3.5-Turbo.

High-speed Performance LLMs in the Cotomi Lineup

In addition to high performance during inference, the time (speed) between sending a request and receiving a response is also important for the practical application of LLMs. NEC cotomi Pro and NEC cotomi Light have achieved high-speed processing that is 87% to 93% faster than GPT-4 with two standard GPUs. This has been accomplished thanks to architectural innovations that have enhanced both performance and speed, as well as a large Japanese dictionary (for tokenization) in the model. These innovations not only increase the speed of inference but also increase the number of simultaneous accesses and reduce the training time required for fine-tuning.

Moreover, additional speed improvements can be made by increasing the number of GPUs to 4 or 8, allowing for flexible construction to meet the needs of each application.
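The release quotes speed both as percentages (87% to 93% faster) and as multiples (more than five times, more than 15 times faster). As a rough guide to how the two kinds of figures relate, the sketch below converts between them under one assumption that the source does not state explicitly: that "X% faster" means the response time is reduced by X%. The function names are illustrative, and the figures in the release may come from different measurements, so they need not match this conversion exactly.

```python
def speedup_from_time_reduction(percent_faster: float) -> float:
    """Convert a response-time reduction (in percent) to a speedup multiple.

    Assumes "X% faster" means the new response time is (100 - X)% of the old one.
    """
    if not 0 <= percent_faster < 100:
        raise ValueError("percent_faster must be in the range [0, 100)")
    return 1.0 / (1.0 - percent_faster / 100.0)


def time_reduction_from_speedup(factor: float) -> float:
    """Convert a speedup multiple to the equivalent response-time reduction in percent."""
    if factor < 1:
        raise ValueError("factor must be at least 1")
    return (1.0 - 1.0 / factor) * 100.0


if __name__ == "__main__":
    for pct in (87, 93):
        print(f"{pct}% shorter response time = {speedup_from_time_reduction(pct):.1f}x speedup")
    for factor in (5, 15):
        print(f"{factor}x speedup = {time_reduction_from_speedup(factor):.1f}% shorter response time")
```

Under this reading, a 93% reduction in response time corresponds to roughly a 14-fold speedup, which is in the same range as the "more than 15 times" figure quoted for NEC cotomi Light.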

By utilizing a model that achieves high processing power with high speed and mass access, it is possible to significantly shorten the response time of business applications that utilize generative AI and improve user experience. In addition, high processing power can significantly improve performance after fine-tuning of individual data for each company.

Going forward, NEC will continue to strengthen cooperation with partners and provide safe, secure, and reliable AI services based on the expanded NEC cotomi lineup, with the goal of helping customers to solve a wide range of complex challenges.

(*) Experiment conducted at 16-bit calculation precision in a GPU environment equipped with two L40s

NEC Corporation has established itself as a leader in the integration of IT and network technologies while promoting the brand statement of “Orchestrating a brighter world.” NEC enables businesses and communities to adapt to rapid changes taking place in both society and the market as it provides for the social values of safety, security, fairness, and efficiency to promote a more sustainable world where everyone has the chance to reach their full potential.
