Scaling Digital Twins Made Possible: Neural Reconstruction for Automotive Simulation Applications

By: Szabolcs Janky, SVP of Product at aiMotive

Validating automated driving software requires millions of test kilometers. This implies long development cycles of continuously increasing complexity, and it also exposes a practical problem: real-world testing is resource intensive and can raise safety issues. Consequently, virtual validation suites are essential to alleviate the burdens of real-world testing.

Since automated driving (AD) and Advanced Driver Assistance Systems (ADAS) rely on closed-loop validation, accurate 3D environments that represent real-world scenarios are essential. Having 3D artists build these environments is a highly manual process, especially if centimeter-level accuracy is targeted. It often takes months to create a map section only a few kilometers long in the simulated world. Thus, virtual validation has challenges of its own, most importantly scalability and the Sim2Real domain gap.

With continuous development in the field, neural reconstruction (or neural rendering) has become a promising technique to mitigate the issues of scalability and the domain gap. It combines deep learning with the physical knowledge of computer graphics, enabling the creation of controllable 3D scenes with adjustable lighting and camera parameters, all in a fraction of the time required for manual recreation.[1] In the following, we introduce neural reconstruction primarily from an automotive perspective, but keep in mind that the technique shows promising results in a variety of applications involving computer graphics.

What is neural reconstruction?

As introduced above, neural reconstruction (or neural rendering) is a novel way to create 3D environments and scenes efficiently, building on deep learning combined with traditional computer graphics. The input data for such reconstructions is usually 2D camera images plus additional sensor data, such as LiDAR point clouds, if depth estimation is needed for the scene. Based on this input, the neural network can generate 3D environments that accurately represent details such as building decorations or vegetation.

For automotive simulation applications, the two key forms of neural rendering are Neural Radiance Fields (NeRF) and 3D Gaussian Splatting, each with its own advantages and disadvantages. NeRF leverages deep learning to generate detailed 3D scenes from 2D images, while 3D Gaussian Splatting is a rendering technique borrowed from computer graphics that projects data points onto a 2D plane using Gaussian distributions. While both can generate high-fidelity outputs, 3D Gaussian Splatting falls behind in absolute quality but has the great advantage that it can achieve real-time rendering. In addition, NeRF has a more user-friendly codebase and scales better with the size of the area to be reconstructed. However, neither is yet optimal for depth estimation, which is critical for most simulation use cases.
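
Both approaches ultimately turn a 3D representation into pixel colors by blending contributions along each camera ray from front to back. The snippet below is a minimal sketch of that compositing step for NeRF-style density samples; the function and parameter names are illustrative assumptions and do not come from any particular library.

```python
import math

def composite_ray(densities, colors, step_sizes):
    """Blend samples along one camera ray, front to back.

    densities:  volume density (sigma) at each sample, nearest first
    colors:     (r, g, b) at each sample, components in [0, 1]
    step_sizes: distance covered by each sample along the ray
    """
    transmittance = 1.0  # fraction of light not yet absorbed
    pixel = [0.0, 0.0, 0.0]
    for sigma, color, step in zip(densities, colors, step_sizes):
        alpha = 1.0 - math.exp(-sigma * step)  # opacity of this sample
        weight = transmittance * alpha         # its contribution to the pixel
        pixel = [p + weight * c for p, c in zip(pixel, color)]
        transmittance *= 1.0 - alpha
    return pixel, transmittance

# Two half-opaque samples: red in front, blue behind.
half = math.log(2.0)  # density that gives alpha = 0.5 for a unit step
pixel, remaining = composite_ray([half, half], [(1, 0, 0), (0, 0, 1)], [1.0, 1.0])
```

In this example the front sample contributes half of its color and the sample behind it a quarter, with a quarter of the light passing through. 3D Gaussian Splatting applies the same front-to-back blending, but to depth-sorted Gaussians projected onto the image plane rather than to samples taken along a ray.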

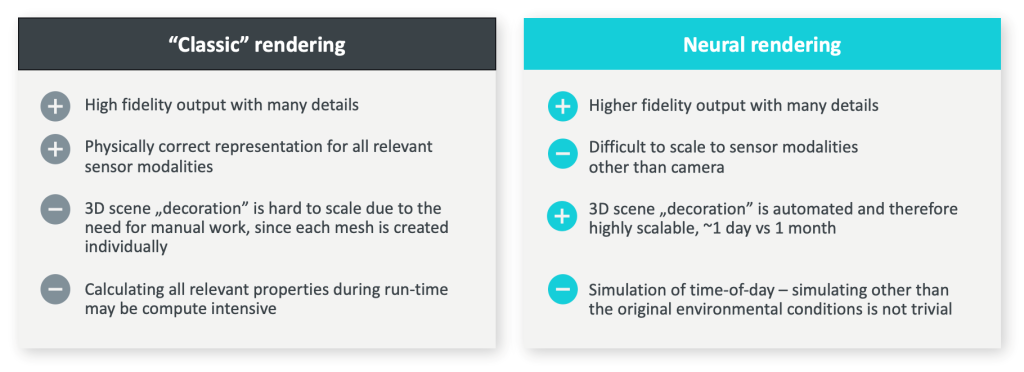

Compared to “classic” rendering, neural reconstruction can achieve high-quality photorealism in a scalable way for real-time applications, because in “classic” rendering each mesh needs to be created individually and manually, which increases the time and cost of modelling. However, these generative models still struggle with simulating time of day, generating sensor modalities other than cameras, inserting previously unseen objects into the 3D environment, and representing dynamic objects correctly. Geometric inconsistencies may also arise, especially in depth predictions and projective geometry.

Thus, as of now, neural reconstruction on its own is not suitable for automotive-grade validation, at least not without a twist.

Hybrid solution: integrating neural reconstruction

To illustrate how neural reconstruction is currently applied in simulation, here is the method aiMotive uses to overcome the technique’s current issues. aiMotive’s end-to-end simulation tool aiSim already applies neural rendering for content generation, using a mixed approach. In aiSim, the scenes generated by neural reconstruction are augmented with assets created by 3D artists, such as additional vehicles or vulnerable road users (VRUs, e.g., pedestrians and cyclists), to enable variation of the scenes. These 3D scenes can then be varied with weather conditions and additional assets, and can be played from a variety of camera angles, all while maintaining the well-established physics-based rendering of aiSim.
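
One common way to combine a reconstructed background with separately rendered assets is a per-pixel depth test: whichever surface is closer to the camera wins. The sketch below illustrates that general idea only; it is not aiSim's implementation, and all names and values in it are hypothetical.

```python
def composite_by_depth(scene_rgb, scene_depth, asset_rgb, asset_depth):
    """Per-pixel depth test: keep whichever surface is closer to the camera.

    All four inputs are row-major 2D lists of the same shape. Depths are
    distances in metres; float("inf") in asset_depth means the asset does
    not cover that pixel.
    """
    output = []
    for s_row, sd_row, a_row, ad_row in zip(scene_rgb, scene_depth, asset_rgb, asset_depth):
        output.append([
            a if ad < sd else s
            for s, sd, a, ad in zip(s_row, sd_row, a_row, ad_row)
        ])
    return output

NO_HIT = float("inf")
GREY, GREEN, RED = (128, 128, 128), (0, 128, 0), (200, 0, 0)

# 1x3 image: road at 20 m, a reconstructed tree at 5 m, road at 20 m.
scene_rgb = [[GREY, GREEN, GREY]]
scene_depth = [[20.0, 5.0, 20.0]]
# An inserted car at 10 m covers the first two pixels only.
asset_rgb = [[RED, RED, RED]]
asset_depth = [[10.0, 10.0, NO_HIT]]

result = composite_by_depth(scene_rgb, scene_depth, asset_rgb, asset_depth)
```

Here the inserted car replaces the road in the first pixel but stays hidden behind the closer tree in the second, which is why an accurate depth estimate for the reconstructed scene matters for realistic occlusion.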

To overcome the depth estimation challenge, aiMotive uses LiDAR point clouds in addition to camera images for depth regularization. The collected sensor data is then annotated by an internal Auto Annotator, which also removes dynamic objects to sidestep the problems of representing them. Once the neural network is trained, the 3D environments can be loaded into aiSim, where custom scenarios and virtual sensors can be added to the scene to achieve the desired customization and variation.
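
Using LiDAR depth as an extra training signal is often expressed as an additional loss term next to the usual image loss. The article does not describe aiMotive's actual training objective, so the following is only a generic sketch under that assumption; the function name and the `depth_weight` value are hypothetical.

```python
def depth_regularized_loss(pred_rgb, true_rgb, pred_depth, lidar_depth, depth_weight=0.1):
    """Photometric loss plus a LiDAR depth penalty, over a batch of pixels.

    pred_rgb, true_rgb: lists of (r, g, b) tuples, one per pixel
    pred_depth:         rendered depth per pixel, in metres
    lidar_depth:        measured depth per pixel, or None where no LiDAR
                        point projects into that pixel
    depth_weight:       hypothetical weighting factor, not from the article
    """
    # Mean squared color error over all pixels and channels.
    squared = [
        (p - t) ** 2
        for pred, true in zip(pred_rgb, true_rgb)
        for p, t in zip(pred, true)
    ]
    photometric = sum(squared) / len(squared)

    # Mean absolute depth error, only where a LiDAR measurement exists.
    errors = [abs(p - m) for p, m in zip(pred_depth, lidar_depth) if m is not None]
    depth = sum(errors) / len(errors) if errors else 0.0

    return photometric + depth_weight * depth

# Two pixels with perfect color; only the first has a LiDAR return.
colors = [(0.5, 0.5, 0.5), (0.2, 0.2, 0.2)]
loss = depth_regularized_loss(colors, colors, [10.0, 12.0], [11.0, None])
```

Because LiDAR point clouds are sparse compared to camera images, the depth term is evaluated only at pixels that actually receive a LiDAR point, while the color term covers every pixel.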

This way, the following features are already available in aiMotive’s aiSim:

1. Virtual Dynamic Content Insertion is made possible, meaning that dynamic objects can be added with realistic occlusion, including ambient occlusion, while environmental effects like rain, snow and fog can be simulated for more diverse scenarios.

2. Multi-Modality Rendering can be achieved in aiSim, enabling the generation of accurate RGB images, depth maps, and LiDAR intensity maps from arbitrary camera viewpoints (as seen below, with ground truth (GT) in the first row). aiMotive also supports semantic segmentation masks and is working on radar simulation.

3. With Camera Virtualization, various virtual camera setups, including different camera alignments and models, can be simulated, as shown below.
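
To make the idea of camera virtualization concrete, the sketch below projects the same 3D point through two virtual pinhole cameras with different focal lengths. It is a simplified illustration with hypothetical names and values, not aiSim's camera API; production camera models typically also account for lens distortion.

```python
import math

def world_to_camera(point, cam_position, yaw_rad):
    """Express a world point in the camera frame (x right, y down, z forward).

    The camera sits at cam_position and is rotated by yaw_rad about the
    vertical axis; positive yaw turns it toward +x.
    """
    dx, dy, dz = (p - c for p, c in zip(point, cam_position))
    cos_y, sin_y = math.cos(yaw_rad), math.sin(yaw_rad)
    return (cos_y * dx - sin_y * dz, dy, sin_y * dx + cos_y * dz)

def project_pinhole(point_cam, fx, fy, cx, cy):
    """Project a camera-frame point to pixel coordinates, or None if behind."""
    x, y, z = point_cam
    if z <= 0.0:
        return None
    return (fx * x / z + cx, fy * y / z + cy)

target = (1.0, 0.0, 10.0)  # 1 m to the right of and 10 m ahead of the origin
origin = (0.0, 0.0, 0.0)

# Two hypothetical virtual cameras sharing a 1920x1080 image center.
narrow = project_pinhole(world_to_camera(target, origin, 0.0), 2000.0, 2000.0, 960.0, 540.0)
wide = project_pinhole(world_to_camera(target, origin, 0.0), 1000.0, 1000.0, 960.0, 540.0)
```

The longer focal length places the target 200 pixels right of the image center and the shorter one 100 pixels, so the same reconstructed scene can be re-rendered for different lenses and mounting positions by changing only these parameters.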

What does the future hold for neural reconstruction?

Overall, neural reconstruction is an essential technique to ramp up large-scale testing, since manual work can be a hindering factor in scaling the simulation pipeline.

In automotive simulation, there are three important factors to consider: quality, efficiency, and variability. 3D Gaussian Splatting provides adequate answers to the first two much faster than any traditional technique, and improving hybrid rendering could bring endless testing options for automated driving in the virtual world.

For instance, simulating certain weather elements such as falling rain and snow is already possible; we are working on making precipitation visible as coverage on the reconstructed static environment as well. Similarly, we should be able to set any time of day, with shadows and lighting changing accordingly. One of the biggest tasks is to bring high-fidelity non-camera sensor modalities into these automatically created 3D environments. Our development team has already developed a solution for LiDAR and has an implementation plan for enabling radar simulation.

As the entire neural reconstruction field is evolving daily, we are continuously researching new approaches that could be applicable or adaptable to make autonomous driving testing better, faster, and safer.

If you are interested in further articles on “Automotive Simulation Meets AI”, sign up to aiMotive’s newly launched newsletter on LinkedIn.
