Google Introduces SIMA: A Versatile AI Agent for 3D Environments

What is a Scalable Instructable Multiworld Agent (SIMA)?

Video games are a key proving ground for AI systems. Like the real world, games are dynamic learning environments with responsive, real-time settings and ever-changing goals. Google DeepMind has a long history of work at the intersection of AI and games, from its early work with Atari games to its AlphaStar system, which plays StarCraft II at a human-grandmaster level. Google is now exploring how a new AI agent can operate in 3D virtual game worlds.


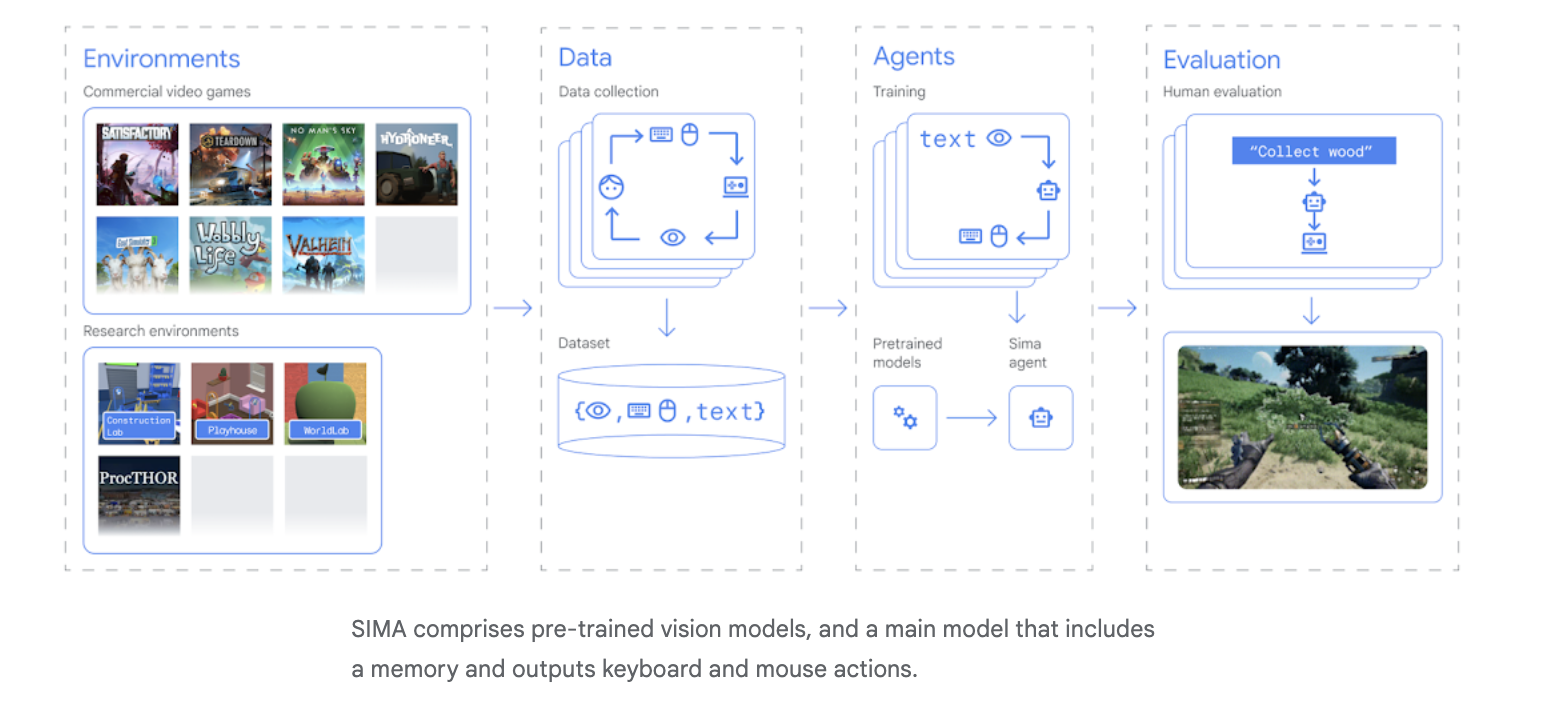

The above image has been taken from the Google website.

A new technical report introduces the Scalable Instructable Multiworld Agent (SIMA), a generalist AI agent for 3D virtual environments. To teach SIMA how to play different games, the researchers collaborated with game developers. According to the report, this is the first time an agent has shown that it can understand a broad range of game worlds and, as a person might, carry out tasks within them by following natural-language instructions.

Benefits

- Future agents may be able to tackle tasks that require high-level strategic planning and multiple sub-tasks, such as “Find resources and build a camp”.

- SIMA is trained to perform simple tasks that can be completed within about 10 seconds.

- It can perceive and understand a variety of environments, and then take action to achieve an instructed goal.

- SIMA was evaluated across 600 basic skills, spanning navigation, object interaction, and menu use.

- Acting on instructions is an important goal for AI in general: Large Language Models have given rise to powerful systems that can capture knowledge about the world and generate plans, but they currently cannot take actions themselves.

- SIMA “understands” your commands because it has been trained to process human language. When you ask it to build a castle or find the treasure chest, it maps those commands to actions in the game. One distinctive feature of this AI agent is that it can learn and adapt within a virtual 3D environment.

How Does it Operate?

Scoring well in video games is not the point of this effort. Impressive as it is for an AI to master a single game, it would be more impressive still for it to learn to follow instructions across a variety of game settings, which could lead to more versatile AI agents that are useful in any environment. In their research, the team demonstrates how a language interface can translate the capabilities of advanced AI models into useful, real-world actions.


The researchers established several collaborations with game developers to expose SIMA to a variety of environments. They worked with eight game studios to train and test SIMA on nine different video games, including Hello Games’ No Man’s Sky and Tuxedo Labs’ Teardown. Each game in SIMA’s portfolio opens up a new interactive world with a range of skills to learn, from simple navigation and menu use to mining resources, flying a spaceship, and crafting a helmet. SIMA is an AI agent that can perceive and understand a variety of environments, then take actions to achieve an instructed goal. It comprises a model designed for precise image-language mapping and a video model that predicts what will happen next on screen. The researchers fine-tuned these models on training data specific to the 3D settings in the SIMA portfolio.

SIMA does not need access to a game’s source code or to custom APIs. It requires only two inputs: the images displayed on screen and simple, natural-language instructions from the user. To make the game’s central character carry out these instructions, SIMA produces keyboard and mouse outputs. Because this is the same interface that people use, SIMA could potentially interact with any virtual environment.
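The interface described above (screen images and a text instruction in, keyboard and mouse actions out) can be sketched in a few lines of Python. This is only an illustration of the input/output contract, not SIMA’s actual implementation: the names `Observation`, `Action`, `KeywordAgent`, and `run_episode` are hypothetical, and the keyword-matching policy is a toy stand-in for the learned models.

```python
from dataclasses import dataclass
from typing import List, Protocol, Sequence


@dataclass(frozen=True)
class Observation:
    """What the agent receives: a screen image plus a text instruction."""
    frame: bytes       # hypothetical: an encoded screenshot of the game window
    instruction: str   # e.g. "open the map"


@dataclass(frozen=True)
class Action:
    """What the agent emits: keys to press and a relative mouse movement."""
    keys: Sequence[str] = ()
    mouse_dx: int = 0
    mouse_dy: int = 0
    click: bool = False


class Agent(Protocol):
    """Any policy that maps one observation to one action."""
    def act(self, obs: Observation) -> Action: ...


class KeywordAgent:
    """Toy policy (an assumption for illustration, NOT how SIMA works).

    It ignores the frame and maps a few instruction phrases to fixed actions.
    """
    def act(self, obs: Observation) -> Action:
        text = obs.instruction.lower()
        if "turn left" in text:
            return Action(mouse_dx=-50)   # move the mouse left to rotate the view
        if "open the map" in text:
            return Action(keys=("m",))    # assumed key binding for the map
        return Action()                   # unknown instruction: do nothing


def run_episode(agent: Agent, frames: List[bytes], instruction: str) -> List[Action]:
    """Feed each frame with the same instruction and collect the emitted actions."""
    return [agent.act(Observation(frame=f, instruction=instruction)) for f in frames]
```

Because the agent only sees pixels and text and only emits key and mouse events, the same loop would apply unchanged to any game, which is the property the article attributes to SIMA’s design.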

The current version of SIMA is evaluated across 600 basic skills spanning navigation (e.g., “turn left”), object interaction (“climb the ladder”), and menu use (“open the map”). The researchers have trained SIMA to perform simple tasks that can be completed within about 10 seconds. SIMA’s results suggest that a new generation of generalist, language-driven AI agents may be within reach. This is still early-stage research, but the SIMA team is extending its experiments to more advanced training scenarios and models.
