The Future of Manufacturing: Industrial Generative AI and Digital Twins

The recent breakthroughs in the GPT and LLM landscapes have borne impressive results, such as industrial generative AI solutions. Industrial generative AI (GenAI) capabilities can improve the efficiency of manufacturing processes by fostering advanced digital transformations. According to a recent report [1], 73% of US-based manufacturers and industrial leaders accelerated their adoption of new-generation technologies in response to the pandemic. Nearly four out of ten industrial leaders believed that artificial intelligence and machine learning (AI/ML) technologies could improve their ability to predict and mitigate risks in manufacturing and supply chains. By using GenAI, manufacturers can reduce operational costs, shorten production times, predict bottlenecks, and improve industrial output. Above all, trained GenAI professionals can work with industrial workers to boost product quality and improve user satisfaction. 82% of organizations that are either using or considering GenAI in their operations expect significant change and transformation in their industry [2].

Working with industrial generative AI platforms can be tricky, especially for first-time users. Concerns about the data security, privacy, and trustworthiness of LLMs and GenAI tools, combined with flawed data management practices, can disrupt industrial operations. Cognite, an industrial AI solution provider, offers a user-friendly, secure, and scalable industrial GenAI platform to analyze and act on complex industrial data and collaborate in real time. To understand how Cognite works with industrial generative AI models, our journalist Pooja spoke to the company’s Field CTO, Jason Schern.

Here’s the full interview with Jason.

Hi Jason, welcome to our AiThority Interview Series. Please tell us about your AI journey so far.

In 2023, I joined Cognite as Field CTO within its sales division. Before Cognite, I spent 25 years working with some of the world’s largest manufacturing companies to help them dramatically improve their data operations and machine learning analytics capabilities. I saw a growing and persistent struggle to scale solutions because of the increasing complexity and diversity of industrial data. In Cognite, I found a team focused on solving these challenges at scale, and I wanted to contribute to the impact it was having on the industry.

What has changed in the AI landscape since the arrival of GenAI capabilities?

The technology holds the potential to advance asset-heavy industries by improving operations, creating a safer environment for organizations, and ramping up efficiencies. However, to make Generative AI truly work for industry, data must be liberated from siloed source systems in ways that allow us to leverage the reasoning power of Large Language Models while limiting or eliminating their ability to be creative in the absence of deterministic data (hallucinations). AI disruption ultimately benefits industry by acting as a forcing function for companies to address their foundational data problem.

Data users often spend 40% to 70% of their time searching for, gathering, and cleaning data, costing businesses millions of dollars in working hours every year. This productivity bottleneck will only worsen in legacy architectures, as IDC predicts that data generation in asset-heavy organizations will triple in the next two to three years. Industrial organizations must first liberate all data across numerous siloed sources, then get the right data to the right subject matter experts (SMEs), with the right context, at the right time. When industrial data has been liberated from traditional silos and made accessible through deterministic frameworks like an industrial knowledge graph, generative AI becomes incredibly disruptive: natural language interfaces enable insanely simple access to data and eliminate the complexity that previously required SMEs to do advanced coding and data engineering.

Please tell us more about Cognite? What are your core offerings?

At Cognite, we create software that turns industrial data into customer value in three main ways:

- Delivering AI-assisted operational improvements with simple access to industrial data for all workers.

- Maximizing production efficiency with AI to detect and deal with production disruptions.

- Enabling safe and sustainable operations with AI and robotics to reduce total energy consumption, remove people from hazardous environments, and meet GHG goals.

Cognite Data Fusion® delivers revolutionary cross-domain collaboration to drive operational excellence with capabilities that include:

Cognite AI

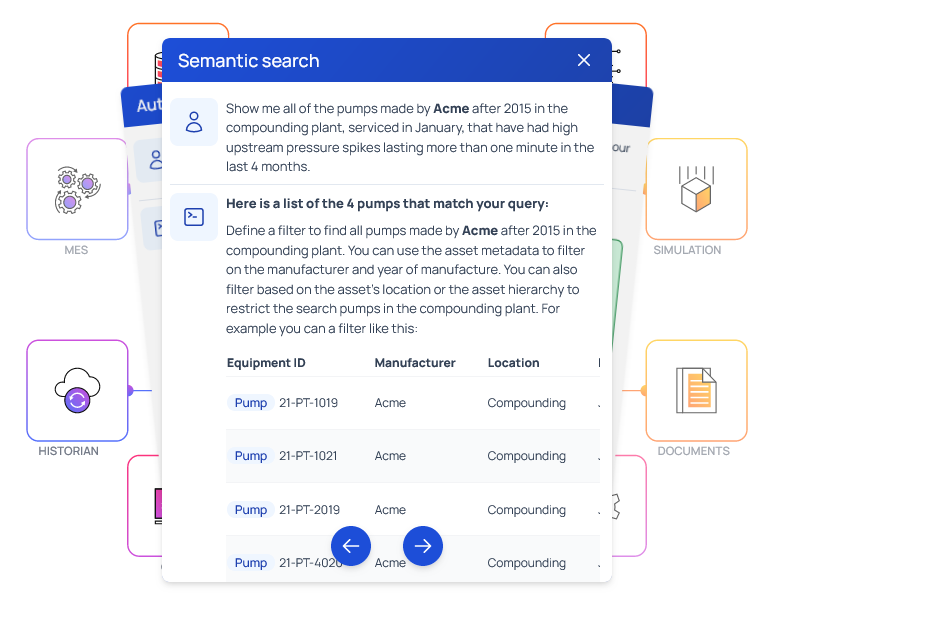

An innovative architecture that unifies generative AI (LLMs such as GPT-3.5/4) with Cognite’s specific Data Modeling and Retrieval-Augmented Generation (RAG) capabilities. Cognite AI uniquely augments the ability of general-purpose LLMs to retrieve data from customers’ private sources and generate more purposeful, accurate, and sophisticated outputs based on each customer’s private industrial data, within the customer’s secure and protected SaaS tenant. Cognite AI provides business end-users with a way to create low-code applications using natural language.
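To make the retrieval step more concrete, here is a minimal Python sketch of grounding an LLM prompt in private data. It is not Cognite’s implementation: it assumes a tiny in-memory document store, substitutes simple keyword overlap for a production retriever, and uses hypothetical names (`retrieve`, `build_grounded_prompt`) and sample records. No LLM is called; the output is the prompt that would be sent.

```python
import re

# Hypothetical private documents from a customer's own tenant.
DOCUMENTS = {
    "WO-1001": "Work order: replace seal on pump P-101 after vibration alarm.",
    "NOTE-17": "Operator note: pump P-101 vibration high during night shift.",
    "SPEC-3": "Compressor C-200 datasheet: rated pressure 40 bar.",
}


def tokenize(text: str) -> set[str]:
    """Lowercase word set; a crude stand-in for embeddings."""
    return set(re.findall(r"[a-z0-9-]+", text.lower()))


def retrieve(question: str, docs: dict[str, str], k: int = 2) -> list[str]:
    """Return IDs of up to k documents sharing the most words with the question."""
    q = tokenize(question)
    ranked = sorted(docs, key=lambda doc_id: len(q & tokenize(docs[doc_id])), reverse=True)
    # Drop documents with no overlap at all, so unrelated data never reaches the LLM.
    return [doc_id for doc_id in ranked[:k] if q & tokenize(docs[doc_id])]


def build_grounded_prompt(question: str, docs: dict[str, str], k: int = 2) -> str:
    """Assemble a prompt that tells the LLM to answer only from retrieved context."""
    context = "\n".join(f"[{doc_id}] {docs[doc_id]}" for doc_id in retrieve(question, docs, k))
    return (
        "Answer using only the context below and cite document IDs. "
        "If the context is insufficient, say so.\n\n"
        f"Context:\n{context}\n\nQuestion: {question}"
    )


print(build_grounded_prompt("Why is pump P-101 vibrating?", DOCUMENTS))
```

Keyword overlap is only a stand-in; a real system would use embeddings or graph traversal, but the principle is the same: the model sees only data selected from the customer’s own sources, with IDs it can cite.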

Industrial DataOps

Data onboarding from all OT, IT, engineering, and robotics data sources, complete with lineage, quality assurance, and governance. These market-leading Industrial DataOps capabilities are a requirement for a robust data foundation to scale asset-to-asset and site-to-site in hours and weeks, not months.
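As an illustration of onboarding with lineage and quality assurance, here is a minimal sketch that attaches a lineage trail to each incoming sensor reading and quarantines readings that fail basic checks. The `Datapoint` structure, the checks, and the valid range are assumptions chosen for illustration, not Cognite Data Fusion’s data model.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class Datapoint:
    """One sensor reading plus the lineage needed to trace it back to its source."""
    tag: str
    timestamp: datetime
    value: Optional[float]
    source_system: str  # hypothetical source name, e.g. a historian
    lineage: list[str] = field(default_factory=list)


def quality_issues(dp: Datapoint, valid_range: tuple[float, float]) -> list[str]:
    """Return quality problems for one reading; an empty list means it passes."""
    issues = []
    if dp.value is None:
        issues.append("missing value")
    elif not valid_range[0] <= dp.value <= valid_range[1]:
        issues.append(f"value {dp.value} outside {valid_range}")
    if dp.timestamp > datetime.now(timezone.utc):
        issues.append("timestamp in the future")
    return issues


def onboard(raw: list[Datapoint], valid_range: tuple[float, float]):
    """Split readings into accepted and quarantined, recording each step in lineage."""
    accepted, quarantined = [], []
    for dp in raw:
        dp.lineage.append(f"ingested from {dp.source_system}")
        issues = quality_issues(dp, valid_range)
        if issues:
            dp.lineage.append("quarantined: " + "; ".join(issues))
            quarantined.append(dp)
        else:
            dp.lineage.append("passed quality checks")
            accepted.append(dp)
    return accepted, quarantined


t = datetime(2024, 1, 1, tzinfo=timezone.utc)
points = [
    Datapoint("P-101.pressure", t, 12.5, "historian-A"),
    Datapoint("P-101.pressure", t, None, "historian-A"),
    Datapoint("P-101.pressure", t, 950.0, "historian-A"),
]
ok, bad = onboard(points, valid_range=(0.0, 100.0))
print(len(ok), len(bad))  # 1 2
```

Quarantining rather than deleting keeps failed readings traceable, which is what lets governance questions ("where did this number come from?") be answered later.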

Data Modeling

Built-in AI contextualization to automatically map data relationships, create a living industrial knowledge graph, and build the foundation for real-time digital twins that are automatically enriched with live data and event streams. Capture field worker notes and observations all in context, all instantly sharable.
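Cognite’s contextualization relies on its own AI models; the sketch below swaps in simple string similarity from Python’s standard-library `difflib` to show the general idea of mapping data relationships. It matches hypothetical time-series names to hypothetical asset tags and emits a knowledge-graph edge only when the best match clears an assumed confidence threshold.

```python
import difflib
import re

ASSETS = ["21-PT-1019", "21-PT-1020", "23-FT-3001"]  # hypothetical asset tags
TIMESERIES = ["VAL_21_PT_1019_PV", "VAL_23_FT_3001_PV", "VAL_99_XX_0000_PV"]  # hypothetical


def normalize(name: str) -> str:
    """Drop an assumed 'VAL_' prefix and '_PV' suffix, then all separators."""
    core = re.sub(r"^VAL_|_PV$", "", name.upper())
    return re.sub(r"[^A-Z0-9]", "", core)


def contextualize(timeseries, assets, threshold=0.9):
    """Return (timeseries, asset, score) edges for each best match at or above threshold."""
    edges = []
    for ts in timeseries:
        best_score, best_asset = max(
            (difflib.SequenceMatcher(None, normalize(ts), normalize(a)).ratio(), a)
            for a in assets
        )
        if best_score >= threshold:
            edges.append((ts, best_asset, round(best_score, 2)))
    return edges


for edge in contextualize(TIMESERIES, ASSETS):
    print(edge)
# ('VAL_21_PT_1019_PV', '21-PT-1019', 1.0)
# ('VAL_23_FT_3001_PV', '23-FT-3001', 1.0)
```

In practice, low-confidence matches such as `VAL_99_XX_0000_PV` would typically be routed to a subject matter expert for review rather than silently dropped.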

Industrial Canvas

Get all OT, IT, engineering, and robotics data (time series, events, P&IDs, documents, work orders, asset hierarchies, images, simulation, 3D, and more) in a single, collaborative workspace with native AI Copilot functionality to answer operational questions, compile and develop no-code applications, and analyze complex scenarios up to 90% faster than before.

APM Intelligence App Suite

Three ready-to-use, cross-data-source applications for digital-first business workflows across reliability, operations, and maintenance that accelerate value realization from existing APM investments without complex and costly rearchitecting and change management programs.

How does Cognite integrate into contemporary enterprise technology landscapes? What AI capabilities should a company have to benefit from your solutions and services?

Cognite is not working to solve generic data challenges; we are committed to delivering simple access to complex industrial data. That level of access is only possible when the technology and the people have the domain expertise to understand why that complexity exists and how to address it in a way that delivers trusted, interactive-level performance.

With a convergence of capabilities coming together through robotics, generative AI, and new ways of accessing diverse data, Cognite is well-equipped to help industrial companies become safer, more efficient, and more sustainable at any stage of their digital transformation journey.

What are the different ways to leverage LLMs to get more deterministic answers when working with Industrial Data?

The practical applications of LLMs in business operations are vast. LLMs can assist in automating repetitive tasks, generating personalized content, and analyzing customer feedback. Of most interest to industrial audiences, LLMs can also process vast amounts of text documents, extract relevant information, and summarize key findings, turning large volumes of unstructured data into usable insights. This capability supports research and development, data analysis, and decision-making, enabling businesses to draw insights from diverse sources of information more effectively.

For example, an LLM-based system can analyze maintenance reports, sensor logs, and operator notes to help operators efficiently navigate and discover relevant data, leading to better decision-making and improved operational efficiency.
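One deterministic building block for this kind of navigation is linking free-text notes to the equipment they mention, so that an assistant can retrieve every document about a given asset. The sketch below assumes a hypothetical tag convention (two digits, a two-letter code, four digits) and uses a regular expression rather than an LLM to build the index.

```python
import re
from collections import defaultdict

# Assumed tag convention: two digits, a two-letter instrument code, four digits.
TAG_PATTERN = re.compile(r"\b\d{2}-[A-Z]{2}-\d{4}\b")


def index_by_equipment(notes: dict[str, str]) -> dict[str, list[str]]:
    """Map each equipment tag mentioned in free text to the IDs of notes that mention it."""
    index = defaultdict(list)
    for note_id, text in notes.items():
        for tag in sorted(set(TAG_PATTERN.findall(text))):
            index[tag].append(note_id)
    return dict(index)


# Hypothetical maintenance report, operator note, and log entry.
notes = {
    "MR-1": "Replaced bearing on 21-PT-1019; vibration back to normal.",
    "OP-7": "Pressure spikes on 21-PT-1019 and 23-FT-3001 during startup.",
    "LOG-3": "Routine inspection, no findings.",
}
print(index_by_equipment(notes))
# {'21-PT-1019': ['MR-1', 'OP-7'], '23-FT-3001': ['OP-7']}
```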

LLMs are a powerful tool for industry, improving operations in ways that minimize downtime, reduce costs, and achieve higher overall efficiency. With their ease of use, adaptability, and practical applications, LLMs offer a user-friendly way for businesses to streamline operations, automate tasks, gain valuable insights, and drive innovation in their respective industries.

What is the recommended approach for Chief Technology Officers (CTOs) when navigating the transition to generative AI (GenAI) platforms?

Effective CTOs and digital transformation leaders embody the characteristics of a digital maverick. In the broadest sense, digital mavericks are leaders who take advantage of converging technologies to drive meaningful, business-wide transformation.

To successfully navigate the transition to generative AI, CTOs and industrial leaders should prioritize:

Ensuring safety and accuracy in AI implementation:

Creating a reliable digital representation of your industrial operations, such as an industrial knowledge graph, gives generative AI tools a deterministic view of the meaningful semantic relationships across highly divergent data types, so they can provide precise responses. For instance, by mapping every aspect of a manufacturing plant into a comprehensive digital twin, an LLM can translate a natural language prompt (e.g., “find all work orders for Siemens pumps that exceeded their scheduled completion time”) into code that navigates the knowledge graph and returns a deterministic response.
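The kind of code an LLM might generate for the Siemens pump prompt can be sketched against a toy in-memory knowledge graph. The node properties, the `performed_on` relation, and the `overdue_work_orders` function are hypothetical; this is not a Cognite API, only an illustration of why a graph traversal yields a deterministic answer.

```python
from datetime import date

# Toy knowledge graph: typed nodes plus (source, relation, target) edges. Hypothetical schema.
NODES = {
    "P-101": {"type": "Pump", "manufacturer": "Siemens"},
    "P-102": {"type": "Pump", "manufacturer": "Other Vendor"},
    "WO-1": {"type": "WorkOrder", "scheduled_end": date(2024, 3, 1), "actual_end": date(2024, 3, 5)},
    "WO-2": {"type": "WorkOrder", "scheduled_end": date(2024, 3, 1), "actual_end": date(2024, 2, 28)},
    "WO-3": {"type": "WorkOrder", "scheduled_end": date(2024, 4, 1), "actual_end": date(2024, 4, 9)},
}
EDGES = [
    ("WO-1", "performed_on", "P-101"),
    ("WO-2", "performed_on", "P-101"),
    ("WO-3", "performed_on", "P-102"),
]


def overdue_work_orders(manufacturer: str) -> list[str]:
    """Work orders on pumps from `manufacturer` that finished after their scheduled end."""
    pumps = {
        node_id for node_id, props in NODES.items()
        if props["type"] == "Pump" and props["manufacturer"] == manufacturer
    }
    result = []
    for src, rel, dst in EDGES:
        work_order = NODES[src]
        if rel == "performed_on" and dst in pumps and work_order["actual_end"] > work_order["scheduled_end"]:
            result.append(src)
    return sorted(result)


print(overdue_work_orders("Siemens"))  # ['WO-1']
```

Because the answer comes from explicit relationships, the same question returns the same work orders every time; the LLM’s role is limited to translating the question into the traversal.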

Partnering with reliable third-party vendors:

Choosing a trustworthy data partner that prioritizes quality ensures accurate and reliable AI outcomes. Without contextualized data, generative AI lacks the required information for deterministic and trustworthy responses. For instance, in the oil and gas industry, having precise data on drilling processes significantly impacts AI-driven predictive maintenance accuracy.

Swiftly scaling high-value initiatives:

Approach digital transformation with a focus on scaling impactful use cases rapidly across the enterprise. Digital mavericks who pursue ‘land and expand’ approaches that integrate new technology in the context of high-value use cases, with clear pathways from initial test concepts into scaled deployments, can achieve results that are 10x more valuable.

Reconsidering traditional thinking around DIY (do-it-yourself) projects and technology:

Challenge traditional beliefs around DIY AI solutions by prioritizing time-to-value over tech stack sophistication. Recognize the drawbacks of solely relying on in-house solutions and consider off-the-shelf components for faster and more cost-effective AI adoption. For example, an AI-driven customer service solution implemented through a SaaS model resulted in quicker deployment and lower long-term costs compared to a customized DIY solution.

As the industrial landscape continues to shift toward automation and data-driven decision-making, what does it truly take to enable conversational operations and software-based GenAI co-pilots?

As the industrial landscape shifts toward automation and data-driven decision-making, enabling conversational operations and software-based GenAI co-pilots requires liberating data from numerous siloed sources. The key lies in providing simple access to complex industrial data, ensuring that the right data reaches the appropriate subject matter experts (SMEs) with the right context and at the right time. This approach brings together formerly isolated SMEs, departments, platforms, and data deployed by both Operational Technology (OT) and Information Technology (IT) teams.

Industrial organizations must embrace Industrial DataOps, a strategy centered on breaking down silos and maximizing the availability and usability of industrial data across the enterprise. This is particularly crucial in asset-heavy industries such as oil and gas and manufacturing. By unifying goals and Key Performance Indicators (KPIs) across the enterprise, Industrial DataOps helps improve operational performance as industrial automation and data-driven decision-making evolve.

What are the true performance benchmarks to measure the effectiveness and quality of AI-assisted business operations?

Digital mavericks know that their organization’s charter and KPIs must be increasingly tied to business value and operational gains. Rather than counting proofs of concept, their KPIs must reflect business impact, successful scaling, and other product-like metrics:

- How can business value be measured, quantified, and directly attributed to the digital initiative? What ‘portfolio’ or ‘menu’ of value is being developed?

- How is adoption measured and user feedback implemented in the feedback loop?

- How many daily active operations users are actually in the tools delivered? How is the workflow changing as a result?

- How much do these business applications and solutions cost to deploy and maintain?

- What does it cost to scale to the next asset, site, or plant?

- What new business capabilities for multiple stakeholders are gained due to deploying a solution?

- Are these capabilities short-term, or will they persist (and drive value) over the long term?
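Two of these questions, adoption and the cost of scaling, reduce to simple arithmetic once usage and cost data are tracked. The sketch below computes daily active users from a hypothetical usage log and the marginal cost of each additional site from hypothetical cumulative deployment costs; all figures are invented for illustration.

```python
from collections import defaultdict
from datetime import date

# Hypothetical usage log: (day, user_id) pairs recorded when an operator opens the tool.
USAGE = [
    (date(2024, 5, 1), "op-a"), (date(2024, 5, 1), "op-b"), (date(2024, 5, 1), "op-a"),
    (date(2024, 5, 2), "op-a"), (date(2024, 5, 2), "op-c"), (date(2024, 5, 2), "op-d"),
]

# Hypothetical cumulative deployment cost after each site goes live (any currency).
CUMULATIVE_COST = [500_000, 620_000, 700_000]


def daily_active_users(usage):
    """Count distinct users per day, ordered by day."""
    users_by_day = defaultdict(set)
    for day, user in usage:
        users_by_day[day].add(user)
    return {day: len(users) for day, users in sorted(users_by_day.items())}


def marginal_site_costs(cumulative):
    """Cost of each additional site: the difference between successive cumulative totals."""
    return [later - earlier for earlier, later in zip(cumulative, cumulative[1:])]


print(daily_active_users(USAGE))  # {datetime.date(2024, 5, 1): 2, datetime.date(2024, 5, 2): 3}
print(marginal_site_costs(CUMULATIVE_COST))  # [120000, 80000]
```

A falling marginal cost per site is one signal that a solution is scaling rather than being rebuilt for each deployment.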

How can AI-powered digital twins transform siloed industrial environments?

AI-powered digital twins have the transformative potential to break down siloed industrial environments by fostering relationships across Operational Technology (OT), Information Technology (IT), and engineering data. The key lies in the development of an open industrial digital twin through contextualization pipelines.

A significant advantage of employing data modeling for digital twins is the departure from a singular, monolithic approach. Instead, the focus is on creating smaller, tailored twins that cater to the specific needs of different teams within the industrial ecosystem. The industrial knowledge graph serves as the foundational element for the data model of each twin, offering a point of access for data discovery and application development.

To effectively address diverse operational decision-making processes, companies should adopt multiple digital twins, each tailored to different decision types. These may include digital twins for supply chain management, various operating conditions, maintenance insights, visualization, simulation, and more.
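One way to picture several tailored twins built on a single knowledge graph is as scoped views over shared data. In the sketch below, each twin is assumed to be defined by the node types its team needs; the graph, the type names, and the `twin_view` function are hypothetical.

```python
# Shared knowledge graph (hypothetical): node -> type, plus typed edges.
NODE_TYPES = {
    "P-101": "Pump", "WO-1": "WorkOrder", "TS-PRESS": "TimeSeries",
    "SUP-9": "Supplier", "PO-44": "PurchaseOrder",
}
EDGES = [
    ("WO-1", "performed_on", "P-101"),
    ("TS-PRESS", "measures", "P-101"),
    ("PO-44", "orders_part_for", "P-101"),
    ("PO-44", "placed_with", "SUP-9"),
]

# Each tailored twin declares only the node types its team needs (assumed definitions).
TWIN_SCOPES = {
    "maintenance": {"Pump", "WorkOrder", "TimeSeries"},
    "supply_chain": {"Pump", "PurchaseOrder", "Supplier"},
}


def twin_view(name: str):
    """Return the nodes and edges of the shared graph that fall inside a twin's scope."""
    scope = TWIN_SCOPES[name]
    nodes = {node for node, node_type in NODE_TYPES.items() if node_type in scope}
    edges = [(s, r, d) for s, r, d in EDGES if s in nodes and d in nodes]
    return nodes, edges


nodes, edges = twin_view("supply_chain")
print(sorted(nodes))  # ['P-101', 'PO-44', 'SUP-9']
print(edges)  # [('PO-44', 'orders_part_for', 'P-101'), ('PO-44', 'placed_with', 'SUP-9')]
```

Both twins share the pump node `P-101`, so an update to the shared graph is reflected in every view without copying data.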

Crucially, the value of data is realized only when businesses trust and actively use it. Industrial enterprises must instill trust in the data they integrate into solutions, spanning from dashboards to digital twins and even extending to generative AI-powered solutions. This trust ensures that AI-powered digital twins become integral tools in enhancing the overall understanding and efficiency of industrial operations.

What AI initiatives are you currently focusing on and why?

We are heavily focused on knowledge graphs constructed by combining data sets from diverse sources, each varying in structure.

Knowledge graphs use machine learning to construct a holistic representation of nodes, edges, and labels through a process known as semantic enrichment. By applying this process during data ingestion, knowledge graphs can discern individual objects and comprehend the relationships between them. This accumulated knowledge is then compared and fused with other data sets that share relevance and similarity.
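Cognite describes this enrichment as machine-learning driven; as a simplified stand-in, the sketch below uses rule-based identifier normalization to fuse two differently structured sources (ERP-style tuples and maintenance-style dictionaries) into one node per equipment item, with an edge to its area. The sources, records, and function names are invented for illustration.

```python
import re

# Two hypothetical sources that describe overlapping equipment in different shapes.
ERP_ROWS = [("EQ 21PT1019", "Pressure transmitter", "Area 21")]
MAINT_RECORDS = [
    {"equipment": "21-PT-1019", "last_service": "2024-02-10"},
    {"equipment": "23-FT-3001", "last_service": "2024-01-22"},
]


def canonical_id(raw: str) -> str:
    """Normalize differently formatted identifiers to one key (a rule-based stand-in for ML)."""
    return re.sub(r"[^A-Z0-9]", "", raw.upper().removeprefix("EQ "))


def fuse(erp_rows, maint_records):
    """Merge both sources into one node per equipment item, linking each ERP item to its area."""
    nodes, edges = {}, []
    for raw_id, description, area in erp_rows:
        key = canonical_id(raw_id)
        node = nodes.setdefault(key, {"sources": []})
        node["description"] = description
        node["sources"].append("erp")
        edges.append((key, "located_in", area))
    for record in maint_records:
        node = nodes.setdefault(canonical_id(record["equipment"]), {"sources": []})
        node["last_service"] = record["last_service"]
        node["sources"].append("maintenance")
    return nodes, edges


nodes, edges = fuse(ERP_ROWS, MAINT_RECORDS)
print(nodes["21PT1019"])
# {'sources': ['erp', 'maintenance'], 'description': 'Pressure transmitter', 'last_service': '2024-02-10'}
```

The fused node now carries facts from both systems, which is the "compared and fused" step in miniature; an ML-based matcher would replace `canonical_id` when identifiers are too inconsistent for rules.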

Where do you see AI/ML heading beyond 2024?

One of the most common misconceptions about AI in data-heavy industries is that the cost will be a dealbreaker. While it’s true that standard generative AI can be expensive (consider that, at current API rates, a GPT-4 query carrying large volumes of unfiltered industrial data could cost tens of dollars), we can use Retrieval Augmented Generation, or RAG, to control that cost. RAG allows us to send only the most relevant data to the LLM for analysis. This not only lets us work within the LLM’s finite token limits and significantly increases the trustworthiness of the response; it also reduces the need to over-engineer prompts or perform expensive, extensive retraining of the LLM. One could argue that RAG is to LLMs what data engineering is to analytics: carefully selecting and preparing the correct data before sending it for analysis.
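The cost argument can be illustrated with a minimal sketch of RAG-style context selection: greedily keep the most relevant chunks that fit a token budget, then compare the input cost with sending everything. The four-characters-per-token estimate, the per-1K-token price, and the relevance scores are all assumptions; a real deployment would use the provider’s tokenizer and current pricing.

```python
from dataclasses import dataclass

PRICE_PER_1K_INPUT_TOKENS = 0.03  # hypothetical rate in dollars; not a quoted price


@dataclass
class Chunk:
    text: str
    relevance: float  # assumed to come from an upstream retriever, 0 to 1


def estimate_tokens(text: str) -> int:
    """Rough heuristic: about four characters per token for English text."""
    return max(1, len(text) // 4)


def select_context(chunks: list[Chunk], token_budget: int) -> list[Chunk]:
    """Greedily keep the most relevant chunks that still fit in the token budget."""
    selected, used = [], 0
    for chunk in sorted(chunks, key=lambda c: c.relevance, reverse=True):
        tokens = estimate_tokens(chunk.text)
        if used + tokens <= token_budget:
            selected.append(chunk)
            used += tokens
    return selected


def prompt_cost(chunks: list[Chunk]) -> float:
    """Input-token cost of sending these chunks at the hypothetical rate."""
    return sum(estimate_tokens(c.text) for c in chunks) / 1000 * PRICE_PER_1K_INPUT_TOKENS


# 50 large, weakly relevant chunks plus one short, highly relevant chunk (invented data).
corpus = [Chunk("x" * 40_000, 0.1) for _ in range(50)]
corpus.append(Chunk("pump P-101 seal failure " * 20, 0.9))

full_cost = prompt_cost(corpus)
rag = select_context(corpus, token_budget=4_000)
print(len(rag), f"{full_cost:.2f}", f"{prompt_cost(rag):.4f}")  # 1 15.00 0.0036
```

Greedy packing by relevance is the simplest possible selection policy; it also shows the token-limit point, since the unfiltered corpus here (about 500,000 estimated tokens) would not fit in a single prompt at all.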

Thank you, Jason! That was fun and we hope to see you back on AiThority.com soon.

1. The Workforce Institute at UKG, The Resilience of Manufacturing Report.

2. Google Cloud, Gen AI Benchmarking Study, July 2023.

Jason serves as Cognite’s Field CTO. He has spent the last 25 years working with some of the largest discrete manufacturing companies to dramatically improve their data operations and machine learning analytics capabilities.

Jason’s experiences led him to Cognite, where he brings his passion for the value and impact of trusted, accessible, contextualized industrial data at scale.

Cognite makes Generative AI work for industry. Leading energy, manufacturing, and power and renewables enterprises choose Cognite to deliver secure, trustworthy, and real-time data to transform their asset-heavy operations to be safer, more sustainable and more profitable. Cognite provides a user-friendly, secure, and scalable industrial DataOps platform, Cognite Data Fusion®, that makes it easy for all decision-makers, from the field to remote operations centers, to access and understand complex industrial data, collaborate in real-time, and build a better tomorrow.
