Role of AI in Cybersecurity: Protecting Digital Assets From Cybercrime

Introduction

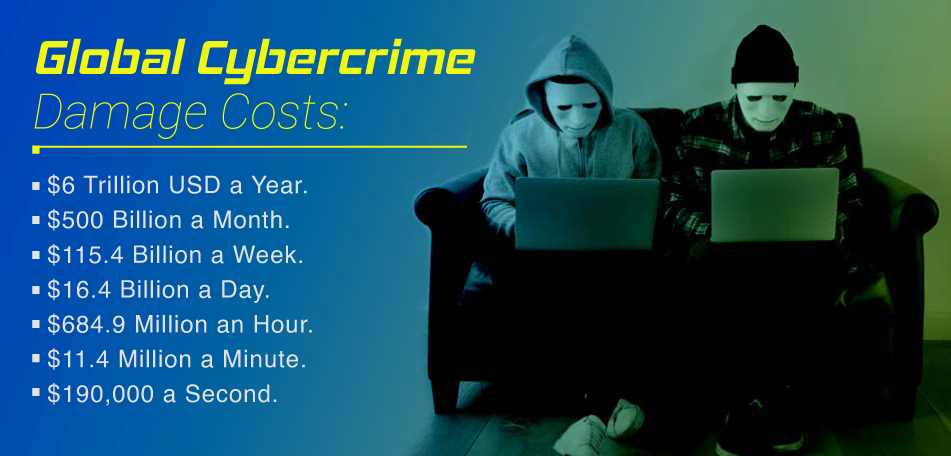

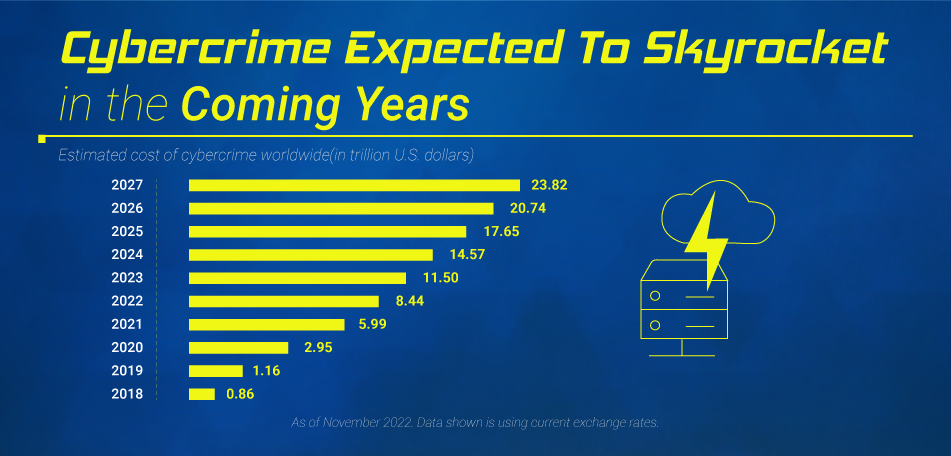

Cybercrime To Cost The World $10.5 Trillion Annually By 2025

Are your digital assets safe online?

Do you know how you keep your things safe at home?

Well, digital assets are like that, but they’re stored on computers or the internet instead of at your home. Some digital assets are for fun, like photos you share with friends or videos you watch. Others are more serious, like important documents for your work. Either way, all of them need protecting to keep your information private.

So, let’s discuss the key strategies for protecting digital assets, the AI tools for cybersecurity, and how you can keep yourself safe.

Cybercrime poses significant threats to digital assets, including data breaches, ransomware attacks, and identity theft. Implementing robust cybersecurity measures is essential to safeguard sensitive information and mitigate risks. We will also discuss data encryption and endpoint security.

Before diving deeper into the role of AI in cybersecurity, let’s first understand what digital assets are and what exactly cybercrime is.

TOC

- What are Digital Assets?

- What is Cybercrime?

- What are the Common Types of Cyber Attacks?

- Common Types of Ransomware

- 11 Exclusive Insights from Industry Leaders

- Potential Impact of Cybercrime on Digital Assets

- Key Strategies for Protecting Digital Assets

- Current trends: Role of AI in Cybersecurity

- Advantages and Disadvantages of AI in Cybersecurity

- Top 15 AI Tools for Cybersecurity

- Legal and Regulatory Considerations

- Use Cases

- Statistics- AI in Cybersecurity

- FAQ’s

- Conclusion

What Are Digital Assets?

Digital assets are the things you keep on your computer or online. That could be anything from pictures, videos, and documents to virtual money like Bitcoin. Anything you own in the digital world is a digital asset. Think about all the photos on your phone or computer: those are digital assets. If you’ve ever made a cool video or written a story on your computer, that’s a digital asset too. Even the money you have in an online account or a cryptocurrency wallet is a digital asset.

Digital assets include:

- Documents

- Audio content

- Animations

- Media files

- Cryptocurrencies

- Intellectual property

- Personal photos and videos

- Financial records

- Other valuable digital content

What Is Digital Asset Management (DAM)?

Digital asset management (DAM) refers to both the business processes and the information management systems an organization uses to store, organize, and retrieve its digital assets. Many organizations use DAM to centralize their media assets. A DAM solution puts asset management in one place and streamlines rich media production, especially for sales and marketing teams.

Automatic asset updates and brand rules provide a single source of truth for enterprises and a more consistent user experience for external audiences. DAM’s search tools let modern digital content management teams and marketers reuse existing assets, lowering production costs and cutting redundant work streams. To build brand authority and drive commercial growth, brands must keep their images and messaging consistent across digital channels such as social media.

What Is Cybercrime?

Cybercrime is any crime committed using computers or the internet. Hacking, phishing, fraud, and malware all fall into this category. Cybercriminals exploit security loopholes in technology to steal personal data and financial assets or to disrupt business operations.

People, companies, and governments are all vulnerable to cybercrime. It can cause monetary losses, reputational harm, and the exposure of private information. Using strong passwords, keeping software up to date, and being cautious when sharing personal information online all help you protect yourself. Law enforcement and cybersecurity professionals fight cybercrime by investigating incidents, implementing cybersecurity measures, and raising public awareness.

Criminals often use bogus emails and websites. They may impersonate your bank and ask for your password or credit card information. This is called phishing. Another method is spreading malware or viruses: small malicious programs that infect your computer and steal or damage your data. They may even lock your computer or files until you pay. That’s ransomware. Other attacks flood websites with traffic until they crash. This is a DDoS attack, a kind of cyber traffic jam. Let’s look at the types of cyberattacks so you know what to watch for and can stay safer.

What Are The Common Types Of Cyber Attacks?

Common types of cyber attacks include phishing, malware attacks, ransomware, DDoS (Distributed Denial of Service) attacks, and insider threats. Cybercriminals often use phishing emails, fake websites, malware, or hacking techniques to steal personal information like passwords, credit card numbers, and social security numbers.

1. Malware: This is short for “malicious software.” Malware includes viruses, worms, Trojans, and ransomware. It’s designed to infiltrate computer systems and cause damage, steal data, or demand ransom.

2. Phishing: Phishing attacks trick people into giving away their personal information, such as passwords or credit card numbers. Attackers send fake emails or messages that look like they’re from legitimate sources, like banks or companies, to lure victims into clicking on malicious links or providing sensitive information.

3. Denial-of-Service (DoS) and Distributed Denial-of-Service (DDoS) attacks: These attacks overwhelm websites or computer networks with a flood of traffic, causing them to crash or become unavailable to legitimate users. DoS attacks come from one source, while DDoS attacks come from multiple sources, making them more difficult to stop.

4. Man-in-the-Middle (MitM) attacks: In MitM attacks, hackers intercept communication between two parties, such as a user and a website, without either party knowing. This allows attackers to eavesdrop on or manipulate the communication, potentially stealing sensitive information.

5. SQL Injection: SQL injection attacks target websites or web applications that use SQL databases. Hackers inject malicious SQL code into input fields on websites, exploiting vulnerabilities in the database to gain unauthorized access or manipulate data.

6. Cross-Site Scripting (XSS): XSS attacks inject malicious scripts into web pages viewed by other users. When unsuspecting users visit these pages, their browsers execute the malicious scripts, allowing attackers to steal cookies, session tokens, or other sensitive information.

7. Password Attacks: Password attacks involve guessing or stealing passwords to gain unauthorized access to accounts or systems. These attacks can take various forms, including brute force attacks (trying many possible passwords until the correct one is found), dictionary attacks (trying common words or phrases), or using stolen password databases.

8. Social Engineering: Social engineering attacks manipulate people into revealing confidential information or performing actions that compromise security. This can involve techniques such as impersonation, pretexting, or baiting to trick individuals into trusting the attacker and divulging sensitive information.
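To make the SQL injection item in the list above more concrete, here is a minimal sketch using Python’s built-in `sqlite3` module. The `users` table, the helper names (`make_demo_db`, `find_user_unsafe`, `find_user_safe`), and the payload are all made up for illustration and are not taken from any real application. The sketch contrasts a query built by pasting user input into the SQL text with one that passes the input as a bound parameter.

```python
import sqlite3


def make_demo_db():
    # Hypothetical in-memory database with two example users.
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


def find_user_unsafe(conn, username):
    # Vulnerable: the input is pasted straight into the SQL text,
    # so a crafted value can change the meaning of the query.
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: the ? placeholder binds the input as data, never as SQL code.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "nobody' OR '1'='1"  # classic injection attempt
    print("unsafe:", find_user_unsafe(conn, payload))
    print("safe:  ", find_user_safe(conn, payload))
```

With the injected payload, the unsafe version matches every row in the table, while the parameterized version looks for a user literally named `nobody' OR '1'='1` and finds none. Parameterized queries are only one layer of defense; input validation and least-privilege database accounts still matter.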

Other Cyberattacks Include

- Insider threats, where individuals within an organization misuse their access privileges to compromise security.

- Zero-day attacks, exploiting vulnerabilities that are unknown to software developers or security experts.

- Drive-by downloads, where malware is automatically downloaded onto a device when visiting a compromised website.

- Botnet attacks, using networks of infected devices to carry out coordinated cyber attacks.

- Cryptojacking, secretly using someone else’s device to mine cryptocurrency without their consent.

- Advanced Persistent Threats (APTs), long-term targeted attacks by skilled adversaries to gain unauthorized access to systems.

- Fileless attacks, exploiting vulnerabilities in software or hardware without leaving traditional traces like files or registry entries.

- Wi-Fi eavesdropping, intercepting Wi-Fi communications to capture sensitive information.

- Ransomware attacks, encrypting files or systems and demanding payment for their release.

- Credential stuffing attacks, using stolen username and password combinations to gain unauthorized access to accounts.

- Supply chain attacks, compromising software or hardware through vulnerabilities in third-party providers to infiltrate target systems.

Common Types Of Ransomware

1. Ryuk

Ryuk is a highly targeted ransomware variant. It is most often delivered through spear phishing emails or by using stolen credentials to log in to company systems over the Remote Desktop Protocol (RDP). Once Ryuk has infected a system, it encrypts certain types of files and then demands a ransom to decrypt them. Ryuk is one of the most notoriously costly forms of ransomware: the average ransom it demands is more than $1 million. Because of this, the hackers behind Ryuk primarily target businesses with enough resources to pay.

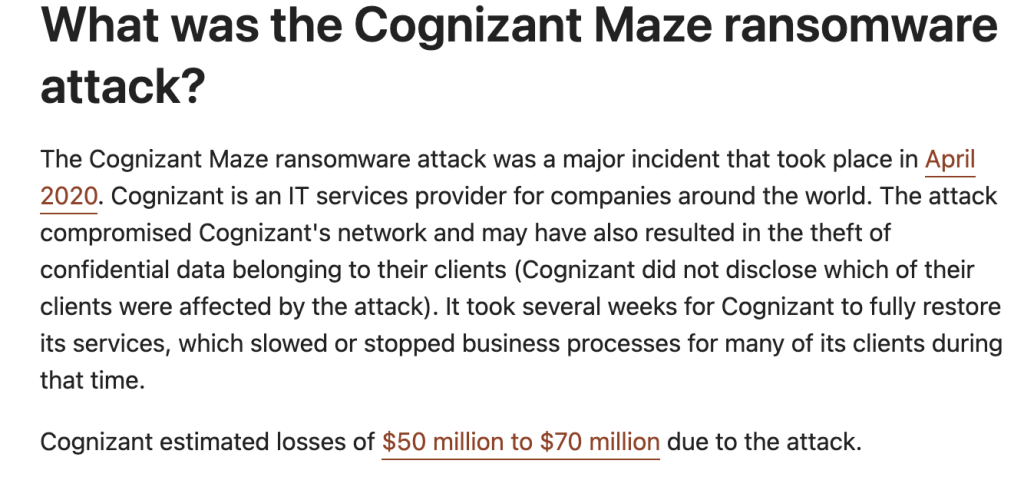

2. Maze

The Maze ransomware made headlines as the first variant to combine file encryption with data theft. When targets began refusing to pay ransoms, Maze started collecting sensitive data from victims’ PCs before encrypting it. If the ransom demands were not met, this data would be made public or sold to the highest bidder. The prospect of a costly data leak was an extra incentive to pay up. The group behind Maze has since announced the end of its operations, but that doesn’t make ransomware any less of a menace. Some Maze affiliates have switched to Egregor, and the Egregor, Maze, and Sekhmet variants are thought to share a common source.

3. REvil

The REvil group, also known as Sodinokibi, likewise targets large organizations. REvil is one of the best-known ransomware families. Operated by a Russian-speaking group since 2019, it has been responsible for numerous high-profile breaches, including ‘Kaseya’ and ‘JBS.’ For the past few years it has vied with Ryuk for the title of most costly ransomware variant; REvil is known to have demanded ransoms of $800,000. Although REvil started as a standard ransomware strain, it has since adopted the double extortion approach: it steals data from organizations as well as encrypting their files. In addition to demanding a ransom to decrypt the data, the attackers may threaten to release the stolen material unless a second payment is made.

4. LockBit

LockBit is data-encrypting malware that has been active since September 2019 and more recently operates as Ransomware-as-a-Service (RaaS). It was designed to encrypt large organizations’ systems quickly, before security appliances and IT/SOC teams can detect it.

5. DearCry

In March 2021, Microsoft released patches for four vulnerabilities in Microsoft Exchange servers. DearCry is a new ransomware variant designed to exploit these four recently disclosed vulnerabilities.

DearCry encrypts certain types of files. Once encryption is complete, it displays a ransom message telling victims to email the ransomware operators to learn how to decrypt their files.

6. Lapsus$

Lapsus$ is a cyber gang known for extortion: it threatens to release victims’ confidential information unless they meet its demands. The gang has boasted of hacking companies including Samsung, Nvidia, and Ubisoft. It uses stolen source code to make malware files look legitimate.

AITHORITY 11 Exclusive Insights From The Industry

“AI’s threat to cybersecurity is often framed in terms of existing defenses being overwhelmed by a flood of new, automated, cheap-to-initiate attacks from the outside. At Mindgard we see an even bigger threat. As businesses race to adopt AI and reap its competitive advantages, they are inadvertently creating a massive new attack surface that today’s cybersecurity tools are ill-equipped to defend. Even advanced AI systems like ChatGPT and foundation models now being incorporated into enterprise applications exhibit vulnerabilities to an array of threats, from data poisoning and model extraction to outright data leakage by malicious actors.

The risks are as much internal as external. AI models can memorize sensitive data used in training, creating the danger of data leakage through model inversion attacks. Attackers who gain access to APIs can probe models to syphon intellectual property and reconstruct proprietary models at a fraction of the original development cost. Bad actors can embed backdoors and trojans into open-source models which then get deployed into enterprise systems. Documents uploaded to an LLM can contain hidden instructions that are executed by connected system components.

Legacy vulnerability scanning, pentesting and monitoring tools simply aren’t designed for the complexities of modern AI. Conventional pentesting and red teaming, if performed at all, is expensive, time-consuming, and quickly outdated by frequent model releases and updates. Meanwhile, the talent gap for AI security experts is vast and growing. The result is a rapidly-expanding universe of unseen AI vulnerabilities across organisations, threatening both acute damage like data breaches as well as long-term erosion of IP and competitive positioning. To stay ahead of these threats, a fundamentally new approach is needed – one that applies the speed and scale of AI itself to the challenges of red teaming, vulnerability detection, and automated remediation. Only by embracing AI as a cybersecurity defence can organisations securely harness its potential and safeguard their digital futures.”

Dr. Jane Thomason, World Metaverse Council, Web3.0 Leader of the Year

“This post is drawn from the excellent Chainalysis 2024 Cryptocrime Report. In recent years, cryptocurrency hacking has become a significant threat, leading to billions of dollars stolen from crypto platforms and exposing vulnerabilities across the ecosystem. Attack vectors affecting DeFi are sophisticated and diverse. Therefore, it is important to classify them to understand how hacks occur and how protocols might reduce their likelihood in the future. On-chain attack vectors stem not from vulnerabilities inherent to blockchains themselves but rather from vulnerabilities in the on-chain components of a DeFi protocol, such as their smart contracts. These aren’t a point of concern for centralized services, as centralized services don’t function as decentralized apps with publicly visible code the way DeFi protocols do.

The classification of attacks is summarised below:

- Protocol exploitation (on-chain): When an attacker exploits vulnerabilities in a blockchain component of a protocol, such as ones pertaining to validator nodes, the protocol’s virtual machine, or the mining layer.

- Insider attack (off-chain): When an attacker working inside a protocol, such as a rogue developer, uses privileged keys or other private information to steal funds directly.

- Phishing (off-chain): When an attacker tricks users into signing permissions, often by supplanting a legitimate protocol, allowing the attacker to spend tokens on users’ behalf. Phishing may also happen when attackers trick users into directly sending funds to malicious smart contracts.

- Contagion (on-chain): When an attacker exploits a protocol due to vulnerabilities created by a hack in another protocol. Contagion also includes hacks that are closely related to hacks in other protocols.

- Compromised server (off-chain): When an attacker compromises a server owned by a protocol, disrupting the protocol’s standard workflow or gaining knowledge to further exploit the protocol in the future.

- Wallet hack (off-chain): When an attacker exploits a protocol that provides custodial/wallet services and subsequently acquires information about the wallet’s operation.

- Price manipulation hack (on-chain): When an attacker exploits a smart contract vulnerability or utilizes a flawed oracle that does not reflect accurate asset prices, facilitating the manipulation of a digital token’s price.

- Smart contract exploitation (on-chain): When an attacker exploits a vulnerability in a smart contract’s code, which typically grants direct access to various control mechanisms of a protocol and to token transfers.

- Compromised private key (off-chain): When an attacker acquires access to a user’s private key, which can occur through a leak or a failure in off-chain software, for example.

- Governance attacks (on-chain): When an attacker manipulates a blockchain project with a decentralized governance structure by gaining enough influence or voting rights to enact a malicious proposal.

- Third-party compromised (off-chain): When an attacker gains access to an off-chain third-party program that a protocol uses, which provides information that can later be used for an exploit.

Off-chain attack vectors stem from vulnerabilities outside of the blockchain. One example could be the off-chain storage of private keys in a faulty cloud storage solution, which applies to both DeFi protocols and centralized services.

In March 2023, Euler Finance, a borrowing and lending protocol on Ethereum, experienced a flash loan attack, leading to roughly $197 million in losses. July 2023 saw 33 hacks, the most of any month, which included $73.5 million stolen from Curve Finance. Similarly, several large exploits occurred in September and November 2023 on both DeFi and CeFi platforms.

Hacking remains a significant threat. Protecting your digital assets from hacking is of utmost importance, especially now that cyber threats continue to increase. Measures to protect your digital assets include:

1. Use Strong Passwords: Create strong and unique passwords for all your accounts and avoid using the same password for multiple accounts. Use a mix of uppercase and lowercase letters, numbers, and symbols.

2. Two-Factor Authentication: Enable two-factor authentication for all your accounts where possible. This adds an extra layer of security to your accounts.

3. Keep Your Software Up-to-date: Keep all your software, including anti-virus and anti-malware software, up-to-date to ensure that it has the latest security patches.

4. Use a Hardware Wallet: Consider using a hardware wallet to store your digital assets offline. This will ensure your assets are safe even if your computer or mobile device is hacked.

5. Be Careful with Phishing Emails: Be wary of phishing emails that appear to be from legitimate sources. Do not click on any links or download any attachments from such emails.

6. Use Reputable Exchanges: Only use reputable exchanges to buy, sell, and store digital assets. Research the exchange thoroughly before using it.

7. Backup Your Data: Regularly back up your data to ensure that you keep access to your digital assets in case of a hack or a hardware failure.”
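As an illustration of the first tip above (a mix of uppercase and lowercase letters, numbers, and symbols), here is a minimal sketch of a password generator built on Python’s standard `secrets` module, which is intended for security-sensitive randomness. The function name `generate_password`, the default length of 16, and the choice of character set are assumptions made for this example, not a recommendation from the quoted author. In practice, a reputable password manager does the same job and also remembers the result for you.

```python
import secrets
import string

# Character pool: letters, digits, and ASCII punctuation (an illustrative choice).
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length=16):
    """Return a random password containing all four character classes."""
    if length < 4:
        raise ValueError("length must be at least 4")
    while True:
        candidate = "".join(secrets.choice(ALPHABET) for _ in range(length))
        # Retry until the candidate has lowercase, uppercase, digit, and symbol.
        if (any(c.islower() for c in candidate)
                and any(c.isupper() for c in candidate)
                and any(c.isdigit() for c in candidate)
                and any(c in string.punctuation for c in candidate)):
            return candidate


if __name__ == "__main__":
    # Generate a different password for each account rather than reusing one.
    print(generate_password())
```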

Chirag Shah, Global Information Security Officer, Model N

“Why Should You Integrate Cybersecurity into Your Company Culture?

Many organizations neglect the most important aspect of implementing a cybersecurity strategy — the need to foster a security-focused culture. Up to 90% of data breaches stem from human error, meaning even the most robust protection measures can be thwarted by employee mistakes. Education is a foundational aspect of cybersecurity, but a true security-centric culture goes beyond handbooks and online courses. To drive behavioral change, leaders must make training dynamic and relevant while positioning the security team as a strategic partner.

Training strategies for a security culture

Research indicates that only 10% of employees remember all of their cybersecurity training. Companies must get creative to raise that number. Consider the following strategies.

● Make training relevant.

People are more likely to adopt behaviors that protect them personally, but many organizations only highlight cybersecurity’s importance to the business. Leaders should reposition their training to focus on knowledge that is relevant beyond the workplace. Training programs should teach cybersecurity skills that employees can use in their personal lives, such as best practices for online shopping and using public Wi-Fi. This content directly impacts individuals, resulting in better engagement and retention. This approach benefits the companies in two ways. First, practicing good cybersecurity skills at home transfers to better practices at work. Second, many people use their work computers for personal activities. Any risky behavior they take on that device compromises company security.

● Build targeted training.

Companies should build educational content targeted to specific groups. Threats facing the finance department differ from those facing the IT department, so why would they receive the same training? Tailoring content to focus on the most relevant, pressing threats to each department or cohort makes training more engaging and impactful. Employees will understand the material’s relevance and can concentrate on the elements most pertinent to their specific job functions.

● Introduce variety.

Seeing the same cybersecurity presentation repeatedly can cause employees to tune out. Security teams must execute a variety of educational approaches to maintain attention. Targeted, live presentations or small group sessions increase engagement by allowing opportunities for real-time discussions and questions. Lunch-and-learns are another approach. Everyone loves free food, and this type of event creates a more casual environment. Don’t be afraid to get creative. For example, consider hosting a unique training session tailored specifically for employees and their children. Parents can learn about protecting their kids online, while picking up useful skills to apply in their own lives. Unique experiences like this help demonstrate an organization’s commitment to fostering a culture of security that permeates every aspect of our employees’ lives.

Evaluating training success

Offering training, however engaging, is only the first step to encouraging good cybersecurity practices. Organizations must evaluate the training’s effectiveness by monitoring engagement metrics, such as how many people use a phishing reporting tool. This proactive, data-driven approach enables organizations to continuously adapt and improve training programs.

Cybersecurity is a partnership

When employees perceive cybersecurity as a laundry list of rules separate from their daily activities, they are more likely to bypass security controls or disregard guidance entirely. To overcome this divide, cybersecurity teams must become strategic partners rather than bureaucratic obstacles. Security professionals can establish themselves as trusted advisors by fostering strong relationships and showcasing a sincere commitment to enabling business success. Teams should engage with each department to understand its unique challenges and objectives and create policies that support organizational goals. Employees are more likely to embrace cybersecurity best practices from a source they perceive as having their best interests at heart.

When presented with a new initiative or technology proposal, the security department should focus on finding solutions rather than outright denying requests. Security professionals can work with business units to identify innovative approaches to achieve goals without compromising security. Actively listening and demonstrating a willingness to find common ground foster a culture of partnership and trust. Security is a business enabler. It must be deeply embedded into an organization’s essence, not treated as an afterthought. Effective security leaders recognize that their role extends far beyond mandatory training sessions; instead, they focus on inspiring a fundamental mindset shift in employees. By adopting a comprehensive, people-centric strategy, security becomes a shared value that permeates every level of the organization.”

Philipp Pointner, Chief of Digital Identity, Jumio Corporation

“Advancing AI-powered cyber threats have rendered businesses and customers at a heightened risk of identity theft. Leveraging sophisticated tools, cybercriminals can quickly launch convincing attacks involving synthetic identities or deepfakes of voices and images to bypass traditional security measures. With 90% of organizations suffering identity-related breaches, modern solutions are required to deter these threats. Now, there’s a powerful technology emerging that is helping businesses fight AI, with AI: predictive analytics. Traditional methods of fraud detection are often reactive, relying on analysis of past incidents. This leaves businesses vulnerable to new and ever-evolving threats. This is where AI-powered predictive analytics steps in. It goes beyond simple identity verification by incorporating advanced behavioral analysis. This allows for the identification of complex fraudulent connections with increased speed and accuracy, giving businesses a crucial edge.

AI-powered analytics can both prevent and predict fraudsters’ next move. By analyzing vast datasets and identifying patterns and connections in real time, this technology empowers security teams to mitigate threats before they even occur. This proactive approach is crucial in today’s fast-paced digital environment, where attackers are constantly innovating. Modern predictive analytics technology is also equipped with fraud risk scoring, which allows organizations to prioritize threats and allocate resources more effectively, focusing on the areas with the highest potential for loss. The combination of graph database technology and AI is also enabling security teams to visualize connections across entire networks. This unveils hidden patterns and exposes larger fraud rings, giving businesses a more comprehensive picture of the threats they face.

AI-powered predictive analytics technology represents a significant leap forward in the fight against fraud. By employing a data-driven defense strategy, organizations can gain valuable insights and proactively safeguard themselves against potential risks. This empowers them to not only react to the latest cyberattacks but anticipate them, ultimately staying ahead of the curve in this ever-changing digital landscape.”

Imtiaz Mohammady, Founder and CEO of Nisum

“Are your digital assets safe online?

When it comes to cybersecurity, hackers are constantly upping their game. The retail industry alone saw a 75% surge in ransomware attacks in 2022, meaning retailers need to be extra vigilant. Zooming in on the industry, I see POS systems as the bullseye for these attacks. Hackers will do anything to get their hands on customer credit card data. That’s why every store needs a layered defense. Strong, unique passwords; encryption for sensitive data is a must-have too. But the real bit that’s going to make a difference is training employees. They’re the frontline soldiers, so make sure they can spot suspicious activity before it penetrates the system.

Security isn’t a one-time thing. Without a doubt retailers have to keep updating POS software, operating systems, and anti-malware with the latest security patches. Same goes for e-commerce platforms. Regular checkups are key to identifying any weak spots hackers might try to exploit. Things have got trickier for customers, too. While phishing emails have been about since the 90s, they’ve definitely gotten sneakier. Hackers can practically copy and paste logos and ask AI to help them write in a specific tone. And the personal information that’s available on social platforms makes social engineering and impersonating others way easier.

Especially with the latest tech available. Deepfakes can make anyone look like someone else, and automation lets hackers launch these phishing campaigns at a much faster rate. We have to be most careful at our weakest moments. Since they capitalize on vulnerability. Let’s say a natural disaster hits. You can bet someone will see a fake relief organization email in their inbox. The good news is we can put defenses in place. Educating customers about these tactics will make them smarter and less likely to fall victim. We must build strong defenses for our systems, our employees, and our customers.”

Tod McDonald, CPA, CIRA, is the co-founder of Valid8 Financial

“Empowering Fraud Investigators with AI-Driven Insights

It’s estimated that businesses catch only one-third of all corporate fraud instances. The primary challenge to identifying fraud is a basic one: a lack of visibility. With online finance tools compounding an overwhelming volume of transactions, organizations cannot possibly monitor every transaction. As a result, criminals can hide their illegal activities under layers of financial complexity. AI helps bad actors further obfuscate their schemes. Technology can design elaborate routes to funnel money, automate transfers, and manipulate or forge invoices and documents. Automated bots have significantly increased the ease and efficiency with which malicious actors can execute account takeover attacks, compromising the safety of corporate bank accounts. The pace of AI-powered fraud far outstrips the speed of conventional investigative methods. Organizations must empower their teams to accelerate time to insight for effective fraud detection.

Leveraging AI for fraud detection

Conducting an audit isn’t likely to catch fraud. Audits are designed to provide assurance of the accuracy of financial statements. They might reveal red flags but cannot prove fraud. An additional forensic investigation is required. Resource constraints and manual workflows limit the efficiency and effectiveness of forensic accountants and financial investigators. Traditionally, professionals must gather, reconcile and categorize financial data themselves. This painstaking and time-consuming process only allows teams to analyze a sample of transactions, potentially leaving out critical evidence. Criminals can easily take advantage of this system. AI helps investigators overcome the hurdles of gathering relevant data from a deluge of documents. Verified financial intelligence (VFI) tools automatically ingest, extract and clean data from thousands of financial records in hours rather than weeks. The technology verifies, reconciles and sorts transactions across accounts, flagging any discrepancies or missing information. The automation allows investigative teams to process the entire financial history and spend their time on high-value tasks like following up on discrepancies, interpreting results and developing a narrative.

Manual processes limit investigations to only the most obvious and straightforward fraud cases. AI reduces the evidence-gathering timeline, provides more comprehensive data and surfaces less conspicuous issues, giving teams the capacity to investigate more complex scams and a higher volume of cases. Additionally, VFI platforms generate courtroom-ready evidence for effective prosecutions to convict criminals and deter future scam attempts. Reliance on manual fraud investigation methods is causing teams to fall behind scammers’ advancing tactics. AI solutions give organizations the speed and visibility necessary to protect their assets.”

John Grancarich, Chief Strategy Officer, Fortra

“Role of AI in Cybersecurity

- AI is an incredibly exciting area of research, but one that at the current time seems to often raise more questions than it provides answers. This is understandable – every wave of technological innovation is accompanied by the question ‘How should we be utilizing this?’. The answer to this question should actually be another question: what problems are we trying to solve? By understanding the core problems first, we can better find a productive initial use for AI in cybersecurity.

- One very real problem in cybersecurity today is all the manual work that security operations teams are faced with on a daily basis. The more time that’s spent on high-volume, repetitive tasks, the less time that can be invested in research, learning, design & improvement. Couple this with routine challenges in finding talent for the open roles available, and we have both a security & productivity problem on our hands. It makes sense to incorporate AI using a ground-up approach, and perhaps consider a simpler concept to make it more tangible: automation. The good news is that we know how to introduce automation into various technology fields – start with a small number of routine, repetitive, high-volume tasks first. We can stack rank our tasks in cybersecurity not on a scale of most time consuming to least time consuming, but most complex to least complex. By starting with the least complex tasks first, we can not only deliver tangible value to our respective organizations but also develop new skills with AI. Then move up the complexity ladder over time. It’s tempting to want to go big with AI from the start, but a more pragmatic, stepwise approach has a higher probability of success and should also help develop some compelling new skills within the security team.

How can I secure my digital assets from cyber threats?

- There are some relatively simple steps one can pursue to help them better secure their personal or professional digital assets. It helps to understand a bit about how certain attacks work to improve your defensive techniques. Take SIM swapping for instance – also referred to as SIM jacking. SIM refers to Subscriber Identity Module and it’s what stores your mobile identity on your phone. When you go to upgrade your phone, your provider will simply port your mobile identity from one device to another. It’s typically a seamless experience. The problem occurs when an attacker – likely using a combination of other reconnaissance techniques like phishing – has determined enough characteristics of your identity to impersonate you to your provider & take ownership of your mobile identity by swapping it into a device they control and using text messaging to capture authentication codes that were meant for you. Assuming you have multi-factor authentication set up, when you try to log in to your investment account for example an SMS text will be generated that has now been hijacked by an attacker who can impersonate you and unfortunately often have their way with your digital assets. The way to address this is to shift to using the apps on your mobile device to authenticate instead of SMS text – for example, Google Authenticator or Duo Security, or in-app verification that financial services companies are starting to adopt. When a company you are doing digital business with online suggests using a different approach to authentication because of this danger, heed their warning – there is a very valid reason they are reaching out.”
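Authenticator apps such as Google Authenticator typically generate their codes with the time-based one-time password (TOTP) algorithm from RFC 6238. The code is derived on the device from a shared secret and the current time, so there is no SMS message for an attacker to intercept after a SIM swap. The sketch below shows that derivation in Python. The function name `totp` and the raw-bytes secret are illustrative assumptions (real apps usually receive the secret base32-encoded, for example through a QR code), and this is not a substitute for a vetted library.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def totp(secret: bytes, at_time: Optional[float] = None,
         step: int = 30, digits: int = 6) -> str:
    """Derive a time-based one-time password (RFC 6238, HMAC-SHA1 variant)."""
    if at_time is None:
        at_time = time.time()
    # The moving factor is the number of `step`-second intervals since the epoch.
    counter = int(at_time // step)
    digest = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation (RFC 4226): the low nibble of the last byte picks an offset.
    offset = digest[-1] & 0x0F
    value = struct.unpack(">I", digest[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


# Hypothetical shared secret, for illustration only.
current_code = totp(b"illustrative-shared-secret")
```

The service runs the same computation with its copy of the secret and compares the result, usually tolerating a small amount of clock drift between the two sides.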

Nick Ascoli, Senior Product Strategist at Flare

“Cybersecurity in the Age of AI: Offense and Defense Imbalance

Language models are having a massive impact on the threat landscape. The ability for companies and individuals to stay secure online is being outpaced by increasingly complex and advanced technology. The current trend is for companies to rush headlong into implementing “AI” across every workflow, with some only considering after the fact that AI is a new vector to leak potentially highly sensitive data through. At the same time cybercrime continues to increase in sophistication with criminals increasingly adopting AI tools themselves. The major buzz around ChatGPT last year included threat actor conversations. The screenshot below is from a dark web forum and references using GPT to write a phishing email.

Several threat actors have been “fine-tuning” open-weight language models to facilitate cybercrime. For example, last year “Dark Bard” was advertised as a language model that could help write malware, generate phishing emails, and assist threat actors in social engineering attacks. Historically, new technology often disproportionately benefits either offense or defense. For example the advent of massive stone walls benefited defense, while gunpowder and cannons helped armies on offensives. These precedents raise a critical question: will AI such as language models, voice synthesis programs, and eventually video positively or negatively impact security? Unfortunately, many of the initial indicators don’t look good.

AI language models stand out as having numerous opportunities to help cyber defenders. First and most importantly, language models can help security teams sift through huge amounts of log and threat intelligence data to identify critical events that analysts may miss. Secondly language models open up numerous opportunities to create adaptive training, simulated phishing, and even creating automated rules for SIEM/SOAR systems. One huge opportunity for the use of language models is to aggregate and analyze threat intelligence data.

Unfortunately the pernicious uses of AI could far outweigh the beneficial ones. Threat actors recently used AI voice cloning to steal more than $30 million from a financial institution in east Asia through a complex social engineering attack. But more troubling even than that are the possibilities that LLMs open up for threat actors to create mass-spear phishing at scale.

Contrary to popular belief most hacking isn’t incredibly technical users exploiting 0-day vulnerabilities. Instead social engineering attacks like phishing remain a primary driver of the vast majority of cyberattacks. Traditionally these attacks are not personalized in most cases, but LLMs look set to change this. Feeding basic data about individuals, easily found on social media sites like LinkedIn and Facebook to language models tuned for social engineering will allow threat actors to drastically scale up targeted attacks.

Another significant threat is expanded use of AI voice cloning technology which will enable fraud and scams. Threat actors already routinely target both individuals and businesses to steal gift cards, cryptocurrency, and money directly out of accounts. AI voice technology could enable them to carry out far more sophisticated schemes. Finally, AI video will present another significant avenue for would-be social engineers in the near-term future. Video will open up the opportunity to conduct video phishing against organizations and bring the threat of impersonation to the next level.

Social engineering represents the majority of successful attacks, and AI could serve as rocket fuel for social engineering campaigns, particularly as more open-weight AI models make it into cybercrime communities. We recommend utilizing a threat intelligence platform to rapidly identify emerging AI threats while also engaging in robust phishing training for employees.”

“Generative AI replaces manual labor. Just like it can write the first draft of an essay or code, so can it also analyze billions of data points, detect potential threats, and alert stakeholders – effectively mitigating consequences and automating the prevention of malicious attacks. Large Language Models enhance AI security through threat detection, vulnerability assessment, penetration testing, automated response and mitigation. In addition to detection and proactive protection, Generative AI also allows us to test AI system robustness and vulnerabilities by creating realistic simulations of attacks and imitating the behavior of real-world adversaries. Stakeholders are constantly looking for weak spots in digital assets, racing to find them before attackers. DeepKeep adds a layer of automation to this endless race with Generative AI, giving stakeholders a head start. We also use LLM to describe and scrutinize digital assets at a pixel and semantic level of detail, providing active protection way beyond a human’s ability and traditional cybersecurity methods. It leads us to understanding intent, which is a huge game changer.

But while AI improves security and plays a crucial role across all industries, it is a double edged sword. Generative AI poses several types of threats and weaknesses, making it vulnerable to adversarial attacks, cyber attacks, privacy theft, and risks like Denial of Service, Jailbreaking and Backdoor. Generative AI also isn’t trustworthy due to its tendency to hallucinate and perpetuate biases. GenAI is at its best when securing GenAI, due to its ability to comprehend the boundless connections and intricate logic of AI and LLM. We at DeepKeep believe in “fighting fire with fire,” by using AI-native security to secure AI.”

By Sashank Purighalla, Founder and CEO of BOS Framework.

“Are your digital assets safe online?

In 2024, a significant amount of people’s assets and data exist in virtual form, from photos, videos, and documents to cryptocurrency, NFTs, and metadata. Protecting these digital assets has never been so important. And with cybercriminals becoming more advanced, our digital assets face more threats than ever before, from hackers and malicious actors to viruses and ransomware. Yet, the answer here is quite simple: They’re as safe as you make them. And although it seems that 91% of people understand that reusing passwords is a security risk, more than three in five people admit to reusing passwords, the most popular being ‘123456.’

Potential impact of cybercrime on digital assets

Cybercrime can have a devastating impact in a variety of different ways, particularly regarding financial loss and reputational damage. Financial loss is usually viewed as the most common and hardest-felt impact of cybercrime. Research has shown that “publicly traded companies suffered an average drop of 7.5% in their stock values and a mean market cap loss of $5.4 billion per company.” For example, cybercriminals can steal credit card information, create fake identities in order to access accounts, or use phishing scams to trick unsuspecting users into revealing their private credentials. The leaking of sensitive information can cause significant reputational damage and is another huge potential consequence of cybercrime. Customers trust brands less—even brands like Apple and Sony—when informed of privacy breaches. And with it costing roughly five times more to acquire customers than keep them, imagine the harm this could do for a fledgling startup.

Key strategies for protecting digital assets

Protecting digital assets doesn’t need to be rocket science, and there are a multitude of ways that people and businesses can protect their data that don’t need to cost the Earth. Some traditional and affordable security measures include:

- Multi-factor authentication (MFA): Using a strong password should be the first port of call, so an extra step is to implement MFA whenever possible. Microsoft reports that MFA can block over 99.9% of account compromise attacks.

- Software updates: Continually check for software and operating system updates as these include the latest security patches. Software that is left outdated contains vulnerabilities that hackers can find and exploit.

- Access controls: Ensure that only authorized users can access precious digital assets. These access controls should be frequently checked and updated.

However, 61% of enterprises say they cannot detect breach attempts without using AI technologies. Therefore, as hackers and cybercriminals become more tech-savvy, so must cyber security solutions. Luckily, there is already a variety of AI-powered security solutions available on the market for individuals and businesses to leverage, including:

- Anomaly detection: AI algorithms can be leveraged to inspect user behavior and recognize patterns. This allows them to identify subtle anomalies that might indicate a cyberattack is imminent or potentially underway. This automated detection allows for early intervention, hopefully preventing significant damage or data loss. For instance, a common signal that AI models monitor is login attempts, with the intention of flagging suspicious activity such as logins from unusual locations or at peculiar times.

- Threat prediction and prevention: AI can scan and interpret security data to identify threats and predict cyberattacks in real time. This proactive security strategy allows both individuals and businesses to strengthen their cybersecurity defenses and potentially identify and block access to attackers.

The key takeaway? While online assets are inherently vulnerable, a proactive and layered security approach—with some help from AI—can create a formidable digital vault, significantly improving the odds of keeping your data safe.”
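The login-monitoring idea mentioned above can be sketched in a few lines of Python. This is a deliberately simple, rule-based baseline standing in for a trained model: it builds a per-user profile of past login countries and hours and flags attempts that fall outside it. The `Login` record, the `flag_login` function, and the sample history are all hypothetical.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Login:
    user: str
    country: str  # country code of the source IP (assumed already resolved)
    hour: int     # hour of day, 0-23


def flag_login(attempt, history, min_seen=1):
    """Return the reasons (possibly none) why `attempt` looks unusual for its user."""
    past = [h for h in history if h.user == attempt.user]
    countries = Counter(h.country for h in past)
    hours = Counter(h.hour for h in past)

    reasons = []
    if countries[attempt.country] < min_seen:
        reasons.append("unusual location")
    # An hour counts as familiar if the user has logged in within one hour of it.
    nearby = sum(hours[(attempt.hour + delta) % 24] for delta in (-1, 0, 1))
    if nearby < min_seen:
        reasons.append("unusual time")
    return reasons


# Hypothetical history: one user who normally logs in from the US during the day.
history = [Login("alice", "US", h) for h in (8, 9, 9, 10, 17)]
```

A production system would learn richer profiles (device, network, typing cadence) and weigh them statistically, but the shape is the same: model normal behavior, then score each new event against it.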

Jon Clay, VP of Threat Intelligence at Trend Micro

“Monitoring underground criminal forums to understand how adversaries are discussing and using AI and generative AI technologies – as they are likely to become integral to their cybercrime strategies – is key to developing effective proactive defenses. Criminals and bad actors are exploring ways to ‘jailbreak’ existing generative AI tools, such as those developed by OpenAI, Grok, Bard, and Gemini. By circumventing the built-in security controls—which, for instance, prevent the creation of malware—adversaries can exploit these tools for malicious purposes. Additionally, AI is being used to refine socially engineered attacks and tactics, such as phishing and business email compromise. These advanced techniques enable criminals to craft highly realistic emails and text messages, increasing their chances of deceiving targets. The rapid advancement of deepfake technologies further compounds this threat, potentially allowing criminals to employ them in sophisticated scams.

Despite the AI-fueled increase in cybercrime, the new technology can be leveraged for good if organizations are prepared to adapt. A combination of zero-trust approaches and the use of AI is required to strengthen security. On the defensive side, generative AI can significantly boost the capabilities of security operations centers (SOCs). It enhances visibility into attack vectors and empowers SOC analysts to manage threats more efficiently and effectively. Generative AI can also help enhance proactive cyber defenses by enabling dynamic, customized, industry-specific breach and attack simulations. While formalized ‘red teaming’ has typically been available only to large companies with deep pockets, generative AI has the potential to democratize the practice by allowing companies of any size to run dynamic, adaptable event playbooks drawing from a wide range of techniques.

In response to these emerging threats, businesses must enhance their cybersecurity measures. Implementing robust security controls to detect AI-based attacks is crucial. Defending against these escalating threats requires an “all hands on deck” response that combines evolved security practices and tools with strong enterprise security cultures and baked-in security at the application development stage. Moreover, organizations should invest in tools to manage and monitor their own AI usage effectively. While cybersecurity awareness and AI literacy training remain essential, businesses need to back them up with defensive technologies.”

Potential Impact of Cybercrime on Digital Assets

The WannaCry attack in 2017 started the current ransomware trend. This well-publicized, large-scale attack proved that ransomware attacks were feasible and could even be profitable. A plethora of ransomware variants has been created and used in attacks since then. Ransomware has been on the rise recently, and the COVID-19 outbreak is one factor. As companies rushed to implement remote work, gaps opened in their cyber defenses, and cybercriminals have used these weaknesses to spread malware, driving an upsurge in ransomware attacks.

Ransomware attacks have been reported by 71% of firms in this age of digital hazards, with an average financial loss of $4.35 million per incident.

Ten percent of the world’s businesses were the targets of attempted ransomware attacks in 2023. This is a significant increase from the previous year, when 7% of firms faced similar threats, and it is the highest rate in recent years.

Cybercriminals may use AI to:

- Swiftly develop brand-new malicious software that can exploit newly discovered security flaws or evade detection.

- Come up with fresh, innovative, targeted phishing attempts. Reputation engines may struggle to keep up with the sheer volume of scenarios caused by such behaviors.

- Speed up data analysis and collection while assisting in the discovery of additional attack vectors.

- Make convincing deepfakes (audio or video) for social engineering assaults.

- Launch intrusion-style assaults or create brand-new hacking instruments.

Key Strategies For Protecting Digital Assets

How can I secure my digital assets from cyber threats?

Does this question often run through your mind?

This is a good read then.

- Cyber Awareness Training and Education: Ransomware frequently spreads through phishing emails, so it is critical to educate users on how to recognize and prevent ransomware attacks. User education is one of the most significant protections a company can deploy, since many current cyber-attacks begin with a targeted email that contains no malware at all, only a socially engineered message that induces the user to click on a harmful link.

- Regular data backups: Ransomware encrypts data so that paying a ransom appears to be the only way to decrypt it. A company with automated, encrypted backups can recover quickly from an attack without paying a ransom and with little data loss. Keeping regular backups as a routine operation also protects against data loss from corruption or disk hardware malfunction.

- Patching: Keeping your systems patched is essential in the fight against ransomware. Attackers often study newly released updates to learn which vulnerabilities they fix, then attack machines that have not yet been patched. Firms must make sure that all systems are patched up to date, because this decreases the number of vulnerabilities within the company that an attacker could exploit.

- User Authentication: Ransomware perpetrators frequently use stolen user credentials to access services such as Remote Desktop Protocol (RDP). Robust user authentication makes it more difficult for an attacker to use a guessed or stolen password.

- Deploy Anti-Ransomware Solution: Because it encrypts every user’s files, ransomware leaves a distinct digital footprint whenever it infects a computer. Those fingerprints are used to build anti-ransomware systems. Typical features of an effective anti-ransomware program

Domestic Trust

Domestic asset protection trusts are established in the United States. This trust structure reduces the potential for legal trouble. Despite the restricted legal exposure, assets held in a domestic trust can nevertheless be subject to the jurisdiction of U.S. courts in some cases. Asset protection trusts are codified in the statutes of South Dakota, Wyoming, and Nevada; however, domestic trust regulations differ from state to state.

Offshore Trust

Cryptocurrencies, digital assets, and traditional assets are all best protected under an offshore trust. An offshore trust is a trust formed under the law of an offshore jurisdiction. Its primary perk is that it can evade court orders and judgments from the United States. The assets held by an offshore trust are still vulnerable to litigation, but such litigation can be expensive and time-consuming because it must be conducted in the jurisdiction where the trust is located.

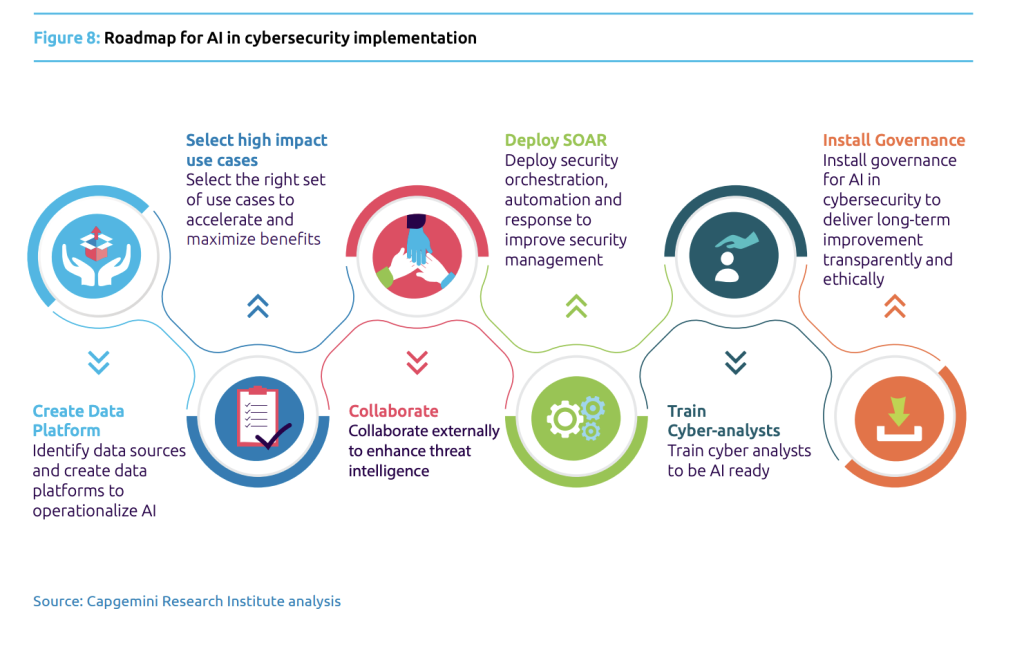

Current Trends: Role Of AI In Cybersecurity

The AI in cybersecurity market was valued at $20.19 billion in 2023 and is projected to reach $141.64 billion by 2032.

To begin, how would you define AI?

You might say it’s like a very intelligent computer program that can figure things out for itself. It’s like if you had a digital brain that could mimic human cognition and behavior, but at a far faster rate and without fatigue.

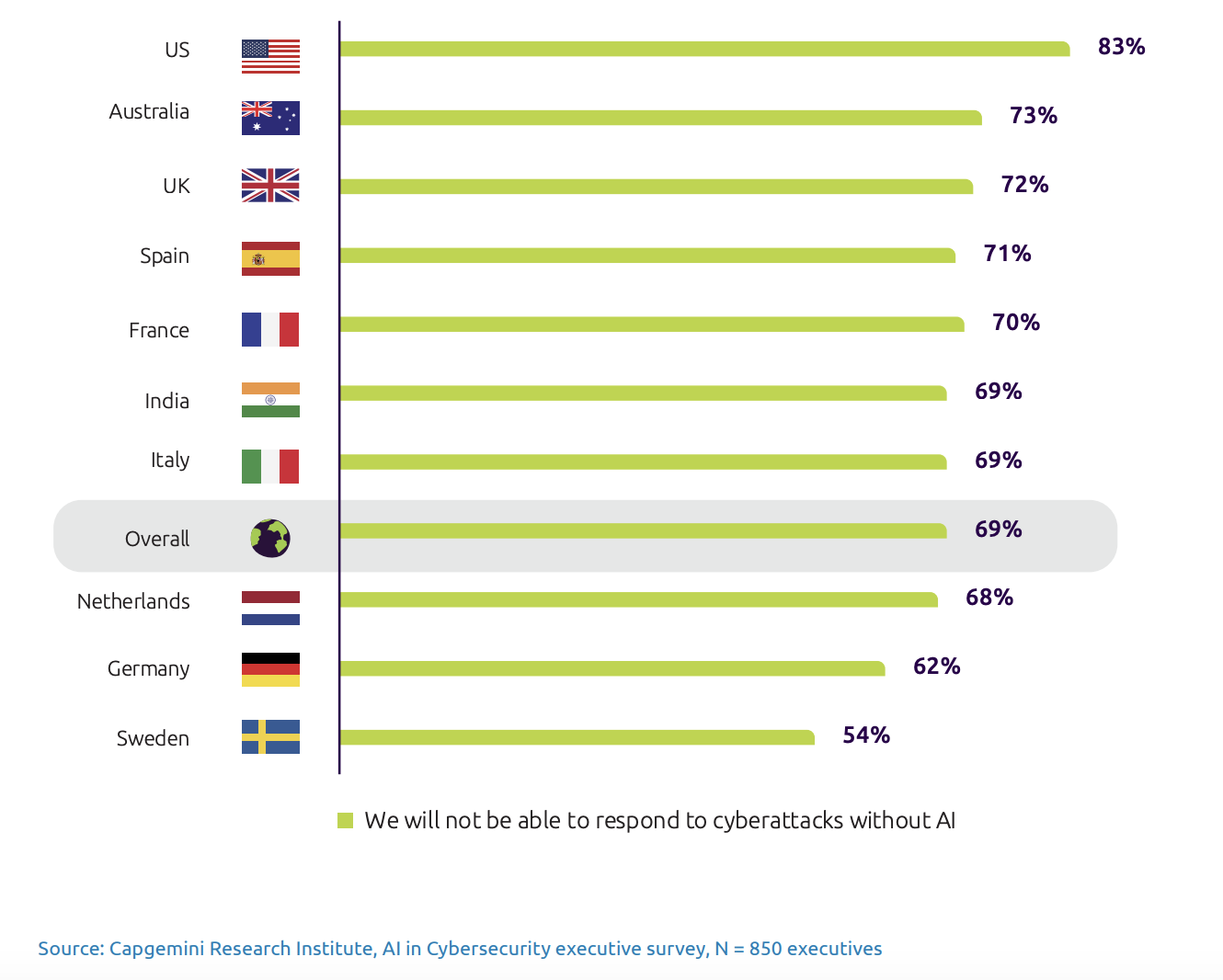

61% of enterprises say they cannot detect breach attempts without the use of AI technologies.

What follows is a discussion of AI’s applications in the field of cybersecurity. Using AI to prevent cyber attacks before they happen is currently a hot trend. AI is capable of real-time analysis of massive amounts of data and can detect odd activity that could indicate an attack. It is like having a computerized watchdog that can detect danger before it approaches. Artificial intelligence also helps by bolstering our digital defenses. To defend effectively against future threats, it can continuously learn from previous attacks and adjust its security systems accordingly. It’s as if you had an exceptionally bright guard who becomes better at the job every day.

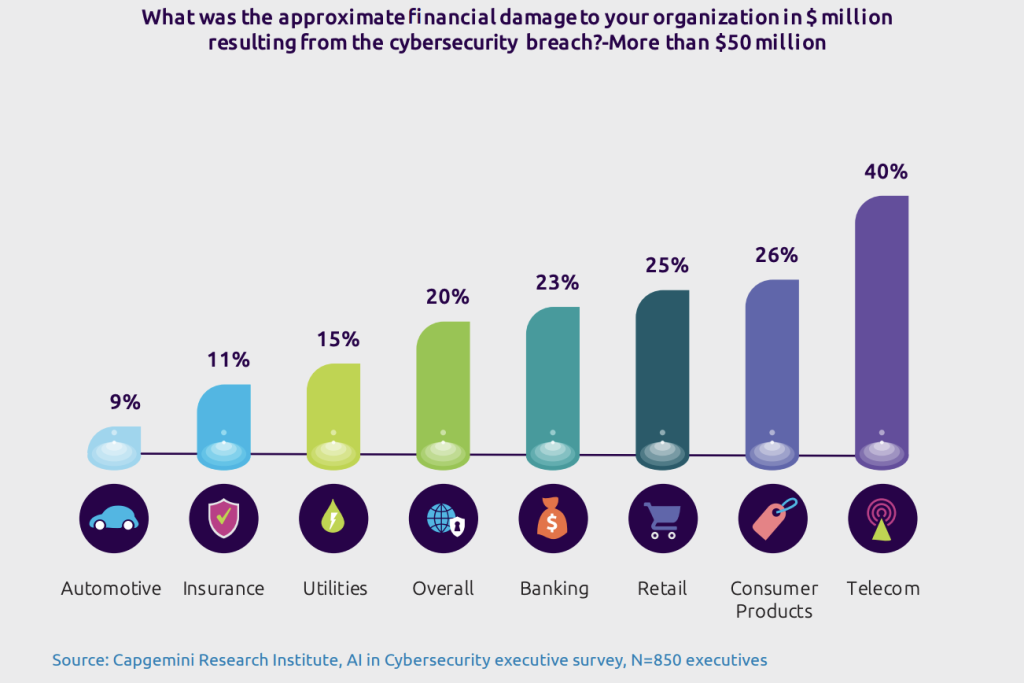

Below is a figure taken from Capgemini describing how countries rated their efficiency in handling cybercrime with the help of AI.

In cybersecurity, AI is used to identify and counteract cyber threats as they happen. Artificial intelligence systems can sift through mountains of data in search of patterns that may indicate a cyber threat.

Locating and repairing digital system security flaws

However, AI isn’t limited to thwarting criminals. As a bonus, it’s assisting us in locating and repairing digital system security flaws. To find vulnerabilities that hackers could exploit, AI can sift through massive volumes of code. Imagine having a digital handyman at our disposal, always ready to fix any vulnerabilities in our digital defenses. It is not only governments and large corporations that benefit from AI; ordinary people like you and me are protected by it too. Antivirus software that uses AI to detect and prevent malicious software is one example. It’s as if your laptop or smartphone had its very own personal bodyguard.

Adversarial attacks

However, AI is far from flawless, and certain obstacles must be considered. One major concern is “adversarial attacks,” in which cybercriminals use devious tactics to manipulate AI systems into making errors. It is like a thief trying to pass himself off as a friend to a guard dog. A further obstacle is guaranteeing the objectivity and fairness of AI systems. Artificial intelligence learns from its input data, which means that biased data can lead the AI to make unfair decisions. The situation is analogous to a judge who favors one side over the other just because they were childhood friends.

Malware Detection

Cybersecurity faces a major threat from malware. To detect known malware variants, traditional antivirus software uses signature-based detection. AI-powered solutions instead employ machine learning algorithms to identify and counteract both common and uncommon forms of malware. These algorithms can sift through mountains of data in search of outliers and trends that would be impossible for humans to spot. By studying behavior, AI can detect previously unseen malware strains that conventional antivirus programs could overlook. The algorithms can be trained on labeled data, in which each sample carries a tag such as whether a file is malicious or benign. They can also be trained on unlabeled data, which carries no tags, to detect patterns and outliers.
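To make the contrast concrete, here is a small Python sketch that puts a signature check next to a classifier trained on labeled behavior data. The classifier is a nearest-centroid model, chosen only because it fits in a few lines; it is a stand-in, not what commercial products use. The feature names (files modified per minute, outbound connections, registry edits) and all sample values are made up for illustration.

```python
import hashlib
import math

# Toy signature database: hashes of files already known to be malicious.
KNOWN_BAD_HASHES = {hashlib.sha256(b"known-malware-sample").hexdigest()}


def matches_signature(content: bytes) -> bool:
    """Signature-based check: only exact, previously catalogued files match."""
    return hashlib.sha256(content).hexdigest() in KNOWN_BAD_HASHES


def train(labeled_samples):
    """labeled_samples: list of (feature_vector, label). Returns {label: centroid}."""
    by_label = {}
    for features, label in labeled_samples:
        by_label.setdefault(label, []).append(features)
    return {
        label: [sum(column) / len(vectors) for column in zip(*vectors)]
        for label, vectors in by_label.items()
    }


def classify(model, features):
    """Assign the label whose centroid is closest to the observed behavior."""
    return min(model, key=lambda label: math.dist(model[label], features))


# Hypothetical features: (files modified/min, outbound connections, registry edits)
training_data = [
    ((2, 1, 0), "benign"), ((3, 2, 1), "benign"), ((1, 1, 0), "benign"),
    ((120, 15, 30), "malicious"), ((90, 20, 25), "malicious"),
]
model = train(training_data)
```

A slightly altered copy of a known sample no longer matches its hash, yet its behavior still lands near the malicious centroid, which is the gap behavior-based detection is meant to close.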

Phishing Detection

Phishing is an increasingly common kind of cyberattack that aims to trick both individuals and businesses. Traditional phishing detection methods rely mainly on blacklisting and rules-based filtering. The problem with these methods is that they can only stop known attacks; they cannot stop emerging threats. AI-based solutions can also examine how users behave while interacting with emails to detect phishing attempts. For example, they can detect suspicious behavior, such as a user entering personal information in response to a phishing email or clicking on a suspicious link, and notify security teams.
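The limitation of blacklisting can be seen in a short Python sketch. A blocklist lookup only catches hosts someone has already reported, while scoring a link on its features can flag one that has never been seen before. The weights below are hand-picked assumptions standing in for what a trained model would learn, and the blocklist entry, word list, and function names are hypothetical.

```python
import ipaddress
from urllib.parse import urlparse

BLOCKLIST = {"known-phish.example"}  # hypothetical list of reported hosts
LURE_WORDS = ("login", "verify", "update", "secure", "account")


def on_blocklist(url):
    """Traditional check: is the host already on the list of known-bad hosts?"""
    return (urlparse(url).hostname or "") in BLOCKLIST


def is_ip_address(host):
    try:
        ipaddress.ip_address(host)
        return True
    except ValueError:
        return False


def phishing_score(url):
    """Add up simple warning signs; a higher score means more suspicious."""
    parsed = urlparse(url)
    host = parsed.hostname or ""
    score = 0
    if parsed.scheme != "https":
        score += 1                      # unencrypted link
    if is_ip_address(host):
        score += 2                      # raw IP address instead of a domain name
    elif host.count(".") >= 3:
        score += 1                      # long chain of subdomains
    if any(word in url.lower() for word in LURE_WORDS):
        score += 1                      # wording typical of credential lures
    return score


new_link = "http://paypal.com.secure-login.account-verify.example/update"
```

Here `new_link` is absent from the blocklist but still collects several warning signs. Real systems combine many more signals, including message content and sender history, and learn the weights from data.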

Security Log Analysis

Traditional security log analysis relies on rule-based systems, whose capacity to detect new and developing risks is severely constrained. AI-based security log analysis applies machine learning algorithms to examine massive amounts of security log data in real time. Even without a known threat profile, AI systems can identify trends and anomalies that could mean a security compromise has occurred. This allows businesses to lessen the likelihood of security problems like data breaches by swiftly detecting and responding to security incidents.
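Detecting anomalies without a known threat profile can be illustrated with a basic statistical outlier test. The Python sketch below assumes the logs have already been reduced to a count of failed logins per minute and flags any minute that sits far from the median, using the median absolute deviation (MAD). The function name, the threshold of 3.5, and the sample counts are illustrative assumptions, and production systems use considerably richer models.

```python
import statistics


def robust_outliers(counts, threshold=3.5):
    """Return indexes of values far from the median, measured in MAD units."""
    median = statistics.median(counts)
    mad = statistics.median([abs(c - median) for c in counts])
    if mad == 0:
        # All typical values are identical; anything different stands out.
        return [i for i, c in enumerate(counts) if c != median]
    # 0.6745 rescales the MAD so scores are comparable to standard deviations.
    return [
        i for i, c in enumerate(counts)
        if 0.6745 * abs(c - median) / mad > threshold
    ]


# Hypothetical failed-login counts for nine consecutive minutes.
failed_logins_per_minute = [4, 5, 3, 6, 4, 5, 97, 4, 5]
suspicious_minutes = robust_outliers(failed_logins_per_minute)
```

No rule about "more than N failures" was written in advance; the burst in the seventh minute stands out only because it differs from what is normal for this particular log.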

Network Security

AI algorithms can be trained to detect unauthorized devices on a network, suspicious activities, and strange traffic patterns. By monitoring network devices, AI can also enhance network security. For example, when the AI system identifies potentially dangerous network activity, such as the presence of an unapproved device, it can alert security staff. To identify possible dangers, AI can also track the actions of network devices, looking for things like strange patterns of activity.
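The simplest form of unauthorized-device detection needs no learning at all: compare the devices seen on the network against an inventory of approved ones. The Python sketch below shows that baseline, on top of which a learned model of each device's normal traffic could be layered. The MAC addresses and function names are hypothetical.

```python
def normalize_mac(mac):
    """Put MAC addresses in one form so 'AA-BB-...' and 'aa:bb:...' compare equal."""
    return mac.strip().lower().replace("-", ":")


def find_unauthorized(observed, approved):
    """Return observed device addresses that are not in the approved inventory."""
    approved_set = {normalize_mac(m) for m in approved}
    return sorted({normalize_mac(m) for m in observed} - approved_set)


# Hypothetical inventory and a hypothetical scan of the network.
approved_devices = {"aa:bb:cc:00:00:01", "aa:bb:cc:00:00:02"}
seen_on_network = ["AA-BB-CC-00-00-01", "aa:bb:cc:00:00:02", "de:ad:be:ef:00:01"]
alerts = find_unauthorized(seen_on_network, approved_devices)
```

MAC addresses can be spoofed, which is one reason monitoring how a device behaves matters in addition to checking what it claims to be.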

Endpoint Security

Computers, mobile phones, and other endpoints are common targets for hackers. The signature-based detection method used by most antivirus programs today can only identify known types of malware. By examining what programs do on an endpoint, AI can detect unknown kinds of malware. Endpoint security solutions powered by AI examine endpoint activity and identify possible dangers using machine learning techniques. For instance, such a solution can detect malicious software and isolate it in quarantine. It can also monitor endpoint activity and flag any suspicious behavior as a potential security risk.
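One behavioral signal an endpoint monitor can use is the randomness of the data a process writes. Encrypted output looks close to random, so a process that suddenly writes many high-entropy files may be ransomware, whether or not its signature is known. The Python sketch below assumes file writes have already been captured as (process name, bytes written) pairs. The threshold values and function names are illustrative assumptions, and because compressed files are also high-entropy, this would be one signal among several.

```python
import math
import os
from collections import Counter, defaultdict


def shannon_entropy(data):
    """Entropy in bits per byte: 0.0 for constant data, 8.0 for uniformly spread bytes."""
    if not data:
        return 0.0
    total = len(data)
    return sum((n / total) * math.log2(total / n) for n in Counter(data).values())


def flag_mass_encryption(writes, entropy_threshold=7.5, min_files=3):
    """Return processes that wrote at least `min_files` high-entropy payloads."""
    high_entropy_writes = defaultdict(int)
    for process, payload in writes:
        if shannon_entropy(payload) > entropy_threshold:
            high_entropy_writes[process] += 1
    return sorted(p for p, n in high_entropy_writes.items() if n >= min_files)


# Hypothetical activity: an editor saving text, and an unknown process
# writing random-looking data (os.urandom stands in for encrypted output).
observed_writes = [("editor", b"meeting notes " * 300) for _ in range(5)]
observed_writes += [("unknown.exe", os.urandom(4096)) for _ in range(3)]
flagged = flag_mass_encryption(observed_writes)
```

A flagged process would then be a candidate for the quarantine step described above.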

Finally, AI is helping us identify and avoid cyber assaults, fortifying our digital defenses, and safeguarding regular people from dangers that exist on the internet. The future of cybersecurity powered by AI is bright, even though there remain obstacles to overcome.

Regardless of these obstacles, AI’s impact on cybersecurity will continue to expand. The more expansive and intricate our digital world becomes, the more assistance we will require to ensure its security. And now we have an effective tool in the battle against cyber dangers thanks to AI.

Advantages of AI in Cybersecurity

- Advanced Threat Detection and Prevention

- Real-time Monitoring and Response

- Enhanced Incident Response

- Adaptive Learning

- Reduction in False Positives

- Predictive Analysis

- Identifying Insider Threats

- Scalability and Efficiency

- Vulnerability Management

- Adaptive Access Control

- Insider Threat Detection

Disadvantages of AI in Cybersecurity

- Bias and Errors

- Ethical Implications in AI Decision-Making

- Challenges in Algorithmic Accuracy

- Sophisticated Attacks on AI

- Threats Targeting AI Systems

- Security Risks in AI Models

- Dependency and Overreliance

- Potential Human Skill Erosion

- Interpretation of Complex Threats

- Skill Gap

Top 15 AI Tools for Cybersecurity

- Microsoft Security Copilot

- IBM’s Guardium

- Zscaler Data Protection

- McAfee MVISION

- Darktrace

- Kaspersky’s Endpoint Security

- Tessian’s Complete Cloud Email Security

- Cylance

- Cybereason

- Tenable’s Exposure AI

- SentinelOne’s Singularity

- Malwarebytes

- Splunk’s Enterprise Security

- Forcepoint’s Behavioral Analytics

- OpenAI’s ChatGPT

Legal and Regulatory Considerations for Digital Assets

Legal and regulatory considerations for digital assets are important because they help keep things fair and safe in the world of online currencies and investments. Digital assets are things like cryptocurrencies (such as Bitcoin or Ethereum), digital tokens, and other virtual items that have value.

One big concern is ensuring people using digital assets are protected from fraud and scams. Governments and organizations create rules to prevent people from being tricked or cheated when they buy, sell, or trade digital assets. These rules are there to keep the market safe and fair for everyone involved.

Another important aspect is taxation. Just like with regular money, governments often have rules about how digital assets are taxed. This means that when people make money from buying or selling digital assets, they might need to pay taxes on those profits. There’s also the issue of security. Since digital assets are stored online, there’s always a risk of hackers trying to steal them. That’s why many countries have regulations in place to make sure that companies handling digital assets have strong security measures to protect their users’ money.

Moreover, there are rules about who can trade digital assets and how they can do it. For example, in some places, you might need to prove your identity before you can buy or sell digital assets. This is to prevent things like money laundering and illegal activities. Furthermore, governments and financial institutions are still figuring out how to regulate digital assets because they’re relatively new. This means that the rules and regulations can change quite a bit as they learn more about how digital assets work and how they affect the economy.

Use Cases

- Google scans over 300 billion Gmail attachments every week to ensure safety.

- AWS GuardDuty is a managed threat detection service that analyzes multiple data sources, such as DNS logs, AWS CloudTrail logs, and VPC Flow Logs, for signs of suspicious activity that might point to a security breach. For example, it may detect abnormally high volumes of API calls, unusual patterns of network traffic, or attempts to gain unauthorized access to private information.

- Wells Fargo’s cybersecurity strategy centers on an AI-powered platform for threat identification and response. The platform applies machine-learning techniques to data such as network traffic, email communications, and files. By analyzing this data in real time, it can detect suspicious trends and outliers.

- The Splunk Enterprise Security platform uses machine-learning algorithms to analyze massive volumes of data from network logs, system events, user activity, and other sources. This AI-driven approach lets the platform identify, in real time, trends and anomalies that may indicate vulnerabilities or malicious behavior.

- With the help of AI, systems and networks can be automatically scanned for vulnerabilities, making it easier to identify possible entry points for attackers. By recommending and prioritizing necessary security updates, AI also reduces manual work and vulnerability exposure. For example, the managed security services team at IBM automated 70% of alert closures and cut its threat management timeline in half within one year of adopting these AI capabilities.

- Plaid uses machine learning algorithms to examine a large amount of data, including the customer’s name, address, and Social Security number. Using the AI system to identify and verify bank accounts takes only seconds and leaves less room for error or fraud.

- Transaction analysis is a critical component of PayPal’s cybersecurity approach that makes use of AI. Manually examining each transaction for indications of fraud would be an enormous undertaking given the platform’s daily transaction volume. Here, AI’s processing speed shines as it quickly looks for warning signs in every single transaction.

- Google employs AI to assess threats on mobile endpoints, providing organizations with valuable insights to safeguard the increasing array of personal mobile devices.

- Zimperium and MobileIron have joined forces to facilitate the implementation of mobile anti-malware solutions infused with artificial intelligence. By combining Zimperium’s AI-driven threat detection with MobileIron’s robust compliance and security engine, they tackle issues such as network, device, and application threats effectively.

- Mastercard’s Decision Intelligence uses AI and ML to determine whether a transaction is valid or fraudulent. It illustrates how AI can continuously learn and improve, blocking fraudulent transactions while allowing legitimate ones to proceed uninterrupted.

Statistics: AI in Cybersecurity

- 56% of companies have adopted AI-powered cybersecurity systems.

- Cybercrime steals about 1% of the world’s GDP.

- 69% of senior executives say AI is essential to respond to cybersecurity threats.

- There’s 72% annual growth in AI-powered cybersecurity startups.

- Frequency: There is a victim of cybercrime every 37 seconds or 97 victims per hour.

- Data leakage: In 2022, 2 internet users had their data leaked every second, an improvement from 2021 when 6 users had their data leaked every second.

- Damage: In 2021, global cybercrime caused $6 trillion in damages, or $684.9 million per hour.

- Number of attacks: There are about 2,200 cyber attacks per day, or one every 39 seconds on average.

- Malware: In 2023, 30 million new malware samples were detected.

- Polymorphic malware: In 2019, 93.6% of malware observed was polymorphic, meaning it can constantly change its code to evade detection.

- Reinfection: In 2022, 45% of business PCs and 53% of consumer PCs that got infected once were re-infected within the same year.
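The per-hour and per-interval figures in the list above follow from simple rate conversions. As a quick sanity check, the sketch below recomputes three of them. The function names are illustrative, the inputs are just the numbers quoted in the list, and the damage figure is assumed to be spread evenly over a 365-day year.

```python
SECONDS_PER_HOUR = 60 * 60
SECONDS_PER_DAY = 24 * SECONDS_PER_HOUR
HOURS_PER_YEAR = 365 * 24  # assumes a non-leap year


def victims_per_hour(seconds_between_victims: float) -> float:
    """Convert 'one victim every N seconds' into victims per hour."""
    return SECONDS_PER_HOUR / seconds_between_victims


def damage_per_hour(annual_damage_usd: float) -> float:
    """Spread an annual damage figure evenly over the hours of a year."""
    return annual_damage_usd / HOURS_PER_YEAR


def seconds_between_attacks(attacks_per_day: float) -> float:
    """Convert attacks per day into the average gap between attacks."""
    return SECONDS_PER_DAY / attacks_per_day


if __name__ == "__main__":
    # One victim every 37 seconds is roughly 97 victims per hour.
    print(round(victims_per_hour(37)))
    # $6 trillion per year is roughly 684.9 million USD per hour.
    print(round(damage_per_hour(6e12) / 1e6, 1))
    # 2,200 attacks per day is roughly one every 39 seconds.
    print(round(seconds_between_attacks(2200)))
```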

FAQs

Is AI completely replacing human cybersecurity professionals?

No, AI complements human cybersecurity professionals by automating repetitive tasks, augmenting their capabilities, and enabling them to focus on more strategic aspects of cybersecurity such as threat analysis and policy development.

Can AI be used by cybercriminals?

Yes, cybercriminals can leverage AI to automate and enhance their attacks, such as developing more sophisticated malware or launching targeted phishing campaigns. This underscores the importance of organizations staying ahead of adversaries by continuously evolving their cybersecurity defenses.

Can AI detect previously unknown or zero-day cyber threats?

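Yes. AI-powered systems can help detect and stop zero-day threats, which exploit flaws not yet known to cybersecurity experts. Because machine learning algorithms look for unusual behavior rather than matching known signatures, they can identify threat patterns even without prior knowledge of a specific attack.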

Are there legal protections for digital assets?

Legal protections for digital assets vary by jurisdiction but may include intellectual property laws, contracts, and regulations governing data privacy and cybersecurity.

Are there specific industries or sectors where AI cybersecurity solutions are more beneficial?

Yes. AI cybersecurity solutions are especially beneficial in sectors such as:

- Financial services

- Manufacturing

- Logistics

- Cybersecurity

- E-Commerce

How does AI-powered threat detection work?

AI-powered threat detection works by analyzing large volumes of data to identify patterns indicative of cyber threats. Machine learning algorithms can detect anomalies in network traffic, user behavior, and system activity that may signal potential attacks.
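To make the idea of anomaly detection concrete, here is a minimal sketch that flags values far from a learned baseline using a z-score. It is a deliberately simple statistical stand-in for the much richer models that commercial products use. The data, the function name `find_anomalies`, and the threshold of 3 standard deviations are all assumptions chosen for illustration.

```python
from statistics import mean, stdev


def find_anomalies(baseline, observed, threshold=3.0):
    """Return (index, value, z_score) for observed values far from the baseline.

    baseline: counts from a period assumed to be normal (hypothetical data).
    observed: new counts to compare against that baseline.
    threshold: number of standard deviations treated as anomalous (an assumption).
    """
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        raise ValueError("baseline has no variation; z-scores are undefined")
    anomalies = []
    for i, value in enumerate(observed):
        z = (value - mu) / sigma
        if abs(z) > threshold:
            anomalies.append((i, value, round(z, 1)))
    return anomalies


# Hypothetical hourly API call counts for one account.
baseline_calls = [120, 131, 118, 125, 122, 129, 117, 124]
new_calls = [123, 127, 940, 119]

# Only the burst of 940 calls is far enough from the baseline to be flagged.
print(find_anomalies(baseline_calls, new_calls))
```

In practice the baseline would be relearned continuously and combined with many other signals, but the core step is the same: model what normal looks like, then score how far new activity departs from it.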

What is ransomware?

Ransomware is a type of malware that encrypts a victim’s files, making them inaccessible. The attackers then demand a ransom payment in exchange for the decryption key.

What is identity theft?

Identity theft occurs when someone steals your personal information, such as your name, Social Security number, or credit card number, to commit fraud.

Can cybercriminals access my online accounts without my knowledge?

Yes, cybercriminals can access online accounts through various means such as password theft, phishing attacks, or exploiting security vulnerabilities.

How do cybercriminals use malware to target digital assets?

Cybercriminals use malware to gain unauthorized access to devices or networks, steal sensitive information, encrypt files for ransom, or disrupt operations.

What are the risks of using public Wi-Fi networks for accessing digital assets?

Public Wi-Fi networks are vulnerable to interception, allowing cybercriminals to eavesdrop on communications and steal sensitive information like passwords or financial data.

How do DDoS attacks affect digital asset security?

DDoS attacks overwhelm websites or online services with excessive traffic, causing disruptions in availability and potentially impacting the security of digital assets hosted on those platforms.

What role does encryption play in protecting digital assets?

Encryption scrambles data to make it unreadable without the proper decryption key, providing a layer of protection against unauthorized access or interception of digital assets.
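The toy example below illustrates the principle that ciphertext is unreadable without the key and recoverable with it. It uses a one-time pad (XOR with a random key as long as the message), chosen only because it fits in a few lines of standard-library Python. It is not how production systems encrypt data; real deployments rely on vetted algorithms such as AES through an audited library. The message and the helper name `xor_bytes` are illustrative.

```python
import secrets


def xor_bytes(data: bytes, key: bytes) -> bytes:
    """XOR each data byte with the matching key byte."""
    if len(key) != len(data):
        raise ValueError("a one-time pad key must be as long as the data")
    return bytes(d ^ k for d, k in zip(data, key))


message = b"account: 12345678"                 # hypothetical sensitive data
key = secrets.token_bytes(len(message))        # random key, used once and kept secret

ciphertext = xor_bytes(message, key)           # scrambled bytes
recovered = xor_bytes(ciphertext, key)         # the same operation with the same key decrypts

print(ciphertext.hex())                        # looks like random bytes
print(recovered.decode())                      # the original message again
```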

What types of AI algorithms are commonly used in cybersecurity?

Common machine learning approaches used in cybersecurity include supervised learning, unsupervised learning, and reinforcement learning. Supervised learning is often employed for classification tasks such as malware detection, while unsupervised learning can detect anomalies in data. Reinforcement learning may be used for adaptive security measures.

Are there insurance options available for safeguarding digital assets against cyber threats?

Yes, some insurance companies offer cybersecurity insurance policies that provide financial protection in the event of cyber-related losses or damages to digital assets.

How do I detect if my digital assets have been compromised?

Monitor for unusual activity on accounts, devices, or networks, watch for unauthorized changes or transactions, and use security tools to scan for malware or vulnerabilities.
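One simple way to watch for unauthorized changes is file integrity checking: record a cryptographic hash of each important file, then compare later. The sketch below shows the idea with SHA-256 from Python's standard library. The function names and the example file are hypothetical, and a real tool would also protect the baseline itself from tampering.

```python
import hashlib
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def snapshot(paths):
    """Record a baseline mapping of path -> digest."""
    return {str(p): sha256_of(Path(p)) for p in paths}


def changed_files(baseline):
    """Return paths that are missing or whose digest differs from the baseline."""
    changed = []
    for name, old_digest in baseline.items():
        path = Path(name)
        if not path.exists() or sha256_of(path) != old_digest:
            changed.append(name)
    return changed


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "settings.conf"  # hypothetical file to watch
        target.write_text("admin_email=owner@example.com\n")
        baseline = snapshot([target])
        print(changed_files(baseline))         # empty list: nothing has changed yet
        target.write_text("admin_email=attacker@example.com\n")
        print(changed_files(baseline))         # the modified file is now reported
```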

Can social engineering tactics be used to steal digital assets?

Yes, social engineering tactics like pretexting, phishing, or impersonation can be used to manipulate individuals into revealing sensitive information or granting access to digital assets.

What are the consequences of failing to secure digital assets against cyber threats?

Failing to secure digital assets can lead to financial losses, identity theft, reputational damage, legal liabilities, and disruptions to business operations or personal life.

What data privacy and security considerations should be taken into account when implementing AI in cybersecurity?

Organizations must ensure that sensitive data used to train AI models is securely stored and anonymized to protect privacy. Additionally, AI systems should be designed with built-in security measures to prevent unauthorized access and data breaches.

How does AI help in the analysis of security logs and data?

AI automates the analysis of security logs and data by identifying patterns, anomalies, and suspicious activities that may indicate security incidents. AI-driven analytics platforms can process vast amounts of data in real-time, enabling faster threat detection and response.
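As a small illustration of automated log analysis, the sketch below counts failed logins per source IP and reports addresses that exceed a limit. This is a plain counting rule rather than machine learning; it shows the kind of per-source feature an AI-driven platform might compute and feed into a model. The log format, the limit of 3 failures, and all names are assumptions made for the example.

```python
import re
from collections import Counter

# Assumed log format for illustration; real formats vary by system.
FAILED_LOGIN = re.compile(
    r"Failed login for user (\S+) from (\d{1,3}(?:\.\d{1,3}){3})"
)


def failed_logins_by_ip(lines):
    """Count failed login attempts per source IP address."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group(2)] += 1
    return counts


def suspicious_ips(lines, max_failures=3):
    """Return IPs with more than max_failures failed logins, sorted."""
    counts = failed_logins_by_ip(lines)
    return sorted(ip for ip, n in counts.items() if n > max_failures)


# Hypothetical log lines using documentation-only IP ranges.
sample_log = [
    "2024-03-01T10:00:01 Failed login for user alice from 203.0.113.7",
    "2024-03-01T10:00:03 Failed login for user admin from 203.0.113.7",
    "2024-03-01T10:00:04 Failed login for user root from 203.0.113.7",
    "2024-03-01T10:00:06 Failed login for user bob from 198.51.100.4",
    "2024-03-01T10:00:07 Failed login for user test from 203.0.113.7",
    "2024-03-01T10:00:09 Successful login for user bob from 198.51.100.4",
]

print(suspicious_ips(sample_log))  # ['203.0.113.7']
```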

Are there any regulatory implications associated with using AI in cybersecurity?

Yes. Depending on the jurisdiction and the data involved, organizations may need to comply with regulations such as GDPR, HIPAA, and CCPA when implementing AI in cybersecurity. These regulations impose requirements for data protection, transparency, and accountability that extend to AI-driven systems.

How does AI contribute to the automation of routine security tasks?

AI automates routine security tasks such as threat detection, incident triage, and malware analysis with minimal human intervention. This frees up cybersecurity professionals to focus on more strategic initiatives.

Can AI be integrated with existing security infrastructure and tools?

Yes, AI can be integrated with existing security infrastructure and tools through APIs, plugins, and interoperability standards. This allows organizations to leverage AI capabilities alongside their existing security investments.

Can AI be used for deception techniques to trap attackers?

Yes, AI can be used for deception techniques such as honeypots, honeytokens, and fake credentials to lure attackers into controlled environments and gather intelligence about their tactics, techniques, and procedures (TTPs).
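The honeytoken idea can be sketched in a few lines: plant a credential that no legitimate user or service is ever given, then treat any attempt to use it as a strong sign of intrusion. The example below shows only the basic deception mechanism, not an AI system; AI might be layered on top to generate convincing decoys or to analyze attacker behavior. The event structure, the `svc_backup_` prefix, and the function names are hypothetical.

```python
import secrets


def make_honeytoken(prefix="svc_backup_"):
    """Create a fake credential that no legitimate user or service is ever given."""
    return prefix + secrets.token_hex(8)


def honeytoken_hits(events, honeytokens):
    """Return the events whose credential matches a planted honeytoken."""
    tokens = set(honeytokens)
    return [event for event in events if event["credential"] in tokens]


decoy = make_honeytoken()

# Hypothetical authentication events collected from a login service.
auth_events = [
    {"source_ip": "198.51.100.23", "credential": "alice"},
    {"source_ip": "203.0.113.50", "credential": decoy},
]

for hit in honeytoken_hits(auth_events, [decoy]):
    # Any use of the decoy is suspicious because it was never issued to anyone.
    print("ALERT: honeytoken used from", hit["source_ip"])
```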

How do AI-driven security solutions impact the overall cost of cybersecurity operations?

AI-driven security solutions can reduce the overall cost of cybersecurity operations by automating routine tasks, improving operational efficiency, and enabling faster detection and response to security incidents. However, initial investment costs and ongoing maintenance may vary depending on the complexity of the AI deployment.

What are some common misconceptions about AI in cybersecurity?

Common misconceptions include the belief that AI can replace human cybersecurity professionals entirely, the assumption that AI is infallible and immune to attacks, and the idea that AI can solve all cybersecurity challenges without human oversight or intervention.

Conclusion

The growing use of AI in cybersecurity offers a significant opportunity to improve the efficacy and efficiency of security measures. AI has many applications with the potential to change the way cybersecurity is done. By streamlining processes, increasing precision, and decreasing expenses, AI might greatly fortify our defenses against ever-changing cyber threats. Machine learning algorithms can sift through mountains of data in search of patterns that people might miss, so businesses that integrate AI into their cybersecurity measures can monitor for and react to attacks as they happen.

In the ever-changing world of cybersecurity, where attacks can appear and change at any moment, the ability to detect and respond to threats in real-time is more important than ever. Organizations may enhance their security posture and keep up with the constantly changing cybersecurity landscape by leveraging AI, which has the potential to transform the industry. However, before implementing AI, it is essential to fully comprehend the dangers involved and take the necessary steps to reduce them.
