Sneak Preview: Google ‘uDepth’ Improves Real-time 3D Sensing for Smartphones

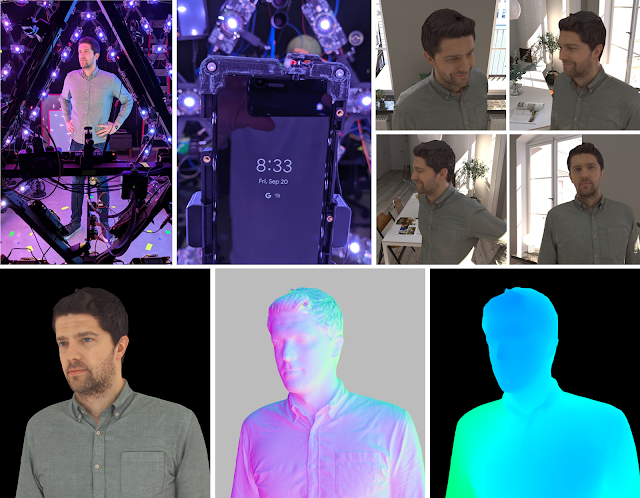

Depth sensing technology is taking camera-based photography to new heights. The technology uses some of the most advanced real-time 3D sensors, face detection, and computer vision algorithms. With smartphone technology more or less stagnant when it comes to voice calling, ED-based depth-sensing cameras for video calls and photography open up new opportunities for OEMs. Giving a boost to its Pixel 4 series of smartphones, Google has announced a new 3D depth-sensing technology called uDepth.

According to Google, uDepth sits on the front of the Pixel 4 in the form of a real-time IR Active Stereo Depth Sensor (ASDS).
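For readers new to stereo depth, the basic geometry behind any stereo sensor is triangulation: for a rectified camera pair, depth equals focal length times baseline divided by disparity. The sketch below illustrates only that textbook relation, not Google's implementation; the function name and the sample numbers are hypothetical and are not Pixel 4 specifications.

```python
def depth_from_disparity(focal_length_px: float,
                         baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters for a rectified stereo pair (pinhole camera model).

    focal_length_px: focal length expressed in pixels
    baseline_m:      distance between the two cameras in meters
    disparity_px:    horizontal pixel shift of the same point between views
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


if __name__ == "__main__":
    # Hypothetical values chosen for illustration only.
    print(depth_from_disparity(focal_length_px=500.0,
                               baseline_m=0.05,
                               disparity_px=50.0))
```

Because depth is inversely proportional to disparity, nearby objects such as a face at arm's length produce large, easy-to-measure disparities, which is one reason stereo suits front-facing use.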

uDepth by Google is a powerful depth-sensing application that combines innovations in computer vision, AI-based face detection, AR/VR, and typical photography corrections. When synced with the Pixel 4’s Face Unlock feature, uDepth helps authenticate the user faster while protecting the device from “spoof attacks”.

Apart from user authentication, Google’s uDepth also supports advanced photo touch-up capabilities and 3D effects to improve depth sensing in selfies.

Depth Sensing: Why Does It Matter Today?

Depth sensing is a fast-growing smartphone technology that also makes connected devices relevant to AR/VR experiences. It is used extensively to measure distance, volume, and illumination. Together with gesture sensing, obstacle avoidance, and object tracking sensors, depth-sensing platforms such as uDepth improve the overall 3D imaging experience, particularly in portrait mode.

Theoretically speaking, depth-sensing cameras work like software magic, improving the clarity of blurred images in portrait mode and picking up distant objects even in darkness.

uDepth and The Jargon

We came across at least four important technical terms associated with uDepth. These are:

- Neural Depth Refinement

- Computational Photography

- Volumetric capture system

- Autocalibration

Using deep learning, uDepth provides accurate metric depth to the Face Unlock feature. Using uDepth in combination with the Pixel 4 hardware and camera sensors, a user is able to achieve ground truth in real images. Put together in a synthetic frame, a Pixel 4 selfie becomes a smartphone magic potion for post-capture bokeh effects and social media.

We are yet to compare the Pixel 4’s depth-sensing performance with that of leading ToF providers. For example, the Samsung Galaxy Note10+ uses the DepthVision Camera, an advanced Time of Flight (ToF) camera that can accurately judge depth. The camera supports Live focus video, Quick Measure, and 3D Scanner.
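To make the contrast with stereo concrete, the idea behind Time of Flight is that distance follows from how long emitted light takes to bounce back: distance equals the speed of light times the round-trip time, divided by two. The snippet below is a minimal sketch of that relation only. The function name is hypothetical, and real ToF cameras often infer the delay from the phase shift of modulated light rather than timing a single pulse, so this should not be read as how any particular phone works.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0  # exact, by definition of the meter


def tof_distance_m(round_trip_time_s: float) -> float:
    """Distance in meters to a surface, given the light's round-trip time."""
    if round_trip_time_s < 0:
        raise ValueError("round-trip time cannot be negative")
    # Light travels to the object and back, so halve the total path.
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


if __name__ == "__main__":
    # A round trip of 2 nanoseconds corresponds to roughly 30 cm.
    print(tof_distance_m(2e-9))
```

The tiny time scales involved (nanoseconds for tens of centimeters) show why ToF needs dedicated sensor hardware, whereas stereo approaches lean more heavily on image matching in software.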

Source: Google AI Blog
