Why multimodal AI is taking over communication

By: Ian Reither, Co-Founder of Telnyx

The way businesses communicate with customers is undergoing a radical transformation. What was once a clear distinction between voice calls and text messages is fading as multimodal AI agents blur the lines between channels. These AI-driven assistants can seamlessly converse across SMS and voice, starting a conversation by text, switching to a call for more nuanced issues, and following up again via message, all without losing context.

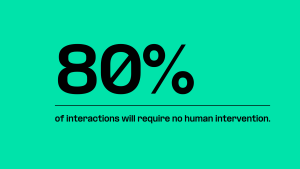

Companies are rethinking how they engage customers, solve issues, and scale. That change is driven by a fundamental shift in approach. Analysts suggest that AI will be involved in virtually every customer interaction, with 80% of interactions requiring no human intervention. The rise of voice and SMS agents is both inevitable and essential.

From bots to conversational AI

The earliest bots were simple SMS responders. Businesses used them for basic alerts, FAQs, or appointment confirmations. Messaging bots first gained traction in the 2010s. Not long after, voice assistants like Siri and Alexa went mainstream, making spoken interactions with machines feel natural.

In the world of business, however, voice was slower to progress. Early IVR systems were clunky, and most agent builders were focused on text. But thanks to advancements in natural language processing (NLP), automatic speech recognition (ASR), and expressive text-to-speech (TTS), we now have real-time voice bots that sound and respond almost like humans.

Future-ready platforms support both voice and SMS natively. An AI agent can initiate contact via text, escalate to a call mid-conversation, and then summarize the interaction via SMS, all while maintaining continuity and tone. This convergence of modalities will become central to modern communication.

Why multimodal matters

Customers don’t care how they connect; they just want answers. Some prefer to talk, others to text, and many switch back and forth. A multimodal AI agent provides that flexibility while preserving context and personalization.

More importantly, it enables redundancy. If a customer doesn’t answer a call, AI can send a follow-up message. If a voice call is hard to hear, it can offer to text a link or a summary. By combining the strengths of each medium (empathy and speed via voice, convenience and clarity via text), businesses provide a complete and responsive experience.

This integration also avoids the fragmented experiences of the past. No more repeating details because the SMS assistant “didn’t know” what you told the phone agent. With shared AI brains across modalities, customers get consistent answers, tone, and service.
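
The fallback and shared-context ideas above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not any vendor's API: the `Conversation` class, the `reach_customer` helper, and the two transport callables are hypothetical names, and the transports are stand-ins for whatever calling and messaging service a business actually uses.

```python
from dataclasses import dataclass, field


@dataclass
class Conversation:
    """Context shared by every channel for one customer (hypothetical model)."""
    customer: str
    events: list = field(default_factory=list)

    def record(self, channel: str, text: str) -> None:
        # Every channel writes to the same history, so nothing has to be repeated.
        self.events.append((channel, text))

    def summary(self) -> str:
        return " | ".join(f"{channel}: {text}" for channel, text in self.events)


def reach_customer(convo, place_call, send_sms, message):
    """Try voice first; fall back to SMS if the call goes unanswered."""
    if place_call(convo.customer, message):
        convo.record("voice", message)
        return "voice"
    send_sms(convo.customer, message)
    convo.record("sms", message)
    return "sms"


# Stand-in transports; a real deployment would call a communications API here.
sent = []
convo = Conversation(customer="+15550100")
convo.record("sms", "Customer asked to reschedule")

channel = reach_customer(
    convo,
    place_call=lambda number, text: False,  # simulate an unanswered call
    send_sms=lambda number, text: sent.append((number, text)),
    message="Your new slot is Tuesday at 10am",
)

print(channel)
print(convo.summary())
```

Because both channels read and write the same `Conversation`, whichever one picks up next sees the full history, which is the property that prevents the "didn’t know" problem.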

The technical challenge: Latency and infrastructure

Real-time voice AI is hard. Latency (the delay between hearing and responding) is a conversation killer. Texting is forgiving, but voice demands sub-second reactions to feel natural. Achieving real-time responses requires lightning-fast speech recognition, rapid LLM-based comprehension, and TTS systems that can begin speaking in milliseconds.

Companies like ElevenLabs have led breakthroughs in TTS latency and realism, but that’s only part of the puzzle. Network delays, especially in international calls, can add hundreds of milliseconds. The physical infrastructure, where the AI is hosted, and how it connects to telecom networks all play a huge role.
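
One way to reason about this is as a latency budget: each stage of the voice pipeline spends part of a sub-second allowance. The sketch below is purely illustrative. The stage names and millisecond values are assumptions chosen for the example, not measurements of any product, and the 300 ms penalty simply stands in for "hundreds of milliseconds" of added network delay.

```python
BUDGET_MS = 1000  # "sub-second" target for a natural-feeling reply

# Hypothetical per-stage delays in milliseconds (assumed, not measured).
STAGES_MS = {
    "network_round_trip": 150,
    "speech_recognition": 200,
    "llm_first_token": 350,
    "tts_first_audio": 150,
}


def total_latency(stages):
    """Sum the delay contributed by every stage of the pipeline."""
    return sum(stages.values())


def slowest_stage(stages):
    """Return the name of the stage that consumes the most time."""
    return max(stages, key=stages.get)


local_total = total_latency(STAGES_MS)

# Same pipeline, but with an assumed 300 ms of extra international network delay.
international = dict(STAGES_MS, network_round_trip=STAGES_MS["network_round_trip"] + 300)
international_total = total_latency(international)

print(local_total, local_total <= BUDGET_MS)
print(international_total, international_total <= BUDGET_MS)
print(slowest_stage(STAGES_MS))
```

With these assumed numbers the local call fits the budget and the international one does not, even though the AI components are identical. Only the network changed.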

That’s why providers that offer global points of presence and private IP networks stand out. They bring the AI closer to the user, reducing lag and ensuring crisp, clear calls. AI companies working with such CPaaS providers often avoid needing latency-masking tricks altogether.

But beyond speed, there’s complexity in navigating telecom regulations, managing global phone number provisioning, and ensuring delivery across different voice and messaging networks with varying rules. AI-powered voice and SMS are deeply reliant on robust global infrastructure.

Equally important is the ease of implementation. The strongest platforms offer intuitive APIs and developer-friendly tools, making it simpler for businesses to build and deploy real-time voice and messaging agents without needing deep telecom expertise.

Great voices aren’t enough without a full stack

High-fidelity voice models from companies like ElevenLabs and Sesame have raised the bar for synthetic speech. With human-like tone, pacing, and emotion, they make AI agents sound remarkably real, and that matters because a natural voice builds trust while keeping users engaged.

However, sounding human isn’t enough. Voice AI also requires a fast, accurate “ear” for speech recognition, an intelligent “brain” for contextual understanding, and deep integrations with business systems to actually get things done. That includes accessing CRMs, databases, and internal APIs, as well as having the ability to transfer live calls to human agents without missing a beat. Without those capabilities, even the most advanced-sounding voice falls short. A pleasant-sounding voice that can’t check order status or reschedule an appointment is still a dead end.

Beyond that, great agents need awareness and emotional intelligence to adjust their tone if a user is upset and the ability to switch from voice to text or escalate to a human when needed. The orchestration layer is what makes AI agents useful, turning conversations into action.

Integration is the invisible engine powering AI agents

Deep integration into business systems is what separates basic bots from truly beneficial AI agents. Take healthcare, for example. A voice assistant that reminds a patient about an appointment must update the scheduling system if the appointment changes. In logistics, an AI agent answering “Where’s my package?” must access real-time tracking systems. In retail, handling returns requires accessing order databases, refund processes, and customer profiles.

The same applies to contact centers: a voice agent might authenticate a user, access recent purchases, and even update a support ticket, all mid-conversation. Without access to these systems, the AI is limited to surface-level Q&A.

The best AI platforms prioritize these connections. Integration is what allows AI to actually act, whether it’s via APIs, native data connectors, or embedded business logic.
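
As a rough sketch of what "allowing AI to act" can look like, the example below maps a recognized intent to a function that touches a business system, and escalates when no such function exists. Everything here is hypothetical: the in-memory dictionaries stand in for real order and scheduling systems, and the intent names and `handle_intent` helper are invented for illustration.

```python
# In-memory stand-ins for real business systems (assumed data).
ORDERS = {"A100": "shipped"}
APPOINTMENTS = {"P7": "Monday 9am"}


def check_order_status(order_id):
    """Look up an order in the (stand-in) order database."""
    return ORDERS.get(order_id, "unknown")


def reschedule_appointment(appointment_id, new_time):
    """Write the change back to the (stand-in) scheduling system."""
    APPOINTMENTS[appointment_id] = new_time
    return f"Appointment {appointment_id} moved to {new_time}"


# The agent can only act on intents that are wired to a tool.
TOOLS = {
    "check_order_status": check_order_status,
    "reschedule_appointment": reschedule_appointment,
}


def handle_intent(intent, **params):
    """Run the matching tool, or signal a human handoff if none exists."""
    tool = TOOLS.get(intent)
    if tool is None:
        return "handoff_to_human"
    return tool(**params)


print(handle_intent("check_order_status", order_id="A100"))
print(handle_intent("reschedule_appointment", appointment_id="P7", new_time="Tuesday 10am"))
print(handle_intent("dispute_charge", order_id="A100"))
```

The same dispatch table serves voice and SMS alike: the channel determines how the request arrives and how the reply is delivered, not what the agent is able to do.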

How CPaaS providers are enabling real-time voice and SMS AI

AI-powered voice and SMS assistants depend heavily on the underlying communication infrastructure. That’s where CPaaS (Communications Platform as a Service) providers come in.

- Twilio pioneered developer-friendly APIs for voice and messaging, making it a go-to for early chatbot builders. Its vast ecosystem remains a strong asset.

- Other providers take a different approach, owning a voice-centric private global network to minimize latency and improve call quality. Their infrastructure-first philosophy appeals to AI builders who care about milliseconds.

- Infobip offers unmatched SMS connectivity, especially in emerging markets. Their strategy positions SMS as the foundational layer for AI.

- Sinch and Vonage bring telecom muscle and carrier interconnects, enabling global scale with more of an SMS bent, whereas some platforms are programmable voice-first.

The next winners in this space will be those who combine infrastructure control with AI-aware features: fast ASR/TTS, edge hosting for LLMs, and tools for developers to tune latency and performance.

Where multimodal AI agents are winning across industries

Healthcare is using voice and SMS agents to schedule appointments, remind patients, and even monitor recovery via check-ins. One large provider reported a 30-40% operational cost reduction using voice AI for front-office tasks.

Logistics firms are deploying voice assistants for delivery coordination, ETA updates, and customer tracking inquiries. AI agents handle huge call volumes with no wait time, especially during peak seasons, reducing the need for seasonal hiring.

Customer service is changing quickly. AI agents can now resolve common issues (password resets, order status, billing questions), hand off complex cases with context, and even upsell customers with personalized recommendations. Across these industries, multimodal agents are enabling 24/7 service, reducing costs, and boosting customer satisfaction. NPS improvements of +20% and significant cost savings are not uncommon in large-scale rollouts.

The human element

As AI agents become more capable, the human element is also changing. Customers are increasingly open to engaging with AI-powered assistants, especially when it means faster service. Many even prefer it for routine tasks.

However, when issues get complex or emotionally charged, customers expect a smooth handoff to a real person. That’s where many AI systems fall short. Without the ability to transfer mid-call to a human agent, both gracefully and with context, the experience quickly breaks down.

The ideal is for AI to handle routine requests while more complex or sensitive moments are handed off to a human without breaking the flow of the conversation. This seamless escalation is essential for building trust, and it’s only possible with infrastructure that’s purpose-built for real-time communication.

AI that understands, talks, and gets things done

We’re at the beginning of a massive shift. Voice and SMS AI agents are changing the way businesses interact with customers. It’s less about replacing people and more about making conversations faster, smarter, and more useful. The best agents hold natural conversations, complete tasks, adjust to context, and move smoothly between voice and text.

To get there, businesses must:

- Invest in fast, low-latency infrastructure

- Choose CPaaS partners that understand both voice and SMS in the context of AI

- Integrate deeply with third-party data

- Embrace multimodality

The result is AI agents that resolve issues instantly, scale to global audiences, and deliver a level of responsiveness no human team can match while still providing an experience customers actually prefer.

The bots are talking and texting, and increasingly, the world is listening.