Making AI More Accessible: AWS & Hugging Face Partner Up To Democratize Machine Learning Tools

In recent years, artificial intelligence has moved to the center of the technology industry. Recent developments in machine learning, especially generative AI, have opened up countless new opportunities for developers.

Today, generative AI can handle tasks such as answering questions, writing essays and jokes, summarizing text, and generating code. It creates novel content and, at the same time, is transforming the way content is produced and consumed.

To help more people innovate with the latest AI tools and models, Amazon Web Services (AWS), which has a long-standing interest in generative AI, announced on its blog an expanded collaboration with AI startup Hugging Face. One of the most familiar, and earliest, examples of Amazon’s AI tools is Alexa, which delivers a seamless conversational experience.

The collaboration aims to speed up the training of large language and vision models and run them efficiently, so that developers can build generative AI applications. The two companies share a common vision: making next-generation AI and machine learning models accessible to everyone. Through this collaboration, AWS aims to give developers access to high-end tools at low cost.

Generative AI Market – Growth and Investment

Generative AI has become a priority for brands, and it has the potential to transform the entire content-creation landscape.

According to a report by the Financial Times, more than $2 billion has been invested in generative AI, an increase of 425% since 2020.

According to Reportlinker.com, the global generative AI market is projected to reach $53.9 billion by 2028, growing at a compound annual growth rate (CAGR) of 32.2% over the forecast period.
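Both market figures rest on simple growth arithmetic. The minimal Python sketch below, using only the numbers quoted in this section, shows how a percentage increase and a CAGR each relate a starting value to a final one. The six-year forecast window (2022 to 2028) is an assumption; the Reportlinker forecast period is not stated here.

```python
def implied_start(final_value: float, pct_increase: float) -> float:
    # A "425% increase" means final = start * (1 + 425 / 100) = start * 5.25.
    return final_value / (1 + pct_increase / 100)


def compound(start_value: float, cagr: float, years: int) -> float:
    # Compound annual growth: value_n = value_0 * (1 + CAGR) ** years.
    return start_value * (1 + cagr) ** years


def implied_base(final_value: float, cagr: float, years: int) -> float:
    # Invert the CAGR formula to back out the base-year value.
    return final_value / (1 + cagr) ** years


# Financial Times figure: more than $2B invested, up 425% since 2020.
# Because the total is "more than" $2B, this is a lower bound for 2020.
funding_2020 = implied_start(2.0, 425)  # billions of USD

# Reportlinker figure: $53.9B by 2028 at a 32.2% CAGR.
# ASSUMPTION: a six-year forecast period (2022-2028), not given in the source.
market_base = implied_base(53.9, 0.322, 6)  # billions of USD

print(f"Implied 2020 funding: at least ${funding_2020:.2f}B")
print(f"Implied base-year market (assumed 6 years): ${market_base:.1f}B")
```

Read this way, a 425% increase means the 2020 figure was 1/5.25 of today’s, or about $0.38 billion if the current total were exactly $2 billion.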

Gartner predicts that by 2025, generative AI techniques will help discover more than 30% of new drugs and materials.

Uniform Distribution of AI – the Need of the Hour

Clement Delangue, CEO of Hugging Face, stated plainly that the future of AI has arrived but is not evenly distributed. He stressed that accessibility and transparency play a vital role in building tools and sharing progress:

“Amazon SageMaker and AWS-designed chips will enable our team and the larger machine learning community to convert the latest research into openly reproducible models that anyone can build on.”

Adam Selipsky, CEO of AWS, said that generative AI has the potential to transform entire industries, but that its cost and the expertise it requires keep it out of reach for most developers. He added:

“Hugging Face and AWS are making it easier for customers to access popular machine learning models to create their own generative AI applications with the highest performance and lowest costs. This partnership demonstrates how generative AI companies and AWS can work together to put this innovative technology into the hands of more customers.”

Additionally, M5, a group within Amazon Search that helps teams across Amazon bring large models to their applications, has trained large models to improve search results on Amazon.com.

AWS has innovated continuously across ML, including infrastructure, tools on Amazon SageMaker, and AI services such as Amazon CodeWhisperer (a service that improves developer productivity by generating code recommendations based on the code and comments in an IDE). AWS has also created purpose-built ML accelerators for training (AWS Trainium) and inference (AWS Inferentia) of large language and vision models on AWS.

Hugging Face selected AWS because it offers flexibility across state-of-the-art tools to train, fine-tune, and deploy Hugging Face models, including Amazon SageMaker, AWS Trainium, and AWS Inferentia. Developers using Hugging Face can now more easily optimize performance and lower costs to bring generative AI applications to production faster.

Generative AI – Enabling Cost Effectiveness and Improved Performance

Generative AI is a lucrative field, but it can also be expensive and labor-intensive. Building, training, and deploying large language and vision models is costly and requires deep expertise in machine learning (ML). Because some of these models are complex, with billions of parameters, generative AI remains out of reach for many developers.

The Hugging Face and AWS collaboration aims to bridge this gap by making it easier for developers to use AWS services together with Hugging Face models, especially for generative AI applications.

Let’s look at some examples from Amazon:

Amazon EC2 Trn1 instances, powered by AWS Trainium, deliver faster time to train with up to 50% cost-to-train savings over comparable GPU-based instances.

The new Amazon EC2 Inf2 instances, powered by the latest generation of AWS Inferentia, are purpose-built to deploy the latest generation of large language and vision models. Compared with Inf1, they deliver up to 4x higher throughput and up to 10x lower latency. Developers can use AWS Trainium and AWS Inferentia through managed services such as Amazon SageMaker, which provides tools and workflows for ML, or they can self-manage them on Amazon EC2.
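“Cost-to-train” is the total cost of a training job, that is, the hourly instance price times the wall-clock hours, so savings can come from a cheaper instance, a faster run, or both. Likewise, higher per-instance throughput reduces how many instances a fixed load needs. The Python sketch below illustrates both calculations. All prices, durations, and request rates are hypothetical placeholders, not AWS figures.

```python
import math


def cost_to_train(hourly_price: float, hours: float) -> float:
    # Total job cost = instance price per hour x wall-clock training hours.
    return hourly_price * hours


def savings_pct(baseline_cost: float, new_cost: float) -> float:
    # Percentage saved relative to the baseline (e.g. a GPU-based instance).
    return 100.0 * (1.0 - new_cost / baseline_cost)


def instances_needed(target_rps: float, per_instance_rps: float) -> int:
    # Round up: a fractional instance still means provisioning a whole one.
    return math.ceil(target_rps / per_instance_rps)


# HYPOTHETICAL inputs, chosen only to illustrate the arithmetic.
gpu_job = cost_to_train(hourly_price=40.0, hours=10.0)  # baseline GPU run
trn1_job = cost_to_train(hourly_price=25.0, hours=8.0)  # cheaper and faster
print(f"Cost-to-train savings: {savings_pct(gpu_job, trn1_job):.0f}%")

# "Up to 4x higher throughput": the same load needs fewer instances.
inf1 = instances_needed(target_rps=1000, per_instance_rps=100)
inf2 = instances_needed(target_rps=1000, per_instance_rps=400)
print(f"Instances for 1000 req/s: Inf1={inf1}, Inf2={inf2}")
```

In this made-up scenario, the Trn1 run is both cheaper per hour and shorter, and the two effects together halve the job cost. Latency is not modeled, since it depends on the model, batch size, and serving setup.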

How to Get Started

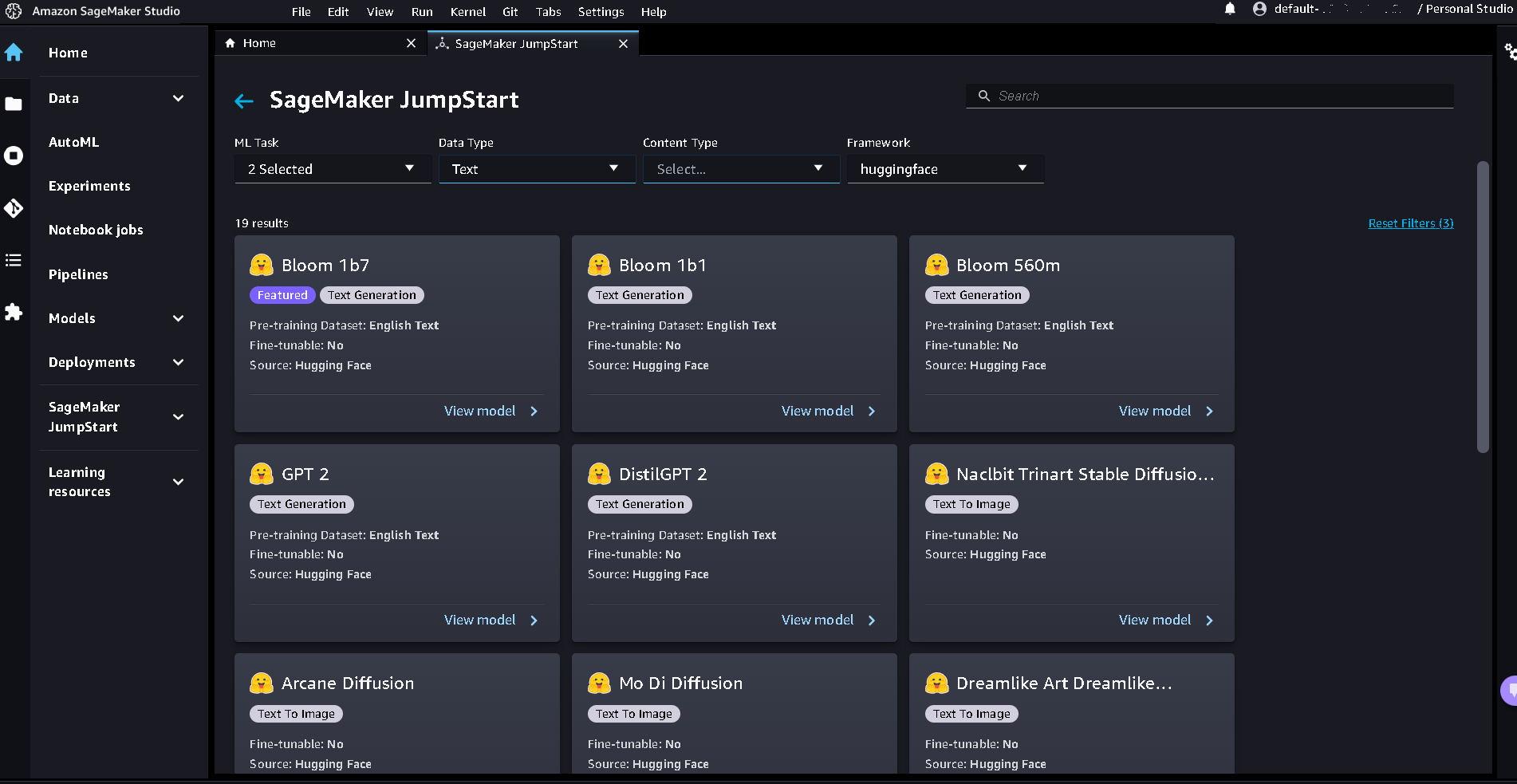

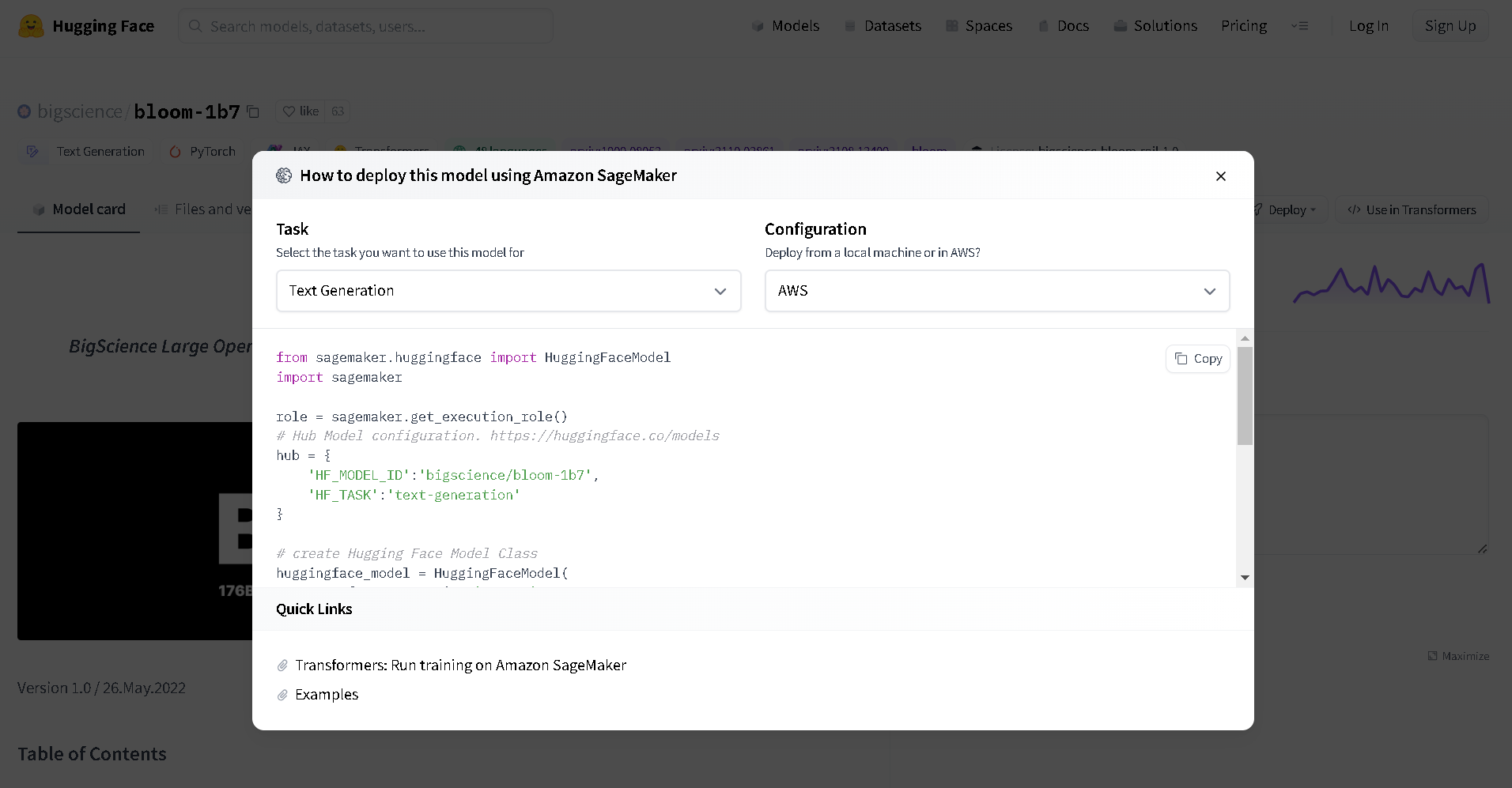

Users can start using Hugging Face models on AWS through SageMaker JumpStart, the Hugging Face AWS Deep Learning Containers (DLCs), or the tutorials for deploying models to AWS Trainium or AWS Inferentia.

The Hugging Face DLCs come packaged with optimized Transformers, Datasets, and Tokenizers libraries, so you can fine-tune and deploy generative AI applications at scale in hours instead of weeks, with minimal code changes. SageMaker JumpStart and the Hugging Face DLCs are available in all Regions where Amazon SageMaker is available, at no additional cost. To learn more, read the documentation and discussion forums, or try the sample notebooks.
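For the DLC route, one common pattern is the SageMaker Python SDK’s `HuggingFaceModel` class, which points the Hugging Face inference container at a model on the Hugging Face Hub through the `HF_MODEL_ID` and `HF_TASK` environment variables. The sketch below is a minimal example under stated assumptions: it needs the `sagemaker` package and configured AWS credentials, and the model ID, task, IAM role ARN, instance type, and framework versions are illustrative placeholders. Check the Hugging Face and SageMaker documentation for currently supported version combinations. Deploying creates a billable endpoint.

```python
"""Sketch: deploy a Hugging Face Hub model to a SageMaker real-time endpoint
with the Hugging Face Inference DLC, via the SageMaker Python SDK.

Assumptions: `sagemaker` is installed, AWS credentials are configured, and
the versions/instance type below are placeholders to verify against the docs.
"""


def build_hub_env(model_id: str, task: str) -> dict:
    # The Hugging Face Inference DLC reads these variables to download the
    # model from the Hub and wrap it in a `transformers` pipeline for `task`.
    return {"HF_MODEL_ID": model_id, "HF_TASK": task}


def deploy(model_id: str, task: str, role_arn: str,
           instance_type: str = "ml.m5.xlarge"):
    # Imported lazily so build_hub_env() can be used without the SDK installed.
    from sagemaker.huggingface import HuggingFaceModel

    model = HuggingFaceModel(
        env=build_hub_env(model_id, task),
        role=role_arn,                # IAM role that SageMaker assumes
        transformers_version="4.26",  # ASSUMED versions: must match a DLC
        pytorch_version="1.13",       # combination that AWS actually publishes
        py_version="py39",
    )
    # Creates the SageMaker model, endpoint configuration, and endpoint.
    return model.deploy(initial_instance_count=1, instance_type=instance_type)
```

For example, `deploy("distilbert-base-uncased-finetuned-sst-2-english", "text-classification", role_arn)` returns a predictor; `predictor.predict({"inputs": "..."})` sends a request, and `predictor.delete_endpoint()` removes the endpoint afterward to stop charges. Keeping the environment-building helper separate lets you inspect the configuration without calling AWS.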

Final Thoughts

Generative AI is a work in progress with enormous potential. Many brands are already embedding it in their applications, expecting this branch of AI to lead the digital content segment in the coming years. To keep pace with this growth and innovation, the partnership between Hugging Face and AWS aims to make AI open to all. Developers get a chance to build, train, and deploy the newest machine learning models with the help of purpose-built tools.