The Five Key Takeaways That We Should Expect From The AI Safety Summit In The UK

The first annual Artificial Intelligence (AI) Safety Summit will take place on November 1 and 2, 2023, at Bletchley Park, the site of British codebreaking during World War II. The government hopes to use the meeting to promote a global, coordinated approach to AI safety, with an emphasis on foundation models (large neural networks that generate human-sounding text, such as OpenAI’s GPT and Google’s Gemini). The summit will discuss the risks associated with these cutting-edge models, along with strategies for promoting the responsible and secure advancement of AI.

What Potential Effects May the AI Safety Summit Have?

The references to biosecurity and cybersecurity on the summit agenda reflect concerns that advanced artificial intelligence (AI) could enable or accelerate the creation of deadly bioweapons, or of cyberattacks that bring down the global internet, posing an existential threat to humanity or modern civilization.

Outlook of the Forthcoming Summit

The forthcoming Summit represents the British government’s position on AI, which is supportive of research and development but wary of potential consequences. Accordingly, the current regulatory framework aims to ensure that AI is developed in a secure environment, making the United Kingdom a prime location for AI developers.

According to official statements, the Government views the Summit as a “first step” in its efforts to have worldwide debates about AI safety.

One interesting trend is the government’s recent prodding of AI firms like OpenAI and DeepMind to disclose more details about their models’ inner workings. The government hopes to establish an understanding of the scope and technical aspects of this information in time for the Summit.

As a result of these developments, AI law and regulation may expand further than was previously expected.

Who Is Attending the AI Safety Summit?

The UK government’s original plan for the conference was to bring together “country leaders” from the world’s top economies with academics and representatives from tech businesses leading the way in AI research to establish a new global regulatory agenda.

Several world leaders will attend, including the Prime Minister of the United Kingdom, Rishi Sunak, and the Secretary of State for Science, Innovation and Technology, Michelle Donelan; the Vice President of the United States, Kamala Harris; the President of the European Commission, Ursula von der Leyen; and the Prime Minister of Italy, Giorgia Meloni.

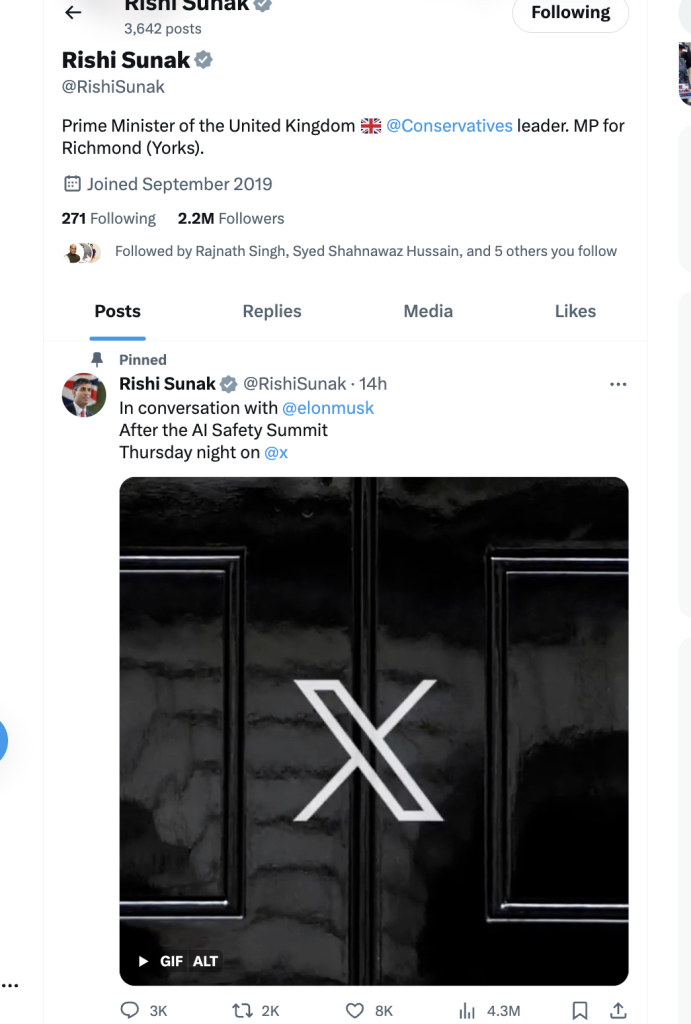

Government sources have confirmed that Elon Musk will be present at Rishi Sunak’s AI safety symposium this week at Bletchley Park and that the two men will hold a live conversation on the billionaire’s social media platform X on Thursday.

Ursula von der Leyen, head of the European Commission, and Giorgia Meloni, prime minister of Italy, are among the most prominent politicians slated to attend the meeting.

Executives from a variety of technology companies will be in attendance, including Demis Hassabis of Google DeepMind (Google’s AI division), OpenAI officials, and representatives of Mark Zuckerberg’s company Meta. Nick Clegg, the former UK Deputy Prime Minister and now Meta’s president of global affairs, will also be present.

Focus

According to official documents, we may expect coverage of the two categories of AI systems described above, although the emphasis will be placed on cutting-edge research.

What Is a ‘Frontier AI’ Model?

Frontier AI refers to extremely powerful, multifunctional AI models (e.g., foundation models) that match or surpass the capabilities of today’s most advanced models, and hence pose major risks related to misuse, unanticipated developments, and loss of control over the technology.

These are the cutting-edge base models behind systems such as OpenAI’s GPT-3 and GPT-4 (the basis for the well-known ‘ChatGPT’). While these models undoubtedly hold great promise and could spur significant innovation, their power to harm should not be taken lightly. As they improve toward human-level capability, they could threaten public safety and global security if used to exploit flaws in software systems or to disseminate convincing misinformation on a massive scale.

The “human in the loop” technique is already in use by companies like Unilever to get value from GPT, but typically in non-critical circumstances and usually to propose courses of action for an employee to examine and approve.

The Five Key Takeaways That We Should Expect from The AI Safety Summit in the UK

The summit has five basic objectives, discussed in depth below to give our readers a fair idea of what to expect.

1. Recognize the dangers posed by Frontier AI and the necessity to take corrective measures.

This is the primary objective of the summit: building a shared understanding of the risks posed by Frontier AI and of the need for action. One theme is protecting societies around the world from abuses of emerging AI, including but not limited to the use of AI in biological or cyber attacks, the creation of potentially harmful technologies, and tampering with essential infrastructure. The risks of unpredictable ‘leaps’ in Frontier AI capability as models are rapidly scaled, new predictive methods, and the implications for the future development of open-source AI will also be discussed.

Risks associated with advanced systems deviating from human values and intentions will be discussed under ‘Loss of Control’, while risks associated with the integration of frontier AI will be discussed under ‘Integration of Frontier AI’; these include election disruption, bias, crime, online safety, and the exacerbation of global inequalities.

2. Create a plan for future international cooperation on Frontier AI safety issues, such as how to effectively assist existing national and international institutions.

The following questions will be discussed at the summit under its second objective.

What should Frontier AI developers do to scale responsibly?

What should National Policymakers do about the risks and opportunities of AI?

What should the International Community do about the risks and opportunities of AI?

What should the Scientific Community do concerning the risks and opportunities of AI?

Sunak believes the summit will lead to an agreement on the dangers of unrestrained AI growth and the most effective means of protecting against them. For instance, authorities are now debating how to word a statement on the dangers of artificial intelligence; a purportedly leaked draft warns of “catastrophic harm” that AI may bring about.

3. Establish company-wide safety protocols.

The aim is to determine the steps each company can take to improve AI safety.

4. Explore possible areas of collaboration in AI safety research.

Key topics will include the assessment of model capabilities and the creation of new standards to aid governance. The goal is to identify possible areas for cooperation in AI safety research.

5. Demonstrate how the advancement of AI in a secure way will pave the way for its application to societal good.

The aim is to demonstrate how safeguarding AI’s progress might pave the way for its use in philanthropic causes worldwide. The summit will showcase the factors that ensure the safe development of AI and enable it to be used as a technology for good on a global level.

Rapidly developing artificial intelligence presents immense opportunities to increase productivity and benefit society at large. The introduction of models with increasingly general capabilities, together with step changes in accessibility and application, could bring up to $7 trillion in growth over the next 10 years and substantially faster drug discovery.

The summit’s participants hope to agree on concrete measures to lessen the risks that cutting-edge AI poses to society. The most crucial areas for international cooperation will be evaluated, and strategies for making those areas operational will be discussed.

What Is the Summit Likely to Achieve?

The government hopes to negotiate an agreement with at least some AI firms to slow down the research and development of Frontier AI. Officials think that having the big players in AI in the same room will put more pressure on them to work together.

Following the model of the G7, G20, and COP conferences, Sunak hopes this will be the first of many annual worldwide AI summits. Even if he is voted out of office next year and never attends another, these events could become one of his most enduring legacies if they continue.

The Global AI Safety Summit, hosted by the UK Government, is an important landmark in the rapidly developing field of artificial intelligence. Its recently released agenda gives us a rare look into the thoughts of politicians, academics, and industry executives as they prepare to tackle AI’s biggest problems.
