How Agencies Can Use AI Image Generation to Build Better and More Creative Ads

Whether it’s designers and artworkers experimenting with DALL-E 2 and Midjourney for creative inspiration or coders and copywriters using ChatGPT to streamline their workflows, there are plenty of ways to introduce AI tools into the workplace. Yet for all of the social media posts and articles I’ve seen selling the benefits of AI tools, there’s not much information out there guiding agencies through the process of AI image generation.

At my agency, where I’m lead creative and we specialize in developing mobile ads, we’ve been experimenting with AI image generation tools for the last few months. By introducing DALL-E 2, Midjourney, and DreamStudio (Stable Diffusion) into our workflow, we’ve been able to streamline our processes, free up internal resources, and ultimately reduce costs.

It’s worth noting that we only use these tools during the pre-production stages of storyboarding, artwork, and concept development. We’re not suggesting that these tools be used to replace existing staff or deliver complete artwork. Rather, they should be used to streamline workflows and improve ways of working.

All of that said, figuring out these tools and training staff to use them has been a continuous learning experience. Some AI tools can be intimidating for first-time users, and results can vary significantly depending on factors ranging from the differences between individual tools to the quality of the prompts you feed them.

So, whether you’re an agency owner or employee considering adding AI tools to your workflow, or you’re simply curious about how AI image generation can be applied, here are the benefits of these tools, how to use them, and the key differences between the main options available.

What is AI image generation?

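Simply put, AI image generation is the creation of images using AI algorithms. The tools covered here rely on a process called diffusion: a model trained on a large collection of captioned images learns to remove noise, so it can start from random noise and refine it, step by step, into recognizable shapes that match your prompt.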
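To make the idea of "noise" more concrete, here is a toy sketch of the forward (noising) half of the common DDPM-style diffusion formulation: during training, images are mixed with Gaussian noise according to a schedule value, here called `alpha_bar`, and the model learns to undo that mixing. Generation runs the learned reverse process from pure noise, guided by the prompt; that trained network is not shown. The function name `add_noise` and the four-pixel "image" are illustrative assumptions, not any tool's actual code.

```python
import math
import random

def add_noise(x0, alpha_bar, rng):
    """Forward (noising) step of a diffusion model on a flat list of pixel values.

    x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise
    alpha_bar = 1.0 leaves the image untouched; alpha_bar = 0.0 gives pure noise.
    """
    noise = [rng.gauss(0.0, 1.0) for _ in x0]  # standard Gaussian noise, one value per pixel
    signal_scale = math.sqrt(alpha_bar)
    noise_scale = math.sqrt(1.0 - alpha_bar)
    xt = [signal_scale * p + noise_scale * n for p, n in zip(x0, noise)]
    return xt, noise

# A tiny stand-in "image": four pixel values scaled to roughly [-1, 1].
image = [0.5, -0.2, 0.9, 0.0]
for alpha_bar in (1.0, 0.5, 0.0):
    noisy, _ = add_noise(image, alpha_bar, random.Random(42))
    print(alpha_bar, [round(v, 3) for v in noisy])
```

As `alpha_bar` falls from 1.0 to 0.0, the original pixels fade out and the noise takes over; a trained model learns to reverse this one step at a time.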

Three of the most popular AI image generation tools are DALL-E 2, Midjourney, and DreamStudio (Stable Diffusion). All three generate images from text prompts (text-to-image) or from existing images (image-to-image).

The main differences between DALL-E 2, Midjourney and Stable Diffusion: Pros and Cons

DALL-E 2

DALL-E 2 is the most widely recognized of the three. It can be accessed by signing up through the OpenAI website, and new users receive free starter credits that can be used to generate images from text prompts. Once the free credits are used up, DALL-E 2 is a paid service and new credits have to be purchased in packs: 115 credits cost $15 and will generate 460 images, since each credit covers one prompt that returns four images.

Regarding image quality, DALL-E 2 is more focused on photo-realistic images and does a great job at creating realistic lighting conditions and replicating certain art styles. Still, it falls slightly short of the image quality and accuracy generated by Midjourney and Stable Diffusion.

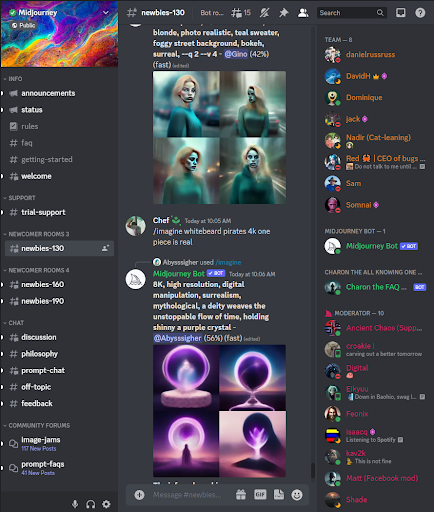

Midjourney

You’ll need a Discord account to access Midjourney, as it runs entirely through Discord. To get started, visit the Midjourney website and register; you’ll then receive an invitation to the Midjourney channel along with instructions on how to generate your first images.

As with DALL-E 2, you can generate a set number of images for free, but after that you’ll need to pay for a $10 monthly membership that lets you generate 200 images per month. Midjourney excels at generating environmental concept art, as its model has been trained on that kind of imagery, which makes it a great tool for background and concept artists and for storyboarding landscapes.
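Using only the prices quoted above, a quick calculation puts the two paid options on a per-image footing. The plan names and figures are taken from this article and may have changed since; `cost_per_image` is just an illustrative helper.

```python
# Prices and image counts as quoted in this article; check current pricing before budgeting.
PLANS = {
    "DALL-E 2 credit pack": {"price_usd": 15.00, "images": 460},  # 115 credits x 4 images per prompt
    "Midjourney basic membership (monthly)": {"price_usd": 10.00, "images": 200},
}

def cost_per_image(price_usd, images):
    """Average cost of one generated image, in US dollars."""
    return price_usd / images

for name, plan in PLANS.items():
    print(f"{name}: ${cost_per_image(**plan):.3f} per image")
```

With these figures, DALL-E 2 works out at roughly $0.033 per image and Midjourney at $0.050, although the two plans aren’t directly comparable (one-off credits versus a monthly allowance).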

Stable Diffusion

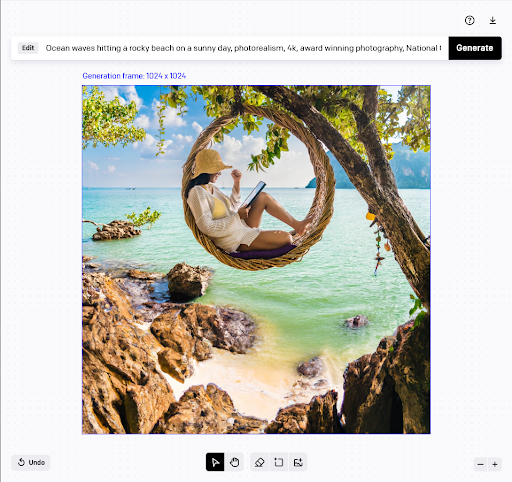

Stable Diffusion is the most flexible of the three tools and is best suited to designers, artworkers, and producers. It’s an open-source model that users can host behind their own API, and plugins have already been developed for many image editing applications, including Photoshop, Clip Studio, and Krita. Users can host Stable Diffusion locally on their own PC or access it through services like DreamStudio.

The steps to install a plugin into your preferred creative software depend on its developer, but many use user-friendly services like Adobe Exchange to get users set up quickly. The advantage of running Stable Diffusion locally is that it doesn’t cost anything extra to generate images, but it can easily max out your GPU and its VRAM, so you’ll need a decent PC or laptop.

Example prompt: ‘NBA basketball player dunking, depicted as an exploding universe, stardust, surrealism, painting’

Which AI image generator is best?

While DALL-E 2 and Midjourney are best used for exploring different art styles and visual concepts in a short amount of time, one of the biggest benefits of Stable Diffusion is creating new iterations and options from existing artwork through processes known as inpainting and outpainting. At the time of writing, Midjourney doesn’t provide any editing tools, whereas Stable Diffusion supports both inpainting and outpainting (depending on the plugin or hosting service).

Inpainting replaces sections of existing artwork (which can be selected using Photoshop’s content-aware tool, for example) with newly generated content. Say we created a storyboard of a man in a suit for a client, and their feedback was that they wanted to see him in a variety of different suits: we could open the image, select his suit with the same tool, and then generate new options using AI inpainting through Stable Diffusion.
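Under the hood, inpainting tools generally take two inputs: the original image and a black-and-white mask of the area to regenerate. Hugging Face diffusers’ `StableDiffusionInpaintPipeline`, for instance, documents that white mask pixels are repainted and black ones are preserved. The sketch below builds such a mask in plain Python; the rectangular region and the helper name `rectangular_mask` are simplifying assumptions, since in practice the mask would come from a freehand selection exported from Photoshop.

```python
def rectangular_mask(width, height, box):
    """Build an inpainting mask as rows of 0/255 values.

    box = (left, top, right, bottom) in pixels, with right/bottom exclusive.
    255 (white) marks pixels to regenerate; 0 (black) marks pixels to keep.
    """
    left, top, right, bottom = box
    return [
        [255 if left <= x < right and top <= y < bottom else 0 for x in range(width)]
        for y in range(height)
    ]

# Hypothetical example: an 8x6 thumbnail where the "suit" occupies columns 2-5, rows 2-5.
mask = rectangular_mask(8, 6, (2, 2, 6, 6))
for row in mask:
    print("".join("#" if v else "." for v in row))  # '#' = regenerate, '.' = keep
```

Only the masked pixels change between variations, which is what lets the client compare different suits while the rest of the storyboard frame stays identical.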

Outpainting, available through DALL-E 2 and Stable Diffusion (depending on the plugin and hosting service), expands the canvas in any direction beyond the original image dimensions. Say we have a 1080×1080 image of a building: with outpainting we could expand it to 1920×1080 and extend the building naturally, or add a new prompt to generate a realistic dragon or waterfall beside the building.
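The canvas arithmetic behind that 1080×1080 to 1920×1080 example can be sketched as follows. It assumes the original is centered on the wider canvas (tools may also let you anchor it to one side), and `outpaint_layout` is a hypothetical helper name.

```python
def outpaint_layout(orig_w, orig_h, new_w, new_h):
    """Center an original image on a larger canvas and report where it sits.

    Returns the (left, top) offset for pasting the original and the
    (left, top, right, bottom) box it occupies in the new canvas.
    Everything outside that box is the area an outpainting tool would generate.
    """
    if new_w < orig_w or new_h < orig_h:
        raise ValueError("outpainting canvas must be at least as large as the original")
    left = (new_w - orig_w) // 2
    top = (new_h - orig_h) // 2
    return (left, top), (left, top, left + orig_w, top + orig_h)

offset, keep_box = outpaint_layout(1080, 1080, 1920, 1080)
print(offset, keep_box)  # (420, 0) (420, 0, 1500, 1080)
new_pixels = 1920 * 1080 - 1080 * 1080
print(new_pixels)  # 907200
```

So a 420-pixel strip is generated on each side, and well over 40% of the final canvas is new content that the model has to make consistent with the original building.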

As any artworker or agency worker knows, briefing new artwork changes into the design studio can take up a lot of time. Another benefit of Stable Diffusion is that if other members of your team (such as producers and execs) are trained to use it, they can make these amends themselves rather than briefing them back into the studio.

While DALL-E 2, Midjourney and Stable Diffusion are the three most popular AI image generators, there are plenty of other tools out there, not least the influx of apps based on Stable Diffusion, such as Lensa.

Apps like Lensa use a seed, such as 20 different pictures of a person’s face, to generate new artwork in a variety of art styles and scenarios. The same idea can be applied in an agency’s local Stable Diffusion workflow, letting you explore different art styles and compositions for a main character while keeping that character’s design features consistent.
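In local Stable Diffusion tools, "seed" also refers to the random number that sets the starting noise for a generation (in diffusers, for example, via a `torch.Generator` created with `manual_seed`). Keeping that seed fixed while changing only the style keywords tends to keep the overall composition similar, though this isn’t guaranteed. The pure-Python sketch below only demonstrates the reproducibility part; `initial_noise`, the character description and the styles are all hypothetical.

```python
import random

def initial_noise(seed, size):
    """Starting noise for a generation: the same seed always yields the same values."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(size)]

# Hypothetical style variations that share one seed, so only the style keywords change.
base_subject = "knight character, full body, standing on a cliff"
styles = ["oil painting", "pixel art", "cel-shaded anime"]
SEED = 1234

for style in styles:
    prompt = f"{base_subject}, {style}"
    noise = initial_noise(SEED, 4)  # identical starting point for every style
    print(prompt, [round(v, 3) for v in noise])
```

Changing `SEED` instead of the style text is the complementary move: same description, new compositions to choose from.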

If agencies host their own Stable Diffusion instance, they can train their own models on internally sourced data. A game company, for example, might use all of its existing artwork as references for a custom model, allowing it to keep tighter design direction on concepts generated in the future. Custom models can focus on almost anything, from modern architecture to portraits of futuristic robots.
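Training a custom model starts with pairing each internal image with a caption. One common layout, used by Hugging Face’s `ImageFolder` dataset loader, is a `metadata.jsonl` file with a `file_name` column next to the images; diffusers’ text-to-image fine-tuning example reads captions from a `text` column by default. The sketch below writes that file’s contents. The file names, captions and the `studio_style` trigger word are hypothetical, and other training tools expect different layouts.

```python
import json

def build_metadata_jsonl(captions):
    """Turn {image file name: caption} into metadata.jsonl text, one JSON object per line.

    Uses the "file_name" column that ImageFolder expects plus a "text" caption column
    (an assumption: match the caption column your training script actually reads).
    """
    lines = [
        json.dumps({"file_name": name, "text": caption})
        for name, caption in sorted(captions.items())
    ]
    return "\n".join(lines) + "\n"

# Hypothetical internal artwork with captions written by the art team.
captions = {
    "hero_knight_01.png": "studio_style, armored knight hero, side view, flat colours",
    "castle_bg_03.png": "studio_style, castle background at dusk, painterly",
}
print(build_metadata_jsonl(captions), end="")
```

A shared trigger word in every caption is one way to give the fine-tuned model a handle for "our house style" that can be reused in later prompts.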

Introducing AI image generation tools into your workflow and how to write great prompts

Well-written prompts are what separate a great image from a poor one, and writing them is one of the things people struggle with most. If you’re serious about AI image generation, there are books and guides on prompt writing that are worth reading, such as the free DALL-E 2 Prompt Book.

It’s important for prompts to give specific detail about the style of artwork you’re hoping to generate. Within reason, the more specific the information in your prompt, the better the results, because the model draws on what it learned from training images associated with those words. So, if I include ‘35mm focus short depth of field’ in one of my prompts, the model will lean on what it learned from images described that way.

What I usually do is focus on one or two specific elements in the piece. So, if I’ve created a couple of compositions with multiple characters, I’ll focus on one character at a time, with details on their style, what they’re wearing, their aesthetics and more.

Weak prompts lack detail. An example would be:

‘Woman in a dress standing on a farm’

An example of a good prompt would be:

‘Mid 30s woman wearing a green dress on a farm, overcast, realistic photography, 35mm, distressed photograph’
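One way to make that level of detail a habit, especially for producers writing briefs, is to treat a prompt as a set of named slots (subject, lighting, style, camera, extras) and join whichever ones are filled. The `PromptSpec` structure below is a hypothetical convention for illustration, not something any of the tools require; it simply reproduces the two example prompts above.

```python
from dataclasses import dataclass, field

@dataclass
class PromptSpec:
    subject: str                 # who or what, plus where: the core of the image
    lighting: str = ""           # e.g. "overcast", "golden hour"
    style: str = ""              # e.g. "realistic photography", "oil painting"
    camera: str = ""             # e.g. "35mm", "short depth of field"
    extras: list[str] = field(default_factory=list)  # any other finishing details

    def to_prompt(self):
        """Join the filled-in slots into one comma-separated prompt."""
        parts = [self.subject, self.lighting, self.style, self.camera, *self.extras]
        return ", ".join(p.strip() for p in parts if p.strip())

weak = PromptSpec(subject="Woman in a dress standing on a farm")
strong = PromptSpec(
    subject="Mid 30s woman wearing a green dress on a farm",
    lighting="overcast",
    style="realistic photography",
    camera="35mm",
    extras=["distressed photograph"],
)
print(weak.to_prompt())
print(strong.to_prompt())
```

Empty slots make it obvious at a glance which details a prompt is missing.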

A more recent development is the "negative" prompt, supplied alongside your main prompt. Because the prompt above can match a wide range of references, we may get images with very different elements, such as cows, different types of crops, or people other than the "mid 30s woman". By adding a negative prompt such as "children, cows, sunny day, cartoony, blurry", we essentially "delete" those elements, or at least make them much less likely, giving us more control over the generated image.
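In Stable Diffusion tooling, the negative prompt is usually just a second text field sent with the main one; Hugging Face diffusers pipelines, for example, accept a `negative_prompt` argument, while Midjourney uses a `--no` parameter instead. The dictionary below is a hypothetical request shape rather than any specific API, and `build_request` is an illustrative helper that tidies a list of unwanted terms.

```python
def build_request(prompt, negative_terms):
    """Bundle a prompt with a negative prompt, de-duplicating terms and keeping their order.

    Terms are lower-cased so "Cows" and "cows" count as the same exclusion.
    """
    seen = []
    for term in negative_terms:
        term = term.strip().lower()
        if term and term not in seen:
            seen.append(term)
    return {"prompt": prompt, "negative_prompt": ", ".join(seen)}

request = build_request(
    "Mid 30s woman wearing a green dress on a farm, overcast, realistic photography, "
    "35mm, distressed photograph",
    ["children", "cows", "sunny day", "cartoony", "blurry", "Cows"],
)
print(request["negative_prompt"])  # children, cows, sunny day, cartoony, blurry
```

Keeping a shared list of standard exclusions (for example "blurry" or "cartoony" for photographic work) helps the whole team get consistent results.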

In terms of setting up workflows with these tools, when producers write their briefs, they usually mention art styles and specific types of imagery. DALL-E 2 and Midjourney are both easy to use for beginners and provide great ways of experimenting with art styles and specific images for people who may not have specialist design skills.

As DALL-E 2 and Midjourney cost money once the starter credits are used up, it’s worth weighing how much you would spend having staff produce concept art and pre-production images against how much you would spend generating initial images with AI tools, which a design team can then flesh out into proper artwork.
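That comparison can be framed as simple arithmetic: staff time on a fully manual concept round versus reduced staff time plus AI image spend. The helpers below are a minimal sketch under that assumption; every input in the example call is a placeholder, not a figure from our agency, so substitute your own rates and timings.

```python
def concept_cost(hours, hourly_rate, images=0, cost_per_image=0.0):
    """Cost of one round of concept art: staff time plus any AI image spend."""
    return hours * hourly_rate + images * cost_per_image

def ai_saving(manual_hours, ai_assisted_hours, hourly_rate, images, cost_per_image):
    """Positive result means the AI-assisted route is cheaper for this concept round."""
    manual = concept_cost(manual_hours, hourly_rate)
    assisted = concept_cost(ai_assisted_hours, hourly_rate, images, cost_per_image)
    return manual - assisted

# Placeholder inputs only; replace with your own agency's numbers.
print(ai_saving(manual_hours=8, ai_assisted_hours=3, hourly_rate=50.0, images=40, cost_per_image=0.05))
```

In most realistic cases the staff-hours term dominates, which is why the real question is how much design time the AI-generated starting points actually save.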

Because AI tools require less specialist art skill (as long as you know how many prompts you need and how to write good ones), more people can be involved in the creative process or take ownership of specific tasks, rather than feeding design edits back and forth.

Internally, we use these tools to explore our own ideas more thoroughly and, alongside paid creative input, to provide more alternatives quickly. We’ve also opened the tools up to clients directly, so we can establish strong creative directions at the start instead of risking time and money on work the client later changes their mind about.

Instead of putting time and money into mock-ups, we can give our designers more of both to spend on the finished product, ultimately giving our clients better value for money.

AI ethics, considerations and what’s next

As we’re only at the very beginning of realizing AI’s full potential, there are important ethical points to consider, especially as there are no clear legal guidelines (yet!) on copyright and ownership.

Three artists have filed a class action lawsuit against Stability AI (Stable Diffusion) and Midjourney over their artwork being scraped for training data without consent or compensation. Getty Images has also sued Stability AI, saying it believes that the company “unlawfully copied and processed millions of images protected by copyright.”

It’s important to stress, again, that AI image generation should only be used for concept development and pre-production.

Some image generation tools, such as DALL-E 2 and Stable Diffusion, are taking active measures to protect users with regard to the images scraped from across the internet to train their models, but there is no way of guaranteeing that anything generated is free of copyrighted material or NSFW imagery.

With AI tools already incredibly popular, we’re likely to see plenty of news about industry regulation this year. All of that said, these tools are still safe to use for concept development and pre-production, and I’d recommend that producers, artworkers and agency staff get ahead of the curve now by familiarizing themselves with what’s out there.

An easy way to get started is by simply visiting the DALL-E website and throwing yourself into the resources that are out there. AI is only going to become more disruptive in the coming years, and knowing how to use image generation tools will be a useful skill moving forward.
