The Black Box Problem: A Convenient Scapegoat

In the increasingly digital world of the post-pandemic age, companies face a customer experience (CX) challenge. At the same time, CX is becoming a key differentiator as more businesses embrace digital ways of operating. CX is determined by the gap between what a business delivers and what its customers expect, so it can be summarized in a simple equation: CX = brand delivery – customer expectation. A satisfying CX, then, is one in which brands deliver exactly what their customers expect.
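To make the sign convention of the equation concrete, here is a minimal Python sketch. It assumes, purely for illustration, that delivery and expectation can be scored on the same hypothetical 1 to 10 scale; the article does not say how either quantity is measured.

```python
def cx_gap(brand_delivery: float, customer_expectation: float) -> float:
    """CX = brand delivery - customer expectation (hypothetical shared scale)."""
    return brand_delivery - customer_expectation


def describe(gap: float) -> str:
    # Zero means the brand delivered exactly what the customer expected.
    if gap == 0:
        return "met expectation"
    return "exceeded expectation" if gap > 0 else "fell short"


# Hypothetical (delivery, expectation) scores on a 1-10 scale.
for delivery, expectation in [(8, 8), (6, 8), (9, 7)]:
    gap = cx_gap(delivery, expectation)
    print(delivery, expectation, gap, describe(gap))
```

A gap of zero corresponds to the satisfying case described above; a negative gap means the brand fell short of what the customer expected.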

Although companies have perfected the delivery of their products and services, customer expectation is very difficult to gauge without the tacit intelligence of a human being. Customer expectation is volatile: it changes rapidly depending on what customers have seen in their digital environment.

Fortunately, artificial intelligence (AI) can mimic human decisions with high accuracy when it is trained on sufficient data. Moreover, digital channels are well suited to capturing massive amounts of customer behavior data that can be used to train an AI, including information about customers’ browsing habits, interests, past purchases, buying behavior, willingness to pay for certain items and more. AI is therefore becoming a crucial tool for gauging customer expectation online, where the customer is invisible, and gauging that expectation is a prerequisite to providing a consistently good CX. So why aren’t more businesses using AI?

Not Everything You Can’t Explain Is a Black Box

Despite the importance of AI for CX, many companies are still reluctant to use it, especially for direct customer engagement. The root cause of this reluctance is fear. Business leaders fear unfamiliar technology, an uncertain return on investment (ROI) given its high total cost of ownership, and the uninterpretable nature of many AI solutions (a.k.a. the “black box” problem).

As AI becomes more pervasive in consumer technologies and its performance keeps improving, its business applications are also gaining traction. This has led to a growing number of AI software-as-a-service (SaaS) vendors, which keeps the total cost of ownership for business AI affordable. However, the black box problem continues to be a convenient scapegoat for those who have no real excuse other than the fear of ceding control to something they don’t understand, and who are ultimately afraid of change.

In reality, businesses use many technologies that they don’t fully understand and can’t explain. It’s safe to say that every company uses computers today, but it’s doubtful that anyone at a typical company can explain how a computer works, since that requires esoteric knowledge of semiconductor physics. Yet no one claims that the computer is a black box and therefore shouldn’t be used. Every business also uses the internet. But can anyone there explain how each of the seven layers of the OSI model of network communication works?

Doubtful!

Yet, the internet is not considered a black box. The list of examples goes on.

Not All AI-Based Solutions Are Black Boxes

Although every business currently uses some “black box-ish” technology that it can’t explain, many business applications of AI are not black boxes at all. In fact, many AI solutions use simple, interpretable models (e.g. linear and logistic regression, decision trees, additive models, etc.). Whether a system counts as AI depends less on the kind of model it uses than on two criteria:

- the ability to make proper decisions and/or subsequent actions autonomously.

- the ability to learn and adapt to improve its performance over time.
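As an illustration of why a model such as logistic regression counts as interpretable, the sketch below scores a customer with a logistic model and reports each feature’s additive contribution to the log-odds. The feature names, coefficients, and customer record are hypothetical, not taken from any real system.

```python
import math

# Hypothetical coefficients for a logistic model predicting whether a customer
# accepts an offer. Names and values are illustrative assumptions only.
INTERCEPT = -1.0
COEFFICIENTS = {
    "visits_last_week": 0.4,
    "past_purchases": 0.8,
    "cart_abandonments": -0.6,
}


def explain(customer: dict[str, float]) -> tuple[float, dict[str, float]]:
    """Return the predicted probability and each feature's additive
    contribution (coefficient * value) to the log-odds."""
    contributions = {name: w * customer[name] for name, w in COEFFICIENTS.items()}
    log_odds = INTERCEPT + sum(contributions.values())
    probability = 1.0 / (1.0 + math.exp(-log_odds))  # logistic (sigmoid) link
    return probability, contributions


prob, parts = explain({"visits_last_week": 3, "past_purchases": 2, "cart_abandonments": 1})
print(f"p(accept) = {prob:.3f}")
# List the features from most to least influential for this one decision.
for name, value in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"  {name}: {value:+.2f}")
```

Because every contribution is just a coefficient times a feature value, the reason behind any single decision can be read off directly, which is not possible in the same direct way for a deep neural network.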

Therefore, even a simple linear model can be the core of an AI system, as long as it is retrained frequently enough to capture the essential dynamics of the data it models, so that it can automate decisions and actions as new data keeps flowing in.
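A minimal sketch of this idea, assuming a single hypothetical input (page views) and output (spend): an ordinary least squares line that is refit on a sliding window of recent observations every time a new one arrives, and that chooses an offer on its own from its current prediction. The window size, threshold, offer names, and data are all illustrative assumptions.

```python
from collections import deque


class RollingLinearModel:
    """One-feature linear model y = a + b*x, refit by ordinary least squares
    on the most recent `window` observations (hypothetical setup)."""

    def __init__(self, window: int = 50, threshold: float = 100.0):
        self.data = deque(maxlen=window)  # oldest observations drop out automatically
        self.threshold = threshold
        self.a = 0.0
        self.b = 0.0

    def observe(self, x: float, y: float) -> None:
        # Learning: every new observation triggers a refit on recent data.
        self.data.append((x, y))
        self._refit()

    def _refit(self) -> None:
        n = len(self.data)
        mean_x = sum(x for x, _ in self.data) / n
        mean_y = sum(y for _, y in self.data) / n
        sxx = sum((x - mean_x) ** 2 for x, _ in self.data)
        sxy = sum((x - mean_x) * (y - mean_y) for x, y in self.data)
        self.b = sxy / sxx if sxx > 0 else 0.0
        self.a = mean_y - self.b * mean_x

    def predict(self, x: float) -> float:
        return self.a + self.b * x

    def decide(self, x: float) -> str:
        # Autonomy: act on the prediction without a human in the loop.
        return "show premium offer" if self.predict(x) >= self.threshold else "show standard offer"


model = RollingLinearModel(window=5, threshold=100.0)
for views in range(1, 6):
    model.observe(views, 10.0 * views)  # hypothetical regime: spend = 10 * views
print(model.b, model.decide(12))  # 10.0 show premium offer
for views in range(1, 6):
    model.observe(views, 5.0 * views)  # the data shifts: spend = 5 * views
print(model.b, model.decide(12))  # 5.0 show standard offer
```

The comments marked “Learning” and “Autonomy” correspond to the two criteria listed above. When the relationship in the data shifts, the refit slope follows it and the decision changes without any manual intervention, even though the model itself stays a fully readable straight line.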

In practice, many businesses and industries have limited data availability, computing resource constraints, response time requirements, or compliance obligations that preclude them from using more complex models. Because of this, they can only use simple interpretable models when developing their AI solutions. However, this doesn’t make them any less of an AI solution.

What Makes a Black Box… “Black”?

So what kinds of AI systems are truly black boxes? It is generally accepted that very complex models (e.g. deep neural networks, random forests, generative adversarial networks, gradient boosting machines, etc.) are essentially black boxes because they share two properties:

- they have huge numbers of model parameters

- they have nonlinear input-output relationships

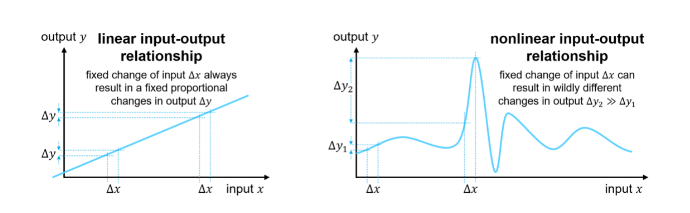

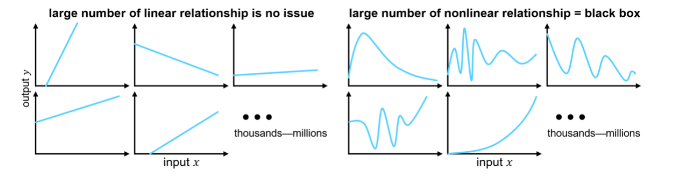

Black box models are difficult to interpret because they have vast numbers of model parameters (thousands to millions, or even more). An extreme example is GPT-3, a transformer-based deep learning language model with 175 billion parameters. Moreover, the relationships between the inputs and outputs are nonlinear. This means that the same fixed change in an input can produce very different changes in the output depending on the starting point: sometimes huge, sometimes tiny.
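Two quick Python checks illustrate both properties. The first counts weights and biases in a fully connected network with hypothetical layer sizes, showing how even a small network reaches tens of thousands of parameters; the second applies the same input change to a linear function and to a sigmoid, used here only as a stand-in for the nonlinear units inside neural networks.

```python
import math


def dense_param_count(layer_sizes: list[int]) -> int:
    """Number of weights plus biases in a fully connected network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))


def linear(x: float) -> float:
    return 3.0 * x + 1.0


def sigmoid(x: float) -> float:
    # Logistic function, a classic nonlinear unit in neural networks.
    return 1.0 / (1.0 + math.exp(-x))


# Hypothetical network: 100 inputs, two hidden layers of 256 units, 1 output.
print("parameters:", dense_param_count([100, 256, 256, 1]))  # 91905

step = 1.0  # the same fixed change applied to the input at each starting point
for x in [-6.0, 0.0, 6.0]:
    d_lin = linear(x + step) - linear(x)
    d_sig = sigmoid(x + step) - sigmoid(x)
    print(f"x = {x:+.0f}: linear change {d_lin:.3f}, sigmoid change {d_sig:.4f}")
```

The linear function’s output changes by the same amount at every starting point, while the sigmoid’s change is largest near x = 0 and nearly vanishes far from it. A real network combines thousands of such units, which is what makes its overall behavior so hard to summarize.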

Note that having bajillions of parameters alone does not make a model uninterpretable if the input-output relationships are linear. In that case, the change in the output is always the same fixed weighted sum of the changes in the inputs, no matter where you start, because sums, scalar multiples, and compositions of linear functions are still linear. Likewise, nonlinearity is not a problem if there are only a few nonlinear relationships. However, our brains are simply not capable of keeping track of a large number of them.
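The point about linearity can be checked numerically. This sketch stacks several linear layers (hypothetical sizes, random weights, and no bias terms or activation functions, for simplicity), multiplies their weight matrices into a single row of per-input weights, and confirms that the collapsed model produces the same output as the full stack. Thousands of parameters reduce to three numbers that can be read like the coefficients of an ordinary linear model.

```python
import random


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product: (rows of a) x (columns of b)."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def forward(layers: list[list[list[float]]], x: list[list[float]]) -> list[list[float]]:
    """Feed the column vector x through each linear layer in turn (no activations)."""
    for w in layers:
        x = matmul(w, x)
    return x


random.seed(0)
sizes = [3, 50, 50, 50, 1]  # hypothetical: 3 inputs, three hidden layers of 50, 1 output
layers = [[[random.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_out)]
          for n_in, n_out in zip(sizes, sizes[1:])]

# Multiply the weight matrices together (later layers on the left) into one 1x3 matrix.
collapsed = layers[0]
for w in layers[1:]:
    collapsed = matmul(w, collapsed)

n_params = sum(len(w) * len(w[0]) for w in layers)
print("parameters in the stack:", n_params)  # 5200
print("equivalent per-input weights:", [round(c, 4) for c in collapsed[0]])

x = [[0.5], [-1.0], [2.0]]
print(forward(layers, x)[0][0], matmul(collapsed, x)[0][0])  # equal up to float rounding
```

Adding a nonlinear activation between the layers would break this collapse, which is exactly the combination of size and nonlinearity that makes a model a black box.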

What makes a black box model uninterpretable is complexity, nothing more! The black box problem is simply the inherent inability of the human brain to distill such complex models into something simple enough to explain in English or any other language. However, not everything that you don’t understand or can’t explain is a black box, and that includes many AI technologies. In fact, many business AI solutions use simple models and are perfectly interpretable.

At the end of the day, the businesses that take the risk and implement AI are the ones that will outrun competitors with better customer and business insights, and a superior CX that will keep customers coming back. The next time you hear the naysayers rejecting AI on the grounds of the black box problem, ask yourself: are you willing to risk having an inferior CX for your customers over the immaterial fear of a convenient scapegoat?

[To share your insights with us, please write to sghosh@martechseries.com]
